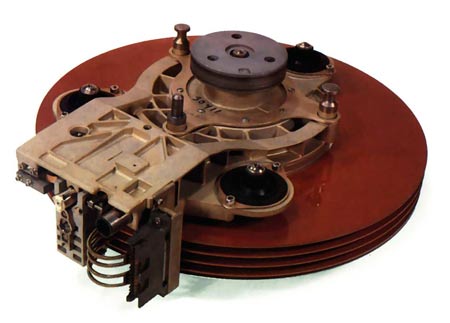

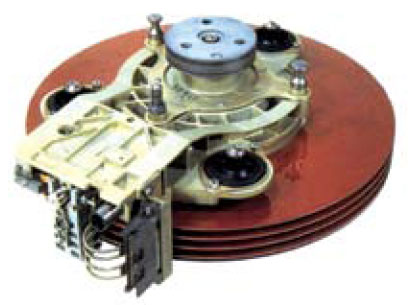

Who would have thought that the hard disk appeared almost 60 years ago? The first HDD was invented by IBM and was called the IBM 350; before it, there were no other disk prototypes. It became available for purchase in September 1956 as part of the new IBM 305 RAMAC computer system. The drive consisted of fifty 24-inch aluminum disks spinning at 1200 rpm. By today's standards its capacity was laughable: just 5 megabytes in total. Nevertheless, engineers consider the IBM 350 a real technical breakthrough, because a single such drive could easily replace 62,500 punch cards. The hard drive was also much faster: users could reach the information they needed in a fraction of a second, whereas with magnetic tape they had to wait several minutes.
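The "62,500 punch cards" figure can be checked with simple arithmetic. The sketch below assumes, for illustration only (the text does not say so), that RAMAC held 5 million characters and that each standard IBM punch card holds 80 characters, one per column:

```python
# Back-of-the-envelope check of the punch-card comparison.
# Assumptions: 5 million characters of RAMAC capacity and
# 80-column IBM punch cards holding one character per column.
RAMAC_CHARACTERS = 5_000_000
COLUMNS_PER_CARD = 80


def cards_needed(characters: int, columns_per_card: int = COLUMNS_PER_CARD) -> int:
    """Number of punch cards needed to hold `characters` characters."""
    # Ceiling division: a partially filled last card still counts as a card.
    return -(-characters // columns_per_card)


if __name__ == "__main__":
    print(cards_needed(RAMAC_CHARACTERS))  # 62500
```

Under these assumptions the division comes out exactly at 62,500 cards, matching the figure in the text.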

The IBM RAMAC 305 hard drive: a large stack of disks

These disks were invented and built by Rey (Reynold) Johnson. In 1930 he was working as a school teacher, and even then he invented a machine that could automatically grade the tests he gave his students. IBM later bought this invention and invited Johnson to join its staff, so he retrained as an engineer at the company. In January 1952, he was offered the chance to open a research laboratory where he could pursue his passion: new technologies. Just a month later, he leased an entire building in San Jose, and his plans were ambitious: he rented it for five years at once. He began equipping the laboratory while recruiting and interviewing staff.

Three months later, the laboratory already had 30 employees. All of them worked on fascinating problems, including a device for accessing arbitrary information recorded on punch cards, a dot-matrix printer, and time clocks that automatically recorded when a person arrived at and left work. The idea behind the first hard drive, namely using magnetic systems to store information, emerged during work on improving punch cards. The specialists thought everything through and began experimenting with possible media, including tape loops, wires, rods, drums and much more. In the end, magnetic disks were judged the best option, since they could hold more information and, thanks to rotation, made access to any piece of information very simple.

Later, in 1953, six experienced engineers joined the team. They had previously worked at McDonnell Douglas, where they had built an automatic data processing system. That same year, the US Air Force ordered a device that could store the contents of a filing cabinet of 50,000 records, and one of the main requirements was instant access to any record. At that point, however, the engineers had not yet settled on the materials and technologies later used in the IBM 350.

Resolving these issues took more than a year: in May 1955, IBM's management announced a new, revolutionary way of storing data for the first time, and in February 1956 the first hard disks went on sale.

Hard drives are still very popular today, although they are slowly becoming a thing of the past: their time is coming to an end as they are superseded by solid-state drives, which are faster and more reliable.

We already use many kinds of computers without hard drives: smartphones, tablets and laptops, in which drives built on flash memory chips are installed instead of boxes with spinning platters. And although solid-state drives cannot yet compete with classic HDDs on price per gigabyte, the outcome of this contest seems predetermined: high speed, low power consumption, high resistance to mechanical shock and compact size all suggest that sooner or later SSDs will finish off mechanical drives.

To understand how we got here, let's look at how storage drives have evolved over the past 50-plus years.

The first hard drive, the IBM 350 Disk Storage Unit, was shown to the world on September 4, 1956. It was a huge cabinet 1.5 m wide, 1.7 m high and 0.74 m deep; it weighed almost a ton and cost a fortune. Its spindle carried fifty 24-inch (61 cm) disks coated with paint containing a ferromagnetic material.

The disks rotated at 1200 rpm, and their total capacity was 4.4 MB, a fantastic amount at the time. The actuator carrying the heads weighed almost 1.5 kg, yet it took less than a second to move from the inner track of the top disk to the inner track of the bottom one. Imagine how fast this far-from-light mechanism had to move.

Developed by a small team of engineers, the IBM 350 Disk Storage Unit was part of the IBM 305 RAMAC vacuum-tube computer system. In the 1950s and 1960s, such systems were used only by large corporations and government organizations. Interestingly, all the ideas embodied in the very first hard drive, created back in the era of vacuum-tube computers, have survived to this day: modern drives still have a stack of disks coated with a ferromagnetic layer on which data tracks are written, and a block of read/write heads mounted on an arm driven by an electromechanical actuator. Incidentally, the idea of heads that float above the disk surface on the air flow created by the spinning disks was also proposed by IBM engineers, back in 1961. And almost until the end of the 1960s, everything related to hard drives came, one way or another, from IBM.

Disk race

In 1979, Alan Shugart, who had previously worked at IBM and taken part in developing the IBM 350 Disk Storage Unit, announced the founding of Seagate Technology; arguably, that moment marks the start of the hard drive's history as a mass-market product.

In that same year, 1979, Seagate created the first 5.25-inch drive, the 5 MB ST-506, and a year later it went into production. A year after that, the 10 MB ST-412 was released. These drives were used in the legendary IBM PC/XT and IBM PC/AT personal computers.

Western Digital, which later became Seagate's main competitor, was founded nine years earlier under the name General Digital Corporation (it was renamed in 1971, a year after its founding). It made single-chip controllers and various other electronics. In 1981, Western Digital produced the first single-chip controller for the Seagate ST-506/ST-412 hard drives, the WD1010. Over the next seven years, WD took part in the joint development of the ATA standard and designed chips for SCSI and ATA drives; in 1988 it acquired the disk division of Tandon Corporation, and in 1990 it introduced its own Caviar series of hard drives. This hardware is discussed in more detail on the electronics forum at http://www.tehnari.ru/f30/.

Overall, the 20 years from 1985 to 2005 saw a real boom in disk production. A huge number of companies appeared, most of which have since either become part of the two giants, Seagate and Western Digital, or simply ceased to exist. Just recall some once well-known disk brands: Conner, Fuji, IBM, Quantum, Maxtor, Fujitsu, Hitachi and Toshiba, all of which established themselves as makers of good hardware. One way or another, they all took part in the “disk race” that began when the HDD became an integral part of the personal computer.

Parallel universe

Almost from the very beginning, computers have used several different kinds of memory, simply because the perfect storage device has not yet been invented. If we had chips as fast as RAM, non-volatile like flash but with far greater rewrite endurance, and with the capacity of modern hard drives, we would not need to split memory into separate devices. Every existing type of storage is imperfect, and amid the general trend toward miniaturization, hard drives are especially so because of their mechanical nature. They grew out of the idea of getting a large amount of memory relatively cheaply, so the initial requirements for other parameters, such as speed and reliability, faded into the background. It is therefore not surprising that people have always looked for an alternative to the HDD.

Back in the 1970s and 1980s, there were repeated attempts to create solid-state drives (SSDs) based on dynamic memory, equipped with a special controller and a battery in case of power loss. At the time these were almost crazy projects that cost a lot of money, and they were used only in supercomputers (IBM, Cray) and in real-time data processing systems (for example, at seismic stations). Later, when the capacity of RAM chips grew significantly and their cost dropped, such drives appeared for personal computers (for example, the well-known Gigabyte i-RAM), but they remained a niche for enthusiasts and never became widespread because of their relatively high cost and small capacity.

Another branch of SSD development grew out of the idea of creating a high-capacity EEPROM chip. The problem was that cells which are only written once can be packed onto a chip quite densely, but if cells must be not only written but also erased and rewritten, each one needs erase circuitry, which greatly increases the size of the memory cell.

A solution was found in the early 1980s by Dr. Fujio Masuoka, a scientist at Toshiba. He proposed a compromise between two ways of erasing non-volatile memory: instead of clearing either the entire chip or a single cell, memory would be erased in fairly large blocks. In 1984, Masuoka presented his work at the IEEE International Electron Devices Meeting (IEDM), and in 1989, at the International Solid-State Circuits Conference, Toshiba showed the NAND flash concept it had developed. Back then, hardly anyone, even in their wildest dreams, would have imagined that a small chip with a complex data access scheme could compete with hard drives, which were already gaining momentum.
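Masuoka's compromise can be illustrated with a toy model. The Python sketch below is a simplified illustration, not Toshiba's design: the `NandBlock` class and the four-page block size are hypothetical, and erased pages are modeled as `None` rather than as all-ones bits. It demonstrates only the key rule: a page can be written once after an erase, while erasure happens for the whole block at once.

```python
from dataclasses import dataclass, field
from typing import List, Optional

PAGES_PER_BLOCK = 4  # hypothetical; real NAND blocks hold many more pages


@dataclass
class NandBlock:
    """Toy model of a single NAND erase block (illustrative, not a real chip)."""

    # None marks an erased page; real NAND represents the erased state as all 1 bits.
    pages: List[Optional[bytes]] = field(default_factory=lambda: [None] * PAGES_PER_BLOCK)
    erase_count: int = 0

    def program(self, page: int, data: bytes) -> None:
        """Write one page; allowed only if the page is currently erased."""
        if self.pages[page] is not None:
            raise ValueError(f"page {page} is already programmed; erase the block first")
        self.pages[page] = data

    def erase(self) -> None:
        """Erase the whole block at once: the smallest unit this model can erase."""
        self.pages = [None] * PAGES_PER_BLOCK
        self.erase_count += 1

    def rewrite_page(self, page: int, data: bytes) -> None:
        """Update one page by saving the block, erasing it and writing everything back."""
        saved = list(self.pages)
        saved[page] = data
        self.erase()
        for index, content in enumerate(saved):
            if content is not None:
                self.program(index, content)


if __name__ == "__main__":
    block = NandBlock()
    block.program(0, b"old")
    block.program(1, b"keep")
    block.rewrite_page(0, b"new")  # one small update costs a full block erase
    print(block.pages, block.erase_count)
```

The cost shown by `rewrite_page` (a whole-block erase for a single-page change) is the price paid for the smaller cell size that block erasure allows.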

Founded in that same year, 1989, the Israeli company M-Systems was the first to work on the idea of a flash drive, and in 1995 it launched DiskOnChip, a single-chip drive containing both flash memory and a controller. What's more, the firmware of this 8, 16 or 32 MB single-chip disk already included algorithms for tracking cell wear and for detecting and remapping bad blocks. Incidentally, in 1999 M-Systems was also the first to release USB flash drives, called DiskOnKey, and IBM signed a contract with the company to sell them in the United States under its own brand.
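Wear tracking and bad-block remapping of this kind are handled by what is now usually called a flash translation layer. M-Systems' actual firmware is not described here; the sketch below is a hypothetical, heavily simplified illustration (the `TinyFTL` class is invented for this example). Logical blocks are written out of place, the least-worn free physical block is chosen each time, and blocks marked bad are retired and never reused. It tracks only the mapping, not the stored data.

```python
from typing import Dict, Iterable, Set


class TinyFTL:
    """Hypothetical, minimal flash translation layer (illustration only).

    Maps logical block numbers to physical blocks, writes out of place,
    prefers the least-worn free block and never reuses blocks marked bad.
    """

    def __init__(self, physical_blocks: int, bad_blocks: Iterable[int] = ()) -> None:
        self.erase_counts = [0] * physical_blocks
        self.bad: Set[int] = set(bad_blocks)
        self.free: Set[int] = set(range(physical_blocks)) - self.bad
        self.mapping: Dict[int, int] = {}  # logical block -> physical block

    def _pick_free_block(self) -> int:
        if not self.free:
            raise RuntimeError("no usable free blocks left")
        # Wear leveling: the free block erased the fewest times wins (ties -> lowest number).
        return min(self.free, key=lambda block: (self.erase_counts[block], block))

    def write(self, logical: int) -> int:
        """Store a new version of `logical` and return the physical block used."""
        target = self._pick_free_block()
        self.free.remove(target)
        old = self.mapping.get(logical)
        if old is not None and old not in self.bad:
            # The stale copy is erased and returned to the free pool.
            self.erase_counts[old] += 1
            self.free.add(old)
        self.mapping[logical] = target
        return target

    def mark_bad(self, physical: int) -> None:
        """Retire a failing block and remap any logical block that lived on it."""
        self.bad.add(physical)
        self.free.discard(physical)
        for logical, block in list(self.mapping.items()):
            if block == physical:
                self.write(logical)


if __name__ == "__main__":
    ftl = TinyFTL(4, bad_blocks=[1])
    print([ftl.write(0) for _ in range(5)], ftl.erase_counts)
```

In this toy, rewriting the same logical block over and over rotates through all healthy physical blocks instead of wearing out a single one, which is the whole point of wear leveling.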

But it took about another 10 years for flash-based SSDs to become a mass-market product. In 2006, Samsung, by then the largest memory chip manufacturer, released the world's first laptop with a 32 GB SSD. Two years later, Apple introduced the MacBook Air, which could be ordered with an optional SSD, and from 2010 this laptop was produced exclusively with solid-state drives.

Modern SSDs certainly have drawbacks. On closer inspection, though, there are not many of them; by and large there is only one: the high cost per gigabyte compared with classic hard drives. But the semiconductor industry is developing very quickly: new types of memory are appearing, controller algorithms are improving, capacities are growing rapidly, and costs are gradually falling. And that is not all.

There is another important reason why competition is strong and prices quickly become attractive: solid-state drives are easy to manufacture. Assembling an SSD is essentially the same as assembling just the controller board of a hard drive; all it takes is a surface-mount assembly line. This is, of course, a simplification, but it is generally true. Building a classic hard drive is a much more complicated, and therefore more expensive, process. That is why nobody doubts that SSDs will soon begin to actively displace hard drives. The process has already begun.

50 years of hard drives!

IBM/Hitachi Commemorates the Half-Century History of the Entire Magnetic Storage Industry

About a year and a half ago, the IT community, led by Intel, celebrated the fortieth anniversary of the famous “Moore's law”, which for many years ahead predicted the pace of technological development of the semiconductor integrated circuit industry (memory, microprocessors, etc.) and to this day remains one of the cornerstones for those who drive the IT business.

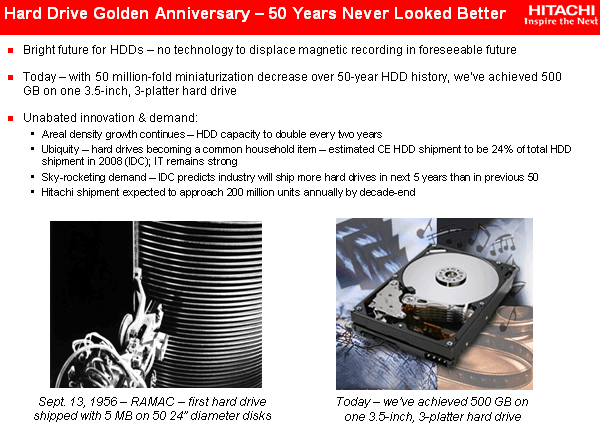

But today we have an equally, and perhaps even more, significant anniversary to celebrate: on September 13, 1956, exactly half a century ago, IBM introduced its first hard magnetic disk drive (such devices later received the semi-official nickname "Winchester").

Over time, this invention gave rise to a huge magnetic storage industry, without which no reasonably powerful computing device is now conceivable, since the vast majority of the information these devices work with is stored on magnetic media.

In fact, over half a century hard magnetic disk drives have become the main means of storing and serving the information rapidly accumulated by mankind, beating tape drives, optical media and semiconductor EPROM/flash alike. And although the future of magnetic drives has been questioned more than once (because of various physical limitations that scientists have each time managed to overcome with new effects and technologies), they will remain in service for decades to come thanks to their unique combination of important consumer properties. Whether magnetic drives will live to see their centennial, we will find out very soon: in another 50 years. ;)

We mentioned Moore's law for a reason: all these years the magnetic storage industry has developed alongside the semiconductor industry, and the two have much more in common than it might seem at first glance. Even the rates of miniaturization (growth in element density) “predetermined” by Moore's law for microchips are almost exactly the same for magnetic media. Suffice it to say that the minimum size of a magnetic recording bit on hard drive platters is now (and has been for the past few years) comparable to the minimum feature size of silicon transistors in the most advanced microprocessors and memory.

Interestingly, the first microchip, which set off the other, main branch of the information and computer technology (ICT) industry, appeared even later than the first hard drive: Jack Kilby's chip first worked on September 12, 1958, at Texas Instruments (Robert Noyce, later a co-founder of Intel, independently developed his own version at Fairchild Semiconductor). By the way, the invention was awarded the Nobel Prize in Physics in 2000, although there was little physics as such in creating the microchip: Kilby and Noyce “only” came up with a technology that completely revolutionized the electronics industry. Unfortunately, no one has yet received a Nobel Prize for inventing the hard drive. And it is unlikely anyone will...

So the first hard drive is two years older than the first microchip! (By the way, get ready: exactly two years from now, on September 12, 2008, the industry will celebrate the microchip's half-century anniversary. ;)) What was the first magnetic disk drive like? Unlike the tiny microchip (in 1958 the crystal fit on a fingertip), the first hard drive was a huge cabinet containing a stack of 50 large platters, each 24 inches (over 60 cm) in diameter.

The drive was called RAMAC (Random Access Method of Accounting and Control) and was developed at an IBM laboratory in San Jose, California (which later became the heart of Silicon Valley). The platters were coated with a “paint” made of magnetic iron oxide, similar to the one used on the world-famous Golden Gate Bridge in San Francisco.

This giant had a capacity of 5 MB (5 million bytes), which seems ridiculous by today's standards, but back then it was the high-end enterprise segment. ;) The platters were mounted on a rotating spindle, and a mechanical bracket (just one!) carried the read and write heads and moved up and down a vertical rod; bringing the heads to the desired magnetic track took less than one second.

As you can see, this concept largely served as the prototype for all subsequent hard drives: rotating rigid platters (“pancakes”) with a magnetic coating, concentric recording tracks, and fast access to any randomly chosen track (hence the name RAMAC). The difference is that today each magnetic surface has its own pair of read/write heads instead of one set shared by the entire disk stack. Fast access to an arbitrary location on the medium (random access) revolutionized storage, because it dramatically improved access performance compared with the magnetic tapes that dominated at the time. A single RAMAC weighed almost a ton (971 kg) and was leased for $35,000 a year (at the time, the price of 17 new cars)!

These days, Hitachi Global Storage Technologies, the successor to IBM's hard drive business, which has absorbed IBM's extensive hard drive expertise, is celebrating the 50th anniversary of this giant at DISKCON USA 2006 in Santa Clara, California.

The next major step for IBM in this field was the IBM 3340 drive. This “cabinet” was already smaller (about a meter high), and when it appeared in June 1973 it was regarded as a scientific “miracle”. With a recording density of 1.7 Mbit per square inch, it was equipped with small aerodynamic heads (that is, heads that for the first time “floated” above the spinning magnetic surface thanks to aerodynamic forces) and a sealed “box” (cartridge) enclosing the platters and heads. This protected the disks from dust and contaminants and made it possible to drastically reduce the working distance between head and platter (the “flying height”), which significantly increased recording density. The IBM 3340 is rightly considered the father of modern hard drives, because they are built on the same principles. These drives had 30 MB of fixed storage plus another 30 MB in a removable module.

This is what earned the drive the nickname “Winchester”, by analogy with the famous 30-30 Winchester rifle. Progress, by the way, affected not only the design and recording density but also the access time, which the developers managed to reduce to 25 milliseconds (compare that with 10-20 ms for today's much smaller hard drives)!

Later in 1973, IBM released the world's first small hard drive, the FHD50, based on the principles of the IBM 3340: the platters and heads were enclosed in a fully sealed case, and the heads did not move between the platters.

Incidentally, the principle of “one magnetic surface, one pair of heads” (that is, heads no longer moving between platters) was introduced a little earlier: in 1971, IBM released the 3330-1 Merlin model (named after the mythical medieval wizard), which applied this principle. The same model saw the first implementation of servo technology for positioning heads over the platters, which later evolved into IBM's TrueTrack Servo Technology (IBM alone holds more than 40 patents on it). In modern drives, servo marks are spaced about 240 nm apart and allow the head to be positioned on a track with an accuracy of 7 nanometers!

Interestingly, IBM 3340 drives were intended for shared use: companies could rent space on them at $7.81 per megabyte per month. So there was also demand for small individual drives.

In 1979, IBM introduced thin-film magnetic head technology, which raised recording density to 7.9 million bits per square inch.

In 1982, Hitachi, Ltd. surprised the world by releasing the first drive with a capacity of over 1 GB, the H-8598, thus breaking a psychologically significant barrier.

This 1.2 GB drive contained ten 14-inch platters and two sets of read/write heads in a dual-actuator configuration. With a read speed of 3 MB per second (for comparison, desktop hard drives reached this speed only about a decade later), the H-8598 was 87% faster than the previous generation of products. Six years later, Hitachi set another record by releasing a 1.89 GB drive with eight 9.5-inch disks. This H-6586 was the first mainframe-class drive that a person could carry (it weighed about 80 kg).

In the 1980s, two other significant events took place in the magnetic storage industry. First, compact 5.25-inch drives were released that fit into the corresponding bays of IBM PC personal computers (the first IBM 5100 Portable Computer was created in 1975, and for some time the 51x0 line, and later the famous IBM PC 5150, used cassette drives). Then, at the end of the 1980s, the American company Conner Peripherals, founded in 1986 by Seagate co-founder Finis Conner, became the first in the world to bring 3.5-inch hard drives to market. This opened a new era in the magnetic storage industry: this form factor long remained the main one for hard drives, and larger drives soon stopped being produced as having no future.

IBM repeatedly pioneered the production of miniature laptop hard drives, and in 1999 it introduced the world's first one-inch hard drive, the famous Microdrive.

Curiously, these ultra-miniature disks spun their platters at the same speed (3600 rpm) as the giant H-8598 and H-6586, yet their capacity and speed turned out to be noticeably higher! And this progress was achieved in just 10-15 years! Compared with RAMAC, 323 thousand Microdrives would fit into the same space, with a total capacity of 2,500 terabytes! In 2005, Hitachi GST shipped its 10 millionth Microdrive. The first 2.5-inch hard drive was also released by IBM, in 1991: called Tanba-1, it was the forerunner of the Travelstar line. It had a capacity of 63 MB and weighed only 215 grams (3.5-inch drives of the time weighed three times as much). However, the shock resistance of these portable drives was poor by today's standards: 60 times lower than that of their modern counterparts.

By the way, Hitachi GST still firmly holds first place worldwide in the production of small-form-factor hard drives.

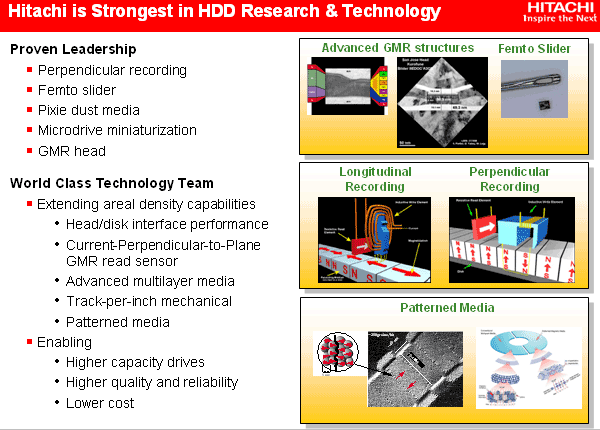

In the mid-1990s, IBM offered at least two more revolutionary technologies that are now used by all hard drive manufacturers. The first is magnetic heads based on the giant magnetoresistive effect (so-called GMR heads, which first appeared in Deskstar 16GP drives in 1997). They sharply increased recording density (up to 2.7 Gbit per square inch) and over the following decade allowed density to grow at times even faster than Moore's law. :) I have written about this more than once, so I won't repeat myself. The second is the so-called No-ID sector format (a new way of formatting magnetic platters), which increases density by another 10%. It is also now used by all manufacturers.

Around the same time, the rotation speed of 3.5-inch hard drive platters began to rise sharply: PC drives moved together to 5400 and then to 7200 rpm (the latter has been the standard for a decade), while enterprise drives spun up to 10,000 and then to 15,000 rpm. IBM/Hitachi played a part here too, although Seagate believes it was the one that made the industry's first 15,000 rpm drive. ;) Interestingly, however, Hitachi was the first company to push rotation speed above 10,000 rpm, to 12,000 rpm, in its 9.2 GB DK3F-1 model, released in 1998, which broke performance records. It used platters of a new, unique design with a diameter of 2.5 inches (these later became the standard in 15,000 rpm drives).

In 2003, IBM introduced the so-called [...], whose dimensions are significantly smaller than before. This allowed the company, which had by then become Hitachi GST, to release several interesting new drive series. By the way, the flying height of modern heads above the platter surface is, in proportion, comparable to a giant airliner flying ... 1 millimeter above the ground!

The industry marked the 50th anniversary of the hard drive with another remarkable achievement: for the first time in 50 years, drives appeared that use a different magnetic recording principle from the one used in RAMAC, namely perpendicular magnetic recording (PMR), in which the magnetic domains are oriented across, rather than along, the thin magnetic film on the platter surface. Hitachi GST demonstrated perpendicular magnetic recording in April 2005 on samples with a recording density of 233 Gbit per square inch. The perpendicular orientation of the magnetic domains in the thin film (which is somewhat thicker than in comparable longitudinal-recording models) significantly improves the stability of stored data, which is necessary to overcome the so-called superparamagnetic effect. True, the first company to launch [...] in the winter of 2006 was Seagate, not Hitachi or Toshiba. But Hitachi equipped [...], released in the summer of 2006, with the second generation of PMR technology. To be fair to history, though, both longitudinal and perpendicular recording were considered for RAMAC, and longitudinal was chosen, which determined the development of the industry for half a century! :)

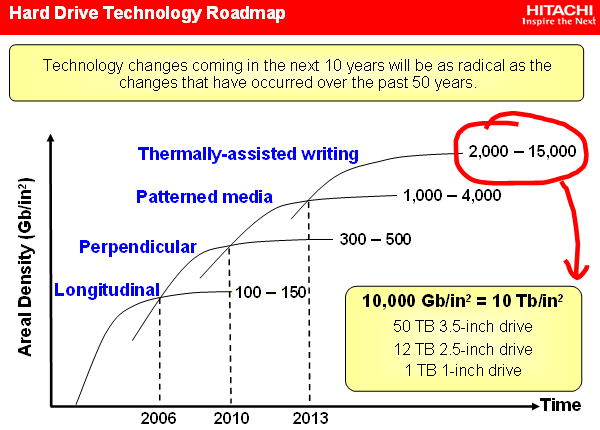

Theoretically, PMR can raise recording density to 500 Gbit per square inch (roughly 500 GB of capacity for a 2.5-inch hard drive). Hitachi ties further density increases to so-called patterned media (in which the film is “granulated” in advance to the desired recording density), which should increase storage capacity by an order of magnitude. Next in line is thermally assisted magnetic recording, with an estimated density limit of up to 15,000 Gbit per square inch, which will extend the life of magnetic disk drives to 2020 and beyond.

According to research by scientists at the University of California, Berkeley, some 400,000 terabytes of new information are now created each year by email alone. A population of 6.3 billion people creates 800 MB of information per person per year, that is, about 5,000,000 terabytes of new data annually, 92% of which is stored on hard drives. This, of course, does not include information that is copied and replicated many times over. Industry analysts forecast hard drive sales to grow from 409 million units in 2006 to more than 650 million units in 2010, that is, by 12-15% a year.
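The figures in this paragraph can be cross-checked with a few lines of arithmetic. The sketch assumes decimal units (1 TB = 1,000,000 MB) and treats 2006 to 2010 as four years of compound growth; both are assumptions made for this illustration.

```python
POPULATION = 6.3e9      # people, as quoted in the text
MB_PER_PERSON = 800     # new information per person per year, in MB
MB_PER_TB = 1_000_000   # assumption: decimal units, 1 TB = 10**6 MB


def yearly_new_data_tb(population: float, mb_per_person: float) -> float:
    """Total new data created per year, in terabytes."""
    return population * mb_per_person / MB_PER_TB


def compound_annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    total = yearly_new_data_tb(POPULATION, MB_PER_PERSON)
    rate = compound_annual_growth(409e6, 650e6, years=4)  # 2006 -> 2010
    print(f"{total:,.0f} TB per year")    # 5,040,000 TB per year
    print(f"{rate:.1%} growth per year")  # 12.3% growth per year
```

The total agrees with the "about 5,000,000 terabytes" in the text, and the compound rate of roughly 12.3% a year sits at the lower end of the quoted 12-15% range.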

A large share of this growth will come from the rapidly expanding consumer electronics market: the hard drive will soon become an indispensable part of typical home electronic devices. And demand, as we know, creates supply. So there is no reason to doubt the prospects and viability of the magnetic disk drive industry.

The industry has come close to breaking another psychologically important milestone, this time 1 terabyte for a single drive (3.5-inch form factor). Who will be the first to release such a drive? Closest are Seagate, which has already released a 750 GB hard drive, and Hitachi. Both manufacturers already sell hard drives with 160 GB platters (up to 187.5 GB at Seagate). However, Hitachi has long mastered the five-platter design, while Seagate is still limited to four platters (and 250 GB platters are still a long way off). So it is Hitachi that has come closest to the terabyte. Moreover, Hitachi GST employees claim that they will begin shipping a 1 TB drive as early as the end of this year! When will the official announcement of this model come? Might it be on the Winchester's 50th anniversary? ;)

A bad sector on a hard drive is a tiny cluster of disk space: a faulty sector of the disk that does not respond to attempts to read or write it.

For example, if you pick up an ordinary DVD, it may have scratches or cracks, which cannot be repaired, or a spot of dirt, which can be carefully removed so the disc works again. The same goes for hard drives, whether magnetic or solid-state: there are two types of bad sectors, those caused by physical damage, which cannot be repaired, and those caused by software errors, which can be fixed.

Types of Bad Sectors

There are two types of bad sectors: physical and logical, also called "hard" and "soft".

Physical (hard): a bad sector is a storage cluster on the hard drive that is physically damaged. Perhaps your computer (laptop) was dropped, or it shut down improperly because of a power surge, or the disk is simply worn out; physical damage takes many forms. Unfortunately, there is no way to fix it...

Logical (soft): a bad sector is a storage cluster on the hard disk that does not work properly. Perhaps the operating system, for some reason, encountered a failure while reading from the hard drive and marked that cluster as a bad sector. Such bad sectors can be fixed by overwriting the disk with zeros or by performing a low-level format.
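To show what a surface scan does at the lowest level, here is a minimal, read-only Python sketch: it reads a disk (or disk image) one sector at a time and records the sectors whose reads fail with an I/O error. It is not how Victoria or the built-in OS check work internally. The 512-byte sector size is an assumption (many modern drives use 4096-byte physical sectors), reading a raw device such as `/dev/sdX` on Linux requires administrator rights, and the OS cache may hide some errors that dedicated tools would catch. The function `find_unreadable_sectors` is hypothetical, and it deliberately never writes, because overwriting sectors with zeros destroys their data.

```python
import io
from typing import List

SECTOR_SIZE = 512  # assumption: many modern drives use 4096-byte physical sectors


def find_unreadable_sectors(path: str, sector_size: int = SECTOR_SIZE) -> List[int]:
    """Read `path` one sector at a time; return the numbers of sectors that fail to read.

    Read-only sketch: nothing is ever written to the disk.
    """
    unreadable: List[int] = []
    with open(path, "rb", buffering=0) as disk:
        size = disk.seek(0, io.SEEK_END)  # total size in bytes
        sector_count = (size + sector_size - 1) // sector_size  # count a partial last sector
        for sector in range(sector_count):
            try:
                disk.seek(sector * sector_size)
                disk.read(sector_size)
            except OSError:
                # An I/O error here is what a scanner would report as a bad sector.
                unreadable.append(sector)
    return unreadable
```

For example, `find_unreadable_sectors("disk.img")` scans an image file; on a healthy disk the result is an empty list.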

Causes of Bad Hard Disk Sectors

Unfortunately, new devices often have factory defects, and a hard drive quite often arrives with bad sectors already present... And, as mentioned earlier, bad sectors can be caused by drops or by dust getting inside. Yes, a tiny speck of dust can start the gradual destruction of your hard drive. There are many possible causes, and in fact, knowing that your hard drive failed because of dust will neither solve the problem nor improve your mood.

Again, as described above, a sector can be marked as bad without actually being bad. Viruses used to do this, but there can also be other system malfunctions: for example, power may be lost during a write, and the system will then mark the sector as faulty. Here too there can be many causes, but some of them are fixable!

Data loss and hard drive failure

If you think that bad sectors and a failing hard disk are rare, you are very much mistaken! No one is safe from this, so it is recommended to keep copies of important information, for example in cloud storage, where your data is better protected from loss.

Even if special programs manage to bring the number of bad sectors on the hard drive back to zero, this will not recover the information that was stored in them.

Always back up important information to another medium or to the cloud. If a disk error scan runs every time you turn on the computer, or if the hard drive starts making strange sounds, immediately copy your data and start diagnosing the drive to find out what the problem is. After all, it may soon say goodbye to you...

How to check and fix bad sectors

One of the most popular hard drive testing programs is Victoria, which is free; there are plenty of other free and paid tools as well, and of those we will look at HDD Regenerator.

Every operating system has a built-in hard drive check. You may have seen it run after the computer was shut down incorrectly, or, if your hard drive is already failing, at every startup. You can also start a scan manually: right-click any disk => Properties => Tools => in the "Scan disk" section, click "Run scan".
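On Windows, this built-in check corresponds to the chkdsk utility, which can also be run from an administrator command prompt. The sketch below mainly builds the command line. `/f` (fix file system errors) and `/r` (locate bad sectors and recover readable information) are real chkdsk switches. The helper names `chkdsk_command` and `run_chkdsk` are hypothetical.

```python
import platform
import subprocess


def chkdsk_command(drive: str, fix_errors: bool = True, scan_bad_sectors: bool = False) -> list:
    """Build a chkdsk command line for a drive letter such as "C", "c:" or "C:\\"."""
    letter = drive.rstrip(":\\").upper()
    if len(letter) != 1 or not letter.isalpha():
        raise ValueError(f"not a drive letter: {drive!r}")
    cmd = ["chkdsk", f"{letter}:"]
    if scan_bad_sectors:
        cmd.append("/r")  # locate bad sectors and recover readable data (implies /f)
    elif fix_errors:
        cmd.append("/f")  # fix file system errors
    return cmd


def run_chkdsk(drive: str, **options) -> int:
    """Run chkdsk on Windows (needs an administrator prompt) and return its exit code."""
    if platform.system() != "Windows":
        raise OSError("chkdsk is a Windows tool")
    return subprocess.run(chkdsk_command(drive, **options)).returncode


if __name__ == "__main__":
    print(" ".join(chkdsk_command("D", scan_bad_sectors=True)))  # chkdsk D: /r
```

The demo only prints the command. Calling `run_chkdsk` on the system drive typically makes chkdsk offer to schedule the check for the next restart, because the volume is in use.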

The standard tool will show you the status of your hard drive, and minor problems will be fixed during the scan.

Checking with Victoria

This program is free and easy to download, so if a site is trying to charge you money for it, you can safely close that site. It can run both inside Windows and under DOS (from a Live CD). I recommend scanning from a boot disk: that way, checking and repairing the disk will be more effective!

Also, do not forget that while bad sectors are being repaired or overwritten, all information on the hard disk may be lost! So if you decide to try repairing your hard drive, back up your data first. During routine diagnostics, the data will stay where it is 🙂

As I already said, it is better to scan the hard drive from a LiveCD. You can download one from any torrent tracker, and it will contain all the programs you need for working with hard drives.

1. Download the resuscitator: you don't have to use the tfile resource, other sites work too. But if you do download from tfile, don't click the big blue "Download torrent" button, because it installs additional unnecessary software. Instead, click the "download torrent" link under it, as in the picture.

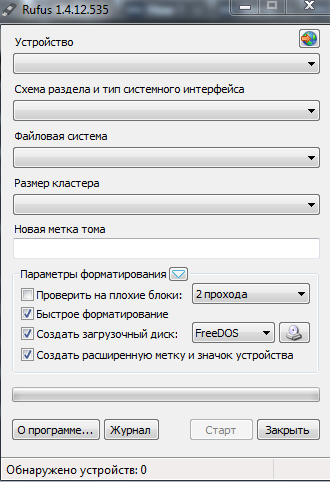

2. Write the downloaded image to a disk or flash drive: I recommend using the Rufus app for this.

Download Rufus => run the utility => connect a USB flash drive (an SD card can be written the same way) => in the "Device" field, select the USB flash drive => next to "Create a bootable disk", click "Select" => find the resuscitator image file you downloaded above (with the .iso extension) => select it, click "OK", then click "Start" in the main window => wait for the process to finish.
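Rufus is a graphical tool, but writing an image in raw mode boils down to a byte-for-byte copy of the .iso onto the flash drive. This is roughly what Rufus offers as "DD image" mode. In its default ISO mode, it prepares a file system and copies files instead. The sketch below works under those assumptions and also assumes a hybrid ISO that boots when copied raw. It is demonstrated on files standing in for the ISO and the flash drive. `write_image` and `verify_image` are hypothetical names, and writing to a real device requires administrator rights and erases it.

```python
import os
import tempfile

CHUNK = 4 * 1024 * 1024  # 4 MiB per write


def write_image(image_path: str, target_path: str, chunk: int = CHUNK) -> int:
    """Copy an image byte-for-byte onto the start of the target; return bytes copied."""
    copied = 0
    with open(image_path, "rb") as src, open(target_path, "r+b") as dst:
        while block := src.read(chunk):
            dst.write(block)
            copied += len(block)
        dst.flush()
        os.fsync(dst.fileno())
    return copied


def verify_image(image_path: str, target_path: str, chunk: int = CHUNK) -> bool:
    """Check, chunk by chunk, that the target starts with exactly the image's bytes."""
    with open(image_path, "rb") as img, open(target_path, "rb") as dev:
        while block := img.read(chunk):
            if dev.read(len(block)) != block:
                return False
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        iso = os.path.join(d, "resuscitator.iso")  # stand-in for the downloaded image
        usb = os.path.join(d, "flash_drive.img")   # stand-in for the flash drive
        with open(iso, "wb") as f:
            f.write(os.urandom(1024 * 1024))
        with open(usb, "wb") as f:
            f.write(bytes(8 * 1024 * 1024))
        print("copied", write_image(iso, usb), "bytes; verified:", verify_image(iso, usb))
```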

3. When you turn on the computer, go to the BIOS or the Boot menu and select the flash drive (or disc) you just wrote as the boot device;

4. Once the computer boots from the resuscitator, select the program you want for working with the hard drive. In this example, Victoria.

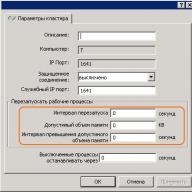

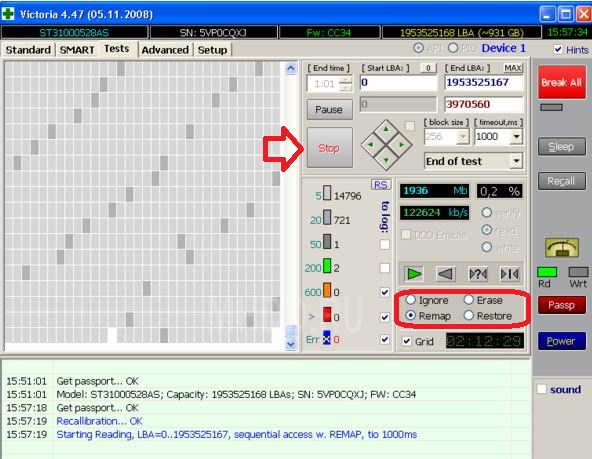

5. In the list of detected drives, select the one you want to test and go to the Test tab.

In the Test tab, choose what should happen during the scan:

Ignore - skips bad sectors;

Remap - reassigns bad sectors;

Erase - zeroes bad sectors, overwriting them repeatedly until the sector is replaced from the reserve area.
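Victoria sends the actual repair commands to the drive's firmware, so the following is only a toy model of the decision logic behind the three modes as described above. Ignore records a bad sector and moves on. Remap points it at a spare from the reserve area. Erase overwrites it and falls back to the reserve area only if the sector is physically damaged. The real Erase may retry several times, while the toy tries once. `ToyDisk`, `scan` and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    IGNORE = "Ignore"
    REMAP = "Remap"
    ERASE = "Erase"


@dataclass
class ToyDisk:
    """Hypothetical in-memory disk: one int of "data" per sector plus damage markers."""
    data: list
    soft_bad: set   # logical bad sectors: unreadable until rewritten
    hard_bad: set   # physical bad sectors: rewriting does not help
    spares: int     # free sectors left in the reserve area
    remapped: set = field(default_factory=set)

    def readable(self, lba: int) -> bool:
        return lba in self.remapped or (lba not in self.soft_bad and lba not in self.hard_bad)

    def remap(self, lba: int) -> bool:
        """Point the LBA at a spare sector; the old contents are lost."""
        if self.spares == 0:
            return False
        self.spares -= 1
        self.remapped.add(lba)
        self.data[lba] = 0
        return True

    def erase(self, lba: int) -> bool:
        """Overwrite with zeros; use the reserve area only if the sector is physically bad."""
        self.data[lba] = 0
        if lba in self.hard_bad:
            return self.remap(lba)
        self.soft_bad.discard(lba)  # the rewrite clears a logical bad sector
        return True


def scan(disk: ToyDisk, mode: Mode) -> dict:
    report = {"bad": [], "fixed": [], "unfixed": []}
    for lba in range(len(disk.data)):
        if disk.readable(lba):
            continue
        report["bad"].append(lba)
        if mode is Mode.IGNORE:
            continue  # just record the sector and move on
        ok = disk.remap(lba) if mode is Mode.REMAP else disk.erase(lba)
        report["fixed" if ok else "unfixed"].append(lba)
    return report


def sample_disk() -> ToyDisk:
    # 10 sectors, one logical bad sector (2), two physical ones (5, 7), one spare
    return ToyDisk(data=list(range(1, 11)), soft_bad={2}, hard_bad={5, 7}, spares=1)


if __name__ == "__main__":
    for mode in Mode:
        print(mode.value, scan(sample_disk(), mode))
```

With one spare sector, Remap uses it up on logical bad sector 2 and leaves 5 and 7 unfixed. Erase repairs sector 2 in place and remaps sector 5, so only 7 stays bad. In both repair modes, the old contents of the affected sectors are replaced by zeros, which is why these modes can cost you data.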

To simply check the state of the hard drive and find out whether it has bad sectors, select Ignore and start the scan. If you want to restore bad sectors, select Erase and click Start (this method may cause data loss on the hard drive!). You can also try Remap to reassign bad sectors.

This procedure can take from several hours to several days!
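The duration of a full pass is roughly the drive's capacity divided by the speed at which it can actually be read. The following back-of-the-envelope sketch uses assumed speeds, since a damaged drive that keeps retrying reads can be far slower than a healthy one. `scan_hours` is a hypothetical helper.

```python
def scan_hours(capacity_gb: float, speed_mb_s: float, passes: int = 1) -> float:
    """Hours needed to read (or write) the whole drive `passes` times at a steady speed."""
    seconds = capacity_gb * 1000 / speed_mb_s * passes  # 1 GB = 1000 MB, as drive makers count
    return seconds / 3600


if __name__ == "__main__":
    # Hypothetical speeds: a healthy drive vs. a damaged one that keeps retrying reads
    for capacity, speed in [(1000, 100), (1000, 5)]:
        print(f"{capacity} GB at {speed} MB/s: {scan_hours(capacity, speed):.1f} h")
```

A 1000 GB drive read at 100 MB/s needs about 2.8 hours, but at 5 MB/s it needs about 55.6 hours, well over two days.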

This resuscitator assembly contains many more programs for working with hard drives, for example HDD Regenerator. With HDD Regenerator you can do the same things as in Victoria, that is, fix bad sectors. When you launch the program, you will be asked to choose the type of scan:

1. Scan and restore the drive;

2. Scan without recovery;

3. Overwrite bad sectors until you win 🙂

When the scan finishes, you will see statistics, and you may gain some extra lifetime for your hard drive.

If Victoria and HDD Regenerator did not help, the same assembly includes a low-level formatting program, HDD Low Level Format Tool. The program formats your hard drive, wiping the partition table, the MBR and every byte of data, and blocks access to bad sectors, which may give your hard drive some more time to live.
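HDD Low Level Format Tool is a ready-made program, and the sketch below does not reproduce it. It only illustrates, on a disk image file, what wiping the MBR and partition table means at the byte level. The MBR is the first 512-byte sector, the four 16-byte partition entries start at offset 446, and bytes 510-511 hold the 0x55 0xAA signature. The helper names are hypothetical, and the sketch covers only the classic MBR layout. The rest of the drive would be overwritten the same way, sector by sector.

```python
import os
import tempfile

SECTOR = 512
PART_TABLE = 446          # the four 16-byte partition entries start here
SIGNATURE = b"\x55\xaa"   # boot signature stored in bytes 510-511


def read_mbr(path: str) -> bytes:
    with open(path, "rb") as f:
        return f.read(SECTOR)


def has_mbr(sector0: bytes) -> bool:
    return len(sector0) == SECTOR and sector0[510:512] == SIGNATURE


def partition_types(sector0: bytes) -> list:
    """Type byte (offset 4) of each used partition entry; 0 means the slot is empty."""
    if len(sector0) < SECTOR:
        return []
    types = []
    for i in range(4):
        entry = sector0[PART_TABLE + 16 * i: PART_TABLE + 16 * (i + 1)]
        if entry[4] != 0:
            types.append(entry[4])
    return types


def wipe_mbr(path: str) -> None:
    """Zero the first sector, removing boot code, partition table and signature."""
    with open(path, "r+b") as f:
        f.write(bytes(SECTOR))
        f.flush()
        os.fsync(f.fileno())


if __name__ == "__main__":
    # Fake 1 MiB image with one partition entry of type 0x07 (commonly NTFS)
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        sector = bytearray(SECTOR)
        sector[PART_TABLE + 4] = 0x07
        sector[510:512] = SIGNATURE
        tmp.write(bytes(sector) + bytes(1024 * 1024 - SECTOR))
        image = tmp.name
    print(has_mbr(read_mbr(image)), partition_types(read_mbr(image)))  # True [7]
    wipe_mbr(image)
    print(has_mbr(read_mbr(image)), partition_types(read_mbr(image)))  # False []
    os.remove(image)
```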

These methods should be enough to find out whether you have bad sectors. With them you may also be able to restore the hard drive, or realize that it is time to buy a new one. If you have additions or other methods, write them in the comments! Good luck 🙂