NAS

The volumes of information and data that modern companies work with far exceed those of a decade or even five years ago. The technical solutions needed to process corporate data efficiently at this scale differ significantly from setups designed for household use. A business already needs several servers that perform different tasks simultaneously: terminal, mail, DNS, proxy servers and others, often not combined into a cluster. With this kind of distribution, processing data online and backing it up from various devices becomes a problem. Data storage systems solve this problem, and our company can help you choose and buy one.

Benefits of Using External Network Storage

A data storage system is a comprehensive solution that lets you store any amount of information centrally while ensuring reliable protection, fast processing and complete archiving. Network storage has several further advantages over the classic approach of distributing information between multiple servers. Fault tolerance is achieved through partial or full redundancy of the storage components. External network storage offers higher performance and data transfer speeds, and it adapts easily to the company's business needs because it scales readily as the volume of data flowing through the company changes. A data warehouse, unlike a standard database, can be used not only to process transactions but also to analyze sales dynamics over several years, generate reports in various formats, and integrate data from various record-keeping systems.

There are four types of data storage:

- NAS. Reliable, inexpensive and easily customizable systems.

- DAS. Schemes with an external bus, which makes it possible to connect an unlimited number of disks.

- SAN. Well suited for storing mail databases; these systems provide quick access to information.

- Fault-tolerant storage systems. These are combined into a cluster and provide the greatest reliability and data transfer rates.

External storage systems are used to save internal disk space, prevent data loss, and keep content secure and available at any time.

Want to buy network storage at a good price? You are in the right place!

If you decide to buy network storage for your organization, Trinity will provide your business with reliable and powerful data storage systems. Our range includes storage systems in various configurations. We are official representatives of the world's leading IT equipment manufacturers and can quickly assemble a storage system of any configuration. We offer storage systems from manufacturers such as Dell, HP, Lenovo, EMC, etc.

Our experts will help each company choose or assemble an individual data storage system that best fits its requirements, tasks, size, budget and existing network infrastructure. The price of the system depends on its configuration; you can ask our specialists about the cost of designing a storage system for your tasks.

Our company handles all the work: analyzing the current state of your technical base, selecting the necessary equipment and installing it. You just need to submit a request to our specialists.

In addition, we provide technical support for the equipment we supply. Our highly qualified engineers, installers and IT specialists will assist you at any time, from professional advice to upgrading and expanding your equipment.

Never before has the problem of file storage been as acute as it is today.

The appearance of hard drives with a capacity of 3 or even 4 TB, Blu-ray discs holding 25 to 50 GB, and cloud storage does not solve the problem. We are surrounded by more and more devices that generate heavy content: photo and video cameras, smartphones, HD TV and video, game consoles, etc. We generate and consume (mainly from the Internet) hundreds and thousands of gigabytes.

As a result, the average user’s computer stores a huge number of files totaling hundreds of gigabytes: a photo archive, a collection of favorite movies, games, programs, working documents, etc.

All this must not only be stored, but also protected from failures and other threats.

Pseudo-Solutions

You can equip your computer with a high-capacity hard drive. But then the question arises: how and where do you back up the data from, say, a 3-terabyte drive?!

You can install two disks and use them in RAID "mirror" mode, or simply back up regularly from one to the other. This is not the best option either. Suppose the computer is attacked by viruses: most likely, they will infect the data on both disks.

You can store important data on optical discs by organizing a home Blu-ray archive. But using it will be extremely inconvenient.

Network Attached Storage: the solution! Partly...

Network attached storage (NAS) is network file storage. But it can be explained even more simply:

Suppose you have two or three computers at home. Most likely, they are connected to the local network (wired or wireless) and to the Internet. Network storage is a specialized computer that integrates into your home network and connects to the Internet.

As a result, the NAS can store any of your data, and you can access it from any home PC or laptop. Looking ahead, it should be said that the local network must be modern enough for you to move tens and hundreds of gigabytes quickly and easily between the server and your computers. But more on that later.

Where to get a NAS?

The first way: buy one. A more or less decent NAS for 2 or 4 hard drives can be bought for 500-800 dollars. Such a server comes in a small case and is ready to work, as they say, “out of the box”.

However, the cost of hard drives comes ON TOP of these 500-800 dollars, since a NAS is usually sold without them.

Pros: you get a finished device and spend a minimum of time.

Cons: a NAS is like a desktop computer, but with incomparably fewer capabilities. It is really just a network-attached external drive, and what you get for quite a lot of money is a limited, poor-value set of features.

My solution: self-assembly!

This is much cheaper than buying a separate NAS, albeit a little slower (because you assemble the machine yourself). However, you get a full home server which, if desired, can be used across the whole spectrum of its capabilities.

ATTENTION! I strongly discourage building a home server from an old computer or old, used parts. Do not forget that the file server is the repository of your data. Do not skimp on making it as reliable as possible, so that one day all your files do not “burn out” along with the hard drives, for example because of a failure in the motherboard's power supply circuit...

So, we have decided to build a home file server: a computer whose hard disks are available for use over your home LAN. Accordingly, this computer needs to be energy-efficient, quiet and compact, produce little heat and have sufficient performance.

On this basis, the ideal solution is a compact motherboard with a built-in processor and passive cooling.

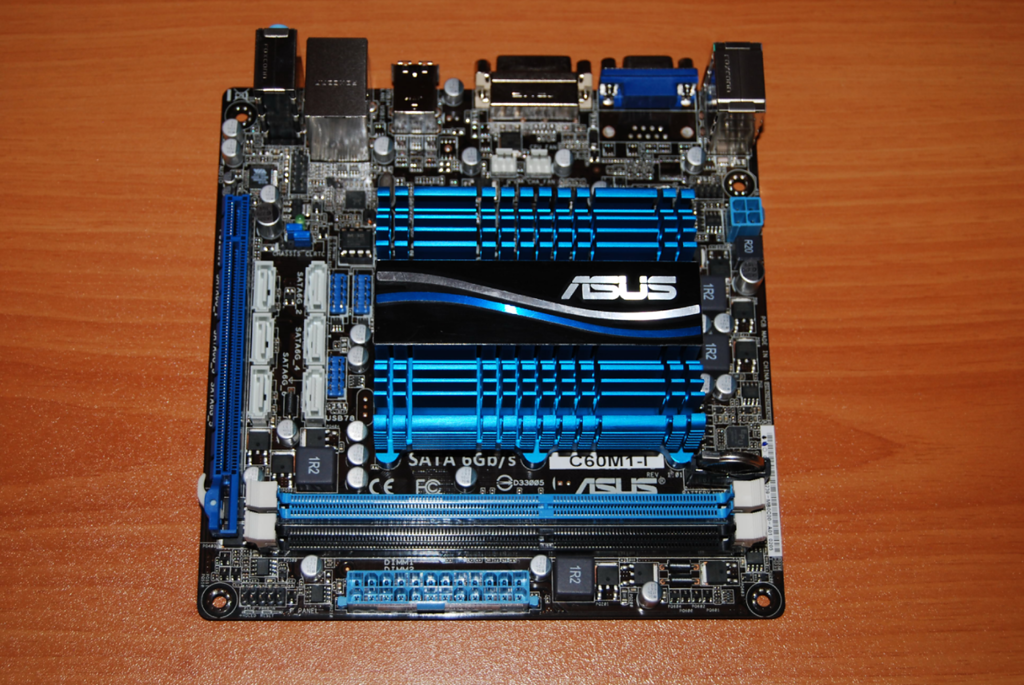

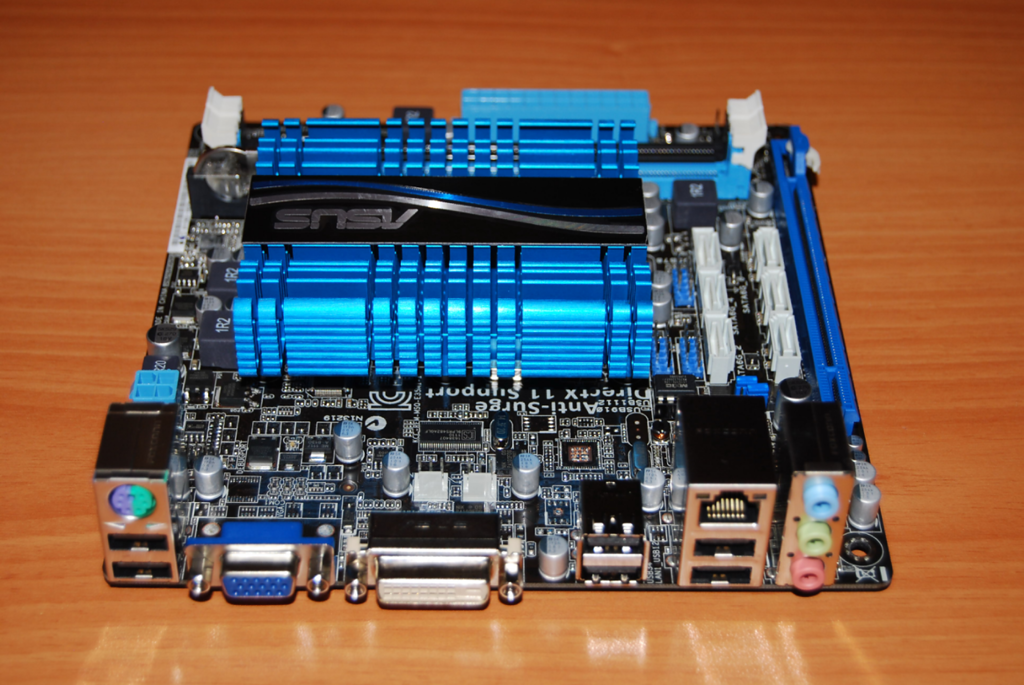

I chose the ASUS C60M1-I motherboard. It was bought in the online store dostavka.ru:

The package includes a quality user guide, a driver disc, a sticker on the case, 2 SATA cables and a rear panel for the case:

ASUS, as always, has equipped the board very generously. Full board specifications can be found here: http://www.asus.com/Motherboard/C60M1I/#specifications. I will only talk about some important points.

At a cost of just 3300 rubles, it provides 80% of everything we need for the server.

The board carries a dual-core AMD C-60 processor with an integrated graphics chip. The processor runs at 1 GHz (and can automatically boost to 1.3 GHz). Today it is installed in some netbooks and even laptops. It is in the same class as the Intel Atom D2700. But everyone knows that Atom has problems with parallel computing, which often drags its performance down to nothing. The C-60 does not have this drawback, and in addition it has quite powerful graphics for its class.

There are two DDR3-1066 memory slots, supporting up to 8 GB of memory.

The board has 6 SATA 6 Gbps ports. This lets you connect as many as 6 disks to the system (!), not just 4 as in a typical home NAS.

Most importantly, the board is based on UEFI rather than the usual BIOS. This means the system can work normally with hard drives over 2.2 TB and will “see” their entire capacity. BIOS motherboards cannot work with hard drives larger than 2.2 TB without special “crutch utilities”. Of course, using such utilities is unacceptable when we are talking about servers and reliable data storage.
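The 2.2 TB figure can be reproduced with a little arithmetic. The limit usually attributed to BIOS boards comes from the MBR partition scheme they boot from, which stores sector addresses in 32-bit fields. The sketch below assumes the classic 512-byte logical sector; the function and constant names are illustrative only.

```python
# Sketch: where the ~2.2 TB limit of MBR-partitioned disks comes from.
# Assumption: 512-byte logical sectors (drives with 4096-byte logical
# sectors would shift the limit, but were uncommon at the time).

SECTOR_SIZE_BYTES = 512   # assumed logical sector size
MBR_LBA_BITS = 32         # MBR keeps sector addresses in 32-bit fields


def mbr_max_bytes(sector_size=SECTOR_SIZE_BYTES, lba_bits=MBR_LBA_BITS):
    """Largest capacity addressable with the given address width."""
    return (2 ** lba_bits) * sector_size


limit = mbr_max_bytes()
print(f"MBR limit: {limit} bytes, about {limit / 10**12:.2f} TB")

# A 3 TB data disk exceeds that limit, so it needs GPT partitioning.
print("3 TB disk fits in MBR:", 3 * 10**12 <= limit)
```

With these assumptions, a 3 TB data disk like the one used in this build is beyond what a single MBR partition table can address.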

The C-60 is a rather cool-running processor, so an aluminum heatsink alone is enough to cool it. Even under full load, the processor temperature does not rise above 50-55 degrees, which is normal.

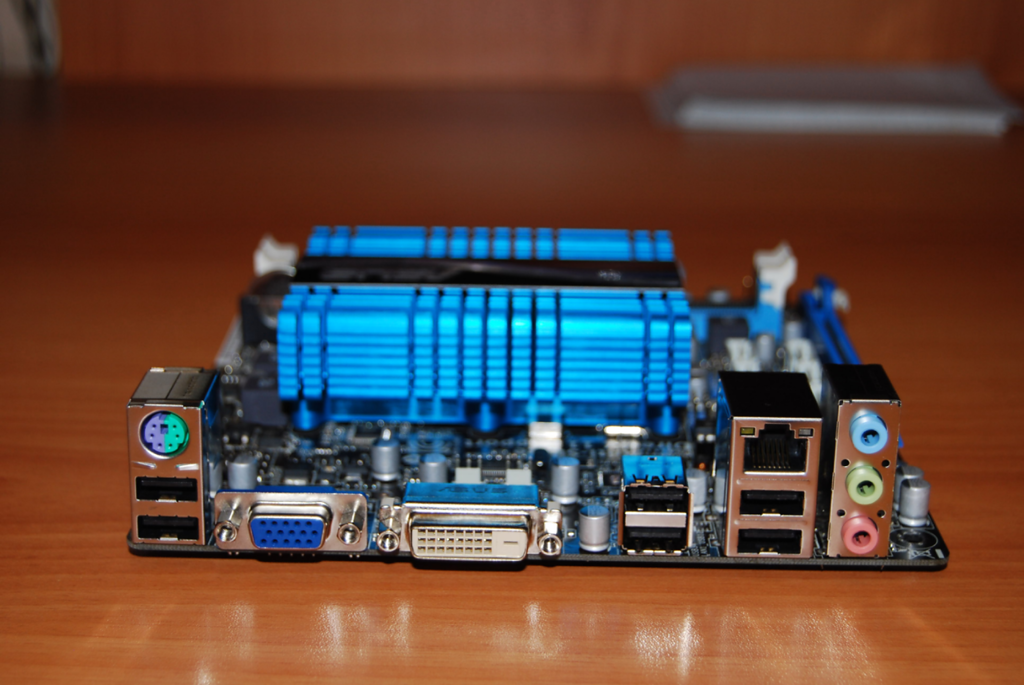

The set of ports is quite standard; only the lack of the new USB 3.0 is disappointing. I especially want to note the presence of a full gigabit network port:

On this board, I installed two 2 GB DDR3-1333 modules from Patriot:

Windows 7 Ultimate was installed on a 500 GB WD Green HDD, and for the data I purchased a 3 TB Hitachi-Toshiba HDD:

All this equipment is powered by a 400 W FSP PSU, which of course leaves plenty of headroom.

The final step was to assemble all this equipment into a mini-ATX chassis.

Immediately after assembly, I installed Windows 7 Ultimate on the computer (the installation took about 2 hours, which is normal given the low processor speed).

After all this, I disconnected the keyboard, mouse and monitor from the computer. In effect, only the system unit remained, connected to the LAN by cable.

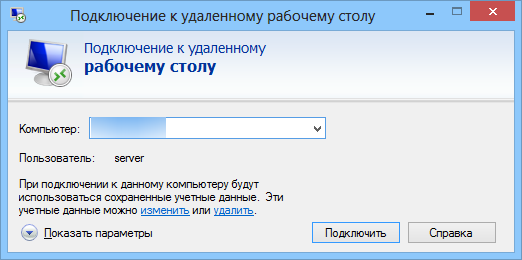

It is enough to remember the local IP of this PC on the network in order to connect to it from any machine through the standard Windows utility “Remote Desktop Connection”:

I intentionally did not install a specialized file storage operating system such as FreeNAS. After all, in that case it would not make much sense to assemble a separate PC for this purpose. One could just buy a NAS.

A separate home server that can be loaded with work overnight and left to run is more interesting. In addition, the familiar Windows 7 interface is convenient to manage.

The total cost of the home server WITHOUT hard drives was 6,000 rubles.

Important addition

With any network storage, network bandwidth is very important. Even an ordinary 100-megabit wired network is no delight when you, say, back up your computer to your home server. Transferring 100 GB over a 100 Mbps network already takes several hours.

What can be said about Wi-Fi? It is fine if you use 802.11n, where the network speed is around 100 megabits. But what if the standard is 802.11g, where the speed is rarely greater than 30 megabits? That is very, very little.

Ideally, the server communicates over a wired Gigabit Ethernet network. In that case, it is really fast.
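To put rough numbers on these link speeds, here is a small estimate of how long a 100 GB backup would take. It is a best-case sketch: it assumes decimal gigabytes and that the link is the only bottleneck, and the `efficiency` parameter is a hypothetical knob for protocol overhead, not a measured value.

```python
# Sketch: best-case transfer time for a backup over different links.
# Assumptions: 1 GB = 10**9 bytes, the link is fully used, disks keep up.

def transfer_hours(size_gb, link_mbps, efficiency=1.0):
    """Hours needed to move size_gb gigabytes over a link_mbps link."""
    bits = size_gb * 10**9 * 8
    seconds = bits / (link_mbps * 10**6 * efficiency)
    return seconds / 3600


links = [
    ("Wi-Fi 802.11g (about 30 Mbps)", 30),
    ("Fast Ethernet / 802.11n (about 100 Mbps)", 100),
    ("Gigabit Ethernet (1000 Mbps)", 1000),
]

for name, mbps in links:
    print(f"{name}: {transfer_hours(100, mbps):.2f} h for 100 GB")
```

Real transfers are slower than this because of protocol overhead and disk speed, so these figures are a lower bound on the waiting time.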

But I’ll talk about how to create such a network quickly and at minimal cost in a separate article.

How to classify the architecture of storage systems? It seems to me that the relevance of this issue will only grow in the future. How to understand all this variety of offers available on the market? I want to warn you right away that this post is not intended for the lazy or those who do not want to read a lot.

It is possible to classify storage systems quite successfully, similar to how biologists build family ties between species of living organisms. If you want, you can call it the "tree of life" of the world of information storage technologies.

The construction of such trees helps to better understand the world. In particular, you can build a diagram of the origin and development of any types of storage systems, and quickly understand what lies at the basis of any new technology that appears on the market. This allows you to immediately determine the strengths and weaknesses of a solution.

Storage systems that can work with information of any kind can be divided architecturally into 4 main groups. The main thing is not to get hung up on a few confusing things. Many people tend to classify platforms by “physical” criteria such as the interconnect (“They all have an internal bus between nodes!”), the protocol (“This is a block, or NAS, or multi-protocol system!”), or a split into hardware and software ("This is just software on the server!").

This is a completely wrong approach to classification. The only true criterion is the software architecture used in a particular solution, since all the basic characteristics of the system depend on it. The remaining components of the storage system depend on which software architecture the developers chose. From this point of view, “hardware” and “software” systems can simply be variations of one architecture or another.

But do not get me wrong, I do not want to say that the difference between them is small. It’s just not fundamental.

And I want to clarify something else before I get down to business. It is in our nature to ask the question “Which of these is the best / right one?”. There is only one answer to this: "There are better solutions for specific situations or types of workload, but there is no universal ideal solution." It is precisely the characteristics of the workload that dictate the choice of architecture, and nothing else.

By the way, I recently took part in an amusing conversation about data centers that run exclusively on flash drives. I admire passion in all its forms, and it is clear that flash is beyond competition in situations where performance and latency play a decisive role (among many other factors). But I must say that my interlocutors were wrong. We have one client whose services many people use every day without even realizing it. Can he switch to flash completely? No.

This is a large customer. And here is another, a HUGE one, 10 times bigger. Again, I can’t agree that this client could switch exclusively to flash:

Usually, as a counterargument, they say that in the end the flash will reach such a level of development that it will eclipse magnetic drives. With the caveat that flash will be used paired with deduplication. But not in all cases it is advisable to apply deduplication, as well as compression.

Well, on the one hand, flash memory will become cheaper, up to a certain limit. I expected this to happen somewhat faster, but SSDs with a capacity of 1 TB are already available for $550, which is great progress. Of course, the developers of traditional hard drives are not sitting idle either. Around 2017-2018, competition should intensify as new technologies are introduced (most likely phase-change memory and carbon nanotubes). But the point is not the confrontation between flash and hard drives, or even between software and hardware solutions; the main thing is architecture.

It is so important that it is almost impossible to change the architecture of storage without modifying almost everything. That is, in fact, without creating a new system. Therefore, storages are usually created, developed and die within the framework of a single initially selected architecture.

Four types of storage

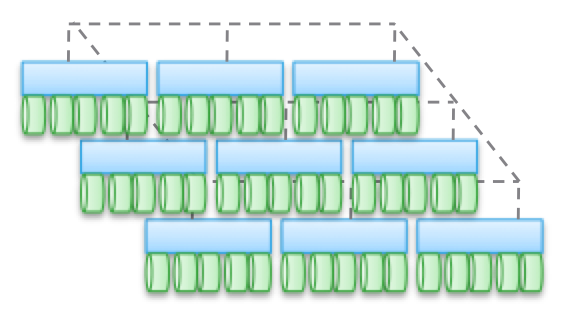

Type 1. Clustered architectures. Nodes are not designed to share memory; in effect, all the data sits behind one node. One feature of this architecture is that devices sometimes “trespass” (change their owning node), even if they are “accessible from several nodes”. Another feature is that you can pick a machine, assign it some drives and say "this machine has access to the data on these media." In the figure, blue indicates the CPU / memory / I/O system, and green indicates the media on which the data is stored (flash or magnetic drives).

This type of architecture is characterized by direct and very fast access to data. There is a slight delay between machines because I/O mirroring and caching are used to ensure high availability. But in general, the code path gives relatively direct access. The software stack is fairly simple and has low latency, so these systems often have rich functionality and data services are easy to add. Not surprisingly, most startups begin with this kind of storage.

Please note that one of the varieties of this type of architecture is PCIe equipment for non-HA servers (Fusion-IO, XtremeCache expansion cards). If we add distributed storage software, coherence, and an HA model to it, then such software storage will correspond to one of the four types of architectures described in this post.

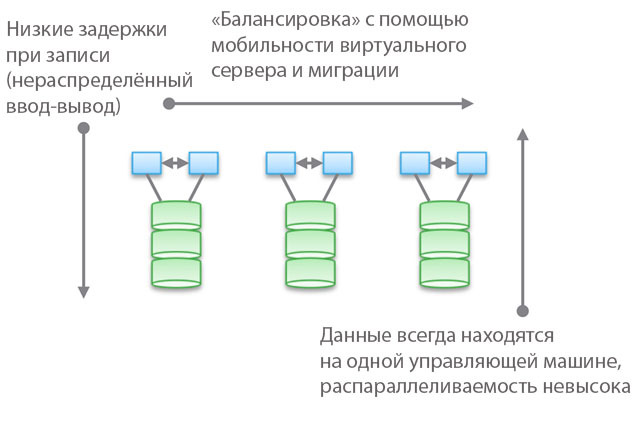

“Federated models” help improve the horizontal scalability of this type of architecture from a management point of view. These models can use different approaches to data mobility in order to rebalance between host machines and storage. For example, in VNX this means “VDM mobility”. But I think calling this a “horizontally scalable architecture” is a stretch, and in my experience most customers share this view. The reason is that the data lives behind one control machine, sometimes “behind an enclosure”. The data can be moved, but at any moment it is in one place. On the one hand, this reduces the number of round trips and the write latency. On the other hand, all your data is served by a single control machine (indirect access from another machine is possible). Unlike the second and third types of architecture, which we will discuss below, balancing and tuning play an important role here.

Objectively speaking, this federation abstraction layer adds a little latency because it uses software redirection. This is similar to the added code complexity and latency in architecture types 2 and 3, and it partly cancels out the advantages of the first type. A concrete example is UCS Invicta with its "Silicon Storage Routers". In the case of NetApp FAS 8.x running in cluster mode, the code is complicated considerably by the introduction of a federated model.

Products using clustered architectures include VNX, NetApp FAS, Pure, Tintri, Nimble, Nexenta and (I think) UCS Invicta / UCS. Some are “hardware” solutions, others are “purely software”, and still others are “software packaged as hardware appliances”. All of them are VERY different in how they handle data (Pure and UCS Invicta / Whiptail use only flash drives). But architecturally, all of the listed products are related. For example, if you tune the data services exclusively for backup, the software stack becomes Data Domain and your NAS works as the best backup tool in the world, and that is also a “first type” architecture.

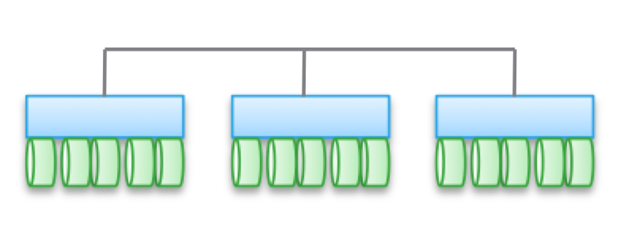

Type 2. Loosely coupled, horizontally scaled architectures. Nodes do not share memory, but the data itself is spread across several nodes. This architecture uses more internode communication to write data, which increases the number of round trips. Although write operations are distributed, they are always coherent.

Note that in these architectures the nodes themselves are not highly available; availability comes from copying and distributing the data. For the same reason, there are always more I/O operations than in simple clustered architectures. So performance is slightly lower, even though write latency is kept small (NVRAM, SSD, etc.).

In some variants of this architecture, nodes are assembled into subgroups while other nodes are used to manage the subgroups (metadata nodes). The effects described above for “federated models” show up here as well.

Such architectures are quite easy to scale. Since data is stored in several places and can be processed by multiple nodes, these architectures can be great for tasks that require distributed reads. In addition, they combine well with server / storage software. But these architectures are best used under transactional loads: thanks to their distributed nature you can use non-HA servers, and loose coupling lets you get by with Ethernet.

This type of architecture is used in products such as EMC ScaleIO and Isilon, VSAN, Nutanix, and Simplivity. As in the case of Type 1, all these solutions are completely different from each other.

Loose coupling means that these architectures can often grow to a very large number of nodes. But, let me remind you, they DO NOT share memory; the code on each node runs independently of the others. But the devil, as they say, is in the details:

- The more widely write operations are distributed, the higher the latency and the lower the IOPS efficiency. For example, in Isilon the degree of distribution is very high for files, and although latency drops with each update, it will still never deliver the highest performance. But Isilon is extremely strong in terms of parallelization.

- If you reduce the degree of distribution (even with a large number of nodes), latency may decrease, but you also reduce your ability to parallelize reads. For example, VSAN uses the “virtual machine as an object” model, which allows multiple copies to be kept. It would seem that a virtual machine should be accessible from a specific host. But in fact, in VSAN it “shifts” towards the node that stores its data. If you use this solution, you can see for yourself how increasing the number of copies of an object affects latency and I/O operations across the whole system. Hint: more copies = higher load on the system as a whole, and the dependence is non-linear, as you might expect. But for VSAN this is not a problem, thanks to the advantages of the virtual-machine-as-an-object model.

- It is possible to achieve low latency together with high scaling and read parallelization, but only if the data is finely split into fragments and written in multiple copies. This approach is used in ScaleIO. Each volume is divided into a large number of fragments (1 MB by default), which are distributed across all participating nodes. The result is extremely high read and redistribution speed along with powerful parallelization. Write latency can be less than 1 ms with the appropriate network infrastructure and SSD / PCIe flash in the cluster nodes. However, each write operation is performed on two nodes. Of course, unlike VSAN, a virtual machine is not treated as an object here. If it were, scalability would be worse.
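The fragment distribution described in the last item can be illustrated with a toy placement function. This is not ScaleIO's actual placement algorithm, which the text does not describe; it is a round-robin sketch that only assumes the two facts stated above: volumes are cut into 1 MB fragments, and every write lands on two nodes. The names `place_chunks` and `CHUNK_SIZE` are hypothetical.

```python
# Sketch: spread 1 MB fragments of a volume over nodes, two copies each.
# Assumption: simple round-robin placement, chosen only for illustration.
from collections import Counter

CHUNK_SIZE = 1024 * 1024  # 1 MB fragments, as mentioned in the text


def place_chunks(volume_bytes, nodes, copies=2):
    """Map each fragment index to the distinct nodes holding its copies."""
    if copies > len(nodes):
        raise ValueError("need at least as many nodes as copies")
    n_chunks = -(-volume_bytes // CHUNK_SIZE)  # ceiling division
    placement = {}
    for chunk in range(n_chunks):
        # Consecutive offsets modulo the node count never repeat a node
        # as long as copies <= len(nodes).
        placement[chunk] = [nodes[(chunk + i) % len(nodes)] for i in range(copies)]
    return placement


nodes = ["node-a", "node-b", "node-c", "node-d"]
placement = place_chunks(8 * CHUNK_SIZE, nodes)
load = Counter(node for holders in placement.values() for node in holders)
print(placement[0], placement[1])
print(dict(load))
```

Because every node ends up holding a similar share of fragments, reads of a single volume can be served by all nodes in parallel, while each write costs two node operations.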

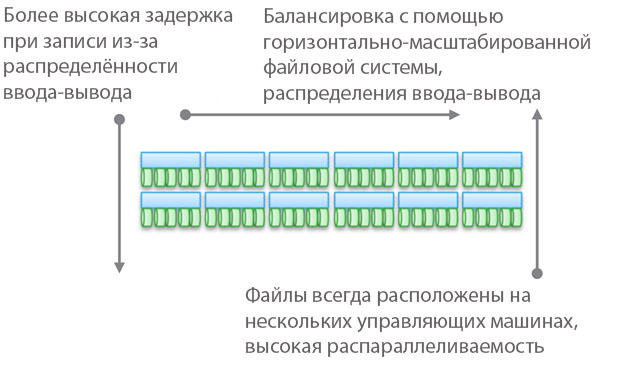

Type 3. Tightly coupled, horizontally scaled architectures. These use shared memory (for caching and some types of metadata). Data is distributed across various nodes. This type of architecture relies on a very large amount of internode communication for all types of operations.

Shared memory is the cornerstone of these architectures. Historically, it has allowed symmetrical I/O operations through all control machines (see illustration). This makes it possible to rebalance the load in case of any malfunction. This idea underlies products such as Symmetrix, IBM DS, HDS USP and VSP. They provide shared access to the cache, so an I/O operation can be handled from any machine.

The top diagram in the illustration reflects the EMC XtremIO architecture. At first glance, it is similar to Type 2, but it is not. In this case, the model of shared distributed metadata implies the use of IB and remote direct memory access so that all nodes have access to the metadata. In addition, each node is an HA pair. As you can see, Isilon and XtremIO are very architecturally different, although this is not so obvious. Yes, both have horizontally scaled architectures, and both use IB for interconnect. But in Isilon, unlike XtremIO, this is done to minimize latency when exchanging data between nodes. It is also possible to use Ethernet in Isilon for communication between nodes (in fact, this is how a virtual machine works on it), but this increases delays in input-output operations. As for XtremIO, remote direct memory access is of great importance for its performance.

By the way, do not be fooled by the presence of two diagrams in the illustration - in fact, they are the same architecturally. In both cases, pairs of HA controllers, shared memory, and very low latency interconnects are used. By the way, VMAX uses a proprietary inter-component bus, but in the future it will be possible to use IB.

Tightly coupled architectures are characterized by highly complex program code. This is one of the reasons they are uncommon. The software complexity also limits the number of data services that get added, because adding them is a harder engineering task.

The advantages of this type of architecture include fault tolerance (symmetrical I / O in all control machines), as well as, in the case of XtremIO, great opportunities in the field of AFA. Once we are talking about XtremIO again, it is worth mentioning that its architecture implies the distribution of all data processing services. It is also the only AFA solution on the market with a horizontally scaled architecture, although dynamic adding / disabling nodes has not yet been implemented. Among other things, XtremIO uses "natural" deduplication, that is, it is constantly active and "free" in terms of performance. True, all this increases the complexity of system maintenance.

It is important to understand the fundamental difference between Type 2 and Type 3. The more tightly coupled the architecture, the better and more predictably it can deliver low latency. On the other hand, such an architecture makes it harder to add nodes and scale the system. After all, when you share memory, you have a single, tightly coupled distributed system. The complexity of the solution grows, and with it the likelihood of errors. That is why VMAX can have up to 16 control machines in 8 engines, and XtremIO up to 8 machines in 4 X-Bricks (this will soon increase to 16 machines in 8 X-Bricks). Scaling these architectures by four times, or even doubling them, is an incredibly difficult engineering challenge. For comparison, VSAN can be scaled to the “vSphere cluster size” (now 32 nodes), Isilon can contain more than 100 nodes, and ScaleIO lets you build a system of more than 1000 nodes. And all of these are Type 2 architectures.

Again, I want to emphasize that architecture is implementation independent. The above products use both Ethernet and IB. Some are purely software solutions, others are hardware-software complexes, but at the same time they are united by architectural schemes.

Despite the variety of interconnects, the use of distributed recording plays an important role in all the examples given. This allows you to achieve transactionality and atomicity, but careful monitoring of data integrity is necessary. It is also necessary to solve the problem of the growth of the “failure area”. These two points limit the maximum possible degree of scaling of the described types of architectures.

A small check on how carefully you have read all of the above: which type does Cisco UCS Invicta belong to, 1 or 3? Physically, it looks like Type 3, but it is a set of C-series UCS servers connected via Ethernet, running the Invicta software stack (formerly Whiptail). Hint: look at the architecture, not the specific implementation 🙂

In the case of UCS Invicta, the data is stored in each node (a UCS server with MLC-based flash drives). A single non-HA node, which is a separate server, can present a logical unit (LUN) directly. If you add more nodes, you might expect the system to scale out loosely, like ScaleIO or VSAN. All this points to Type 2.

However, the number of nodes is apparently increased through configuration and migration to the “Invicta Scaling Appliance”. In this configuration, you have several “Silicon Storage Routers” (SSRs) and addressable storage spread over several hardware nodes. Data is accessed through a single SSR node, although it can also be reached through another node that works with it as an HA pair. The data itself always resides on a single C-series UCS node. So what kind of architecture is this? No matter what the solution looks like physically, it is Type 1. The SSRs form a cluster (possibly of more than 2). In the Scaling Appliance configuration, each UCS server with MLC drives performs a function similar to the disk enclosures of a VNX or NetApp FAS. Although it is not connected via SAS, the architecture is similar.

Type 4. Distributed architectures that share nothing. Although the data is distributed across different nodes, this is done without any transactionality. Usually, data is written to one node and lives there, and from time to time copies are made on other nodes for safety. But these copies are not transactional. This is the key difference between this type of architecture and Types 2 and 3.
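The difference between transactional and non-transactional copies can be made concrete with a toy model. The sketch below is purely illustrative and does not model any real product: a write is acknowledged as soon as one node has it, and copies reach the other nodes only when a separate replication pass runs. The class and method names are hypothetical.

```python
# Sketch: Type 4 style writes. Data lands on one node; copies follow later.
# Assumption: a single primary and an explicit, occasional replication pass.
from collections import deque


class Node:
    def __init__(self, name):
        self.name = name
        self.data = {}


class LazyReplicatedStore:
    def __init__(self, primary, replicas):
        self.primary = primary
        self.replicas = replicas
        self.pending = deque()  # writes not yet copied to the replicas

    def write(self, key, value):
        # The write completes once the primary holds it; no transaction
        # spans the other nodes.
        self.primary.data[key] = value
        self.pending.append((key, value))

    def replicate(self):
        # Runs "from time to time" and drains the backlog to every replica.
        while self.pending:
            key, value = self.pending.popleft()
            for replica in self.replicas:
                replica.data[key] = value


primary, backup = Node("n1"), Node("n2")
store = LazyReplicatedStore(primary, [backup])
store.write("photo.jpg", b"...")
print("before replication:", "photo.jpg" in backup.data)
store.replicate()
print("after replication:", "photo.jpg" in backup.data)
```

In a Type 2 or Type 3 system, by contrast, the write would not be acknowledged until the copies on the other nodes were consistent, which is exactly the coordination cost that limits how far those types scale.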

Communication between non-HA nodes goes over Ethernet, since it is cheap and universal. Data is distributed across nodes not continuously but from time to time. The “correctness” of the data is not always guaranteed, but the software stack checks it quite often to ensure that the data being used is correct. For some types of load (for example, HDFS), data is distributed so that it resides alongside the process that needs it. This property allows us to consider this type of architecture the most scalable of all four.

But this is far from the only advantage. Such architectures are extremely simple and very easy to manage. They do not depend on any particular equipment and can be deployed on the cheapest hardware. They are almost always purely software solutions. This type of architecture handles petabytes of data as easily as other types handle terabytes. Object stores and non-POSIX file systems are used here, and both often sit on top of the local file system of each ordinary node.

These architectures can be combined with block and NAS transactional data presentation models, but this greatly limits their capabilities. You should not create a transactional stack by placing Type 1, 2, or 3 on top of Type 4.

These architectures show their full potential on tasks that are not subject to such restrictions.

Above, I gave an example of a very large client with 200,000 drives. Services such as Dropbox, Syncplicity, iCloud, Facebook, eBay, YouTube and almost all Web 2.0 projects are based on storage built using the fourth type of architecture. All the information processed in Hadoop clusters is also held in Type 4 storage. In general, these architectures are not very common in the corporate segment, but they are quickly gaining popularity.

Type 4 is the basis of products such as AWS S3 (by the way, no one outside AWS knows how EBS works, but I’m willing to bet that it is Type 3), Haystack (used at Facebook), Atmos, ViPR, Ceph, Swift (used in OpenStack), HDFS, Centera. Many of the listed products can take different forms; the type of a specific implementation is determined by its API. For example, the ViPR object stack can be exposed through the S3, Swift, Atmos, and even HDFS object APIs! In the future, Centera will also be on this list. For some this will be obvious, but Atmos and Centera will be in use for a long time, albeit in the form of APIs rather than specific products. Implementations may change, but the APIs remain stable, which is very good for customers.

I want to draw your attention once again to the fact that the "physical embodiment" can confuse you, and you may mistakenly classify Type 4 architectures as Type 2, since they often look the same. At the physical level, a solution may look like ScaleIO, VSAN, or Nutanix, although it is just a set of servers connected via Ethernet. The presence or absence of transactionality will help you classify a particular solution correctly.

And now I offer you a second verification test. Let's look at the UCS Invicta architecture. Physically, this product looks like Type 4 (servers connected via Ethernet), but it cannot be scaled architecturally to the appropriate loads, since it is actually Type 1. Moreover, Invicta, like Pure, was developed for AFA.

Please accept my sincere gratitude for your time and attention if you have read up to this place. I re-read the above - it’s amazing that I managed to get married and have children 🙂

Why did I write all this?

In the IT world, storage occupies a very important place, a sort of "mushroom kingdom." The variety of products on offer is very large, and this only benefits the whole business. But you need to be able to make sense of it all and not let marketing slogans and positioning nuances cloud your judgment. For the benefit of both customers and the industry itself, the process of building storage has to be simplified. That is why we are working hard to make the ViPR controller an open, free platform.

But storage by itself is hardly exciting. What do I mean? Imagine a kind of abstract pyramid with the "user" at the top. Below are the "applications" designed to serve the "user". Lower still is the "infrastructure" (including SDDC) serving the "applications" and, accordingly, the "user". And at the very bottom of the "infrastructure" is the storage.

That is, you can picture the hierarchy in this form: User -> Application / SaaS -> PaaS -> IaaS -> Infrastructure. In the end, any application and any PaaS stack must compute or process some kind of information. And these four types of architectures are designed to work with different types of information and different types of load. In the hierarchy of importance, information immediately follows the user. The purpose of an application is to let the user interact with the information he needs. That is why storage architecture is so important in our world.

SAS, NAS, SAN: a step towards storage networks

Introduction

With the daily growing complexity of networked computer systems and global corporate solutions, the world began to demand technologies that would give new impetus to corporate information storage systems. And now one unified technology brings unprecedented performance, tremendous scalability and exceptional total cost of ownership advantages to the world treasury of achievements in the field of storage. The circumstances that have emerged with the advent of the FC-AL (Fiber Channel - Arbitrated Loop) standard and the SAN (Storage Area Network), which develops on its basis, promise a revolution in data-oriented computing technologies.

"The most significant development in storage we've seen in 15 years"

Data Communications International, March 21, 1998

The formal definition of SAN in the interpretation of the Storage Network Industry Association (SNIA):

“A network whose main task is to transfer data between computer systems and data storage devices, as well as between the storage systems themselves. The SAN consists of a communications infrastructure that provides physical connectivity, and is also responsible for the management layer, which integrates communications, storage and computer systems, transmitting data safely and securely.”

SNIA Technical Dictionary, copyright Storage Network Industry Association, 2000

Options for organizing access to storage systems

There are three main options for organizing access to storage systems:

- SAS (Server Attached Storage), storage connected to the server;

- NAS (Network Attached Storage), network connected storage;

- SAN (Storage Area Network), a storage area network.

Let us consider the topologies of the corresponding storage systems and their features.

SAS

Storage system attached to the server. This is the familiar, traditional way of connecting a storage system to a high-speed interface in the server, usually a parallel SCSI interface.

Figure 1. Server Attached Storage

The use of a separate enclosure for the storage system within the SAS topology is optional.

The main advantage of server-attached storage over the other options is its low price and high speed when one storage system serves one server. This topology is optimal when a single server provides access to the data array. But it still has a number of problems that prompted designers to look for other ways of organizing access to storage systems.

The features of SAS include:

- Access to data depends on the OS and file system (in general);

- The complexity of organizing systems with high availability;

- Low cost;

- High performance in a single node;

- Slower response when the server that serves the storage is under load.

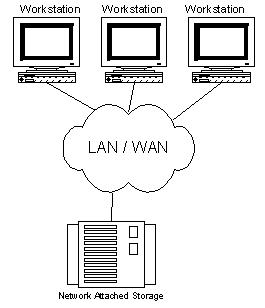

NAS

Storage system connected to the network. This way of organizing access appeared relatively recently. Its main advantage is the convenience of integrating an additional storage system into existing networks, but by itself it does not bring any radical improvements to the storage architecture. In fact, a NAS is a pure file server, and today you can find many new NAS implementations based on thin server technology (Thin Server).

Figure 2. Network Attached Storage.

Features of NAS:

- Dedicated file server;

- Access to data does not depend on the OS and platform;

- Convenience of administration;

- Maximum ease of installation;

- Low scalability;

- Conflict with LAN / WAN traffic.

Storage, built on NAS technology, is ideal for low-cost servers with a minimum set of features.

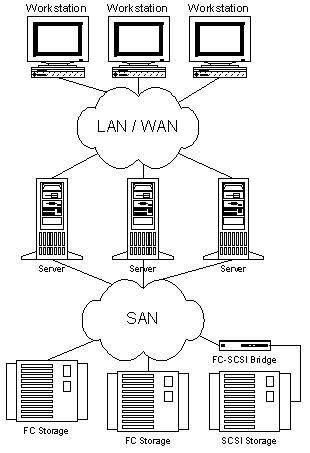

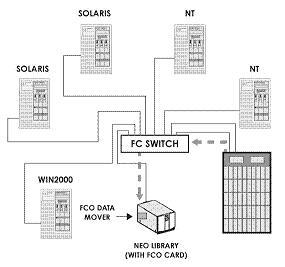

SAN

Storage networks began to intensively develop and be introduced only in 1999. The basis of the SAN is a network separate from the LAN / WAN, which serves to organize access to the data of servers and workstations engaged in their direct processing. Such a network is created on the basis of the Fiber Channel standard, which gives storage systems the advantages of LAN / WAN technologies and the ability to organize standard platforms for systems with high availability and high query intensity. Almost the only drawback of SAN today is the relatively high cost of components, but the total cost of ownership for enterprise systems built using storage area network technology is quite low.

Figure 3. Storage Area Network.

The main advantages of SAN include almost all of its features:

- Independence of SAN topology from storage systems and servers;

- Convenient centralized management;

- No conflict with LAN / WAN traffic;

- Convenient data backup without loading the local network and servers;

- High speed;

- High scalability;

- High flexibility;

- High availability and fault tolerance.

It should also be noted that this technology is still quite young, and in the near future it should undergo many improvements in the standardization of management and of the ways SAN subnets interact. But we can hope that this only gives the pioneers additional prospects for leadership.

FC as the basis for building a SAN

Like a LAN, a SAN can be built using various topologies and media. When building a SAN, you can use a parallel SCSI interface, Fiber Channel or, say, SCI (Scalable Coherent Interface), but it is Fiber Channel to which SAN owes its ever-growing popularity. Specialists with significant experience in developing both channel and network interfaces took part in the design of this interface, and they managed to combine all the important positive features of both technologies to produce something truly revolutionary. What exactly?

Key features of channels:

- Low latency

- High speeds

- High reliability

- Point-to-point topology

- Short distances between nodes

- Platform dependence

Key features of networks:

- Multipoint topologies

- Long distances

- High scalability

- Low speeds

- High latency

Key features of Fiber Channel:

- High speeds

- Protocol independence (levels 0-3)

- Long distances

- Low latency

- High reliability

- High scalability

- Multipoint topologies

Traditionally, storage interfaces (what sits between the host and the storage devices) have been an obstacle to faster and larger storage systems. At the same time, applications require a significant increase in hardware capacity, which in turn creates the need to increase the throughput of the interfaces to storage systems. It is precisely the problem of building flexible, high-speed data access that Fiber Channel helps to solve.

The Fiber Channel standard was finalized over the past few years (from 1997 to 1999), during which tremendous work was done to coordinate the interaction of manufacturers of various components, and everything was done to turn Fiber Channel from a purely conceptual technology into a real one, supported by installations in laboratories and computer centers. In 1997, the first commercial samples of the cornerstone components for building FC-based SANs, such as adapters, hubs, switches, and bridges, were designed. Thus, since 1998, FC has been used commercially in business, in production and in large-scale projects implementing failure-critical systems.

Fiber Channel is an open industry standard for a high-speed serial interface. It connects servers and storage systems at distances of up to 10 km (using standard equipment) at a speed of 100 MB/s. At CeBIT 2000, product samples were presented that use the new Fiber Channel standard with speeds of 200 MB/s per ring, and implementations of the new standard running at 400 MB/s (800 MB/s with a double ring) are already operating in the laboratory. At the time of publication, a number of manufacturers had already begun shipping 200 MB/s FC network cards and switches. Fiber Channel simultaneously supports a number of standard protocols (including TCP/IP and SCSI-3) over one physical medium, which potentially simplifies the network infrastructure and offers opportunities to reduce installation and maintenance costs. However, using separate subnets for LAN/WAN and SAN has several advantages and is recommended by default.
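The 100 MB/s figure can be related to the signalling rate of the link. The sketch below assumes the commonly cited values for 1 Gbit/s Fibre Channel (a 1.0625 Gbaud line rate and 8b/10b encoding); neither value is stated in the article, and the function name is purely illustrative.

```python
# Assumed figures for 1 Gbit/s Fibre Channel (not taken from the article).
LINE_RATE_BAUD = 1_062_500_000   # 1.0625 Gbaud on the wire
ENCODING_EFFICIENCY = 8 / 10     # 8b/10b: 8 data bits carried per 10 line bits


def payload_mb_per_s(line_rate_baud: float, rings: int = 1) -> float:
    """Return the data rate before framing overhead, in MB/s (1 MB = 10**6 bytes)."""
    data_bits_per_s = line_rate_baud * ENCODING_EFFICIENCY
    return rings * data_bits_per_s / 8 / 1_000_000


if __name__ == "__main__":
    print(f"single ring: {payload_mb_per_s(LINE_RATE_BAUD):.2f} MB/s")
    print(f"double ring: {payload_mb_per_s(LINE_RATE_BAUD, rings=2):.2f} MB/s")
```

Under these assumptions a single ring carries a little over 106 MB/s before framing overhead, which is consistent with the nominal 100 MB/s quoted above; doubling the line rate or the number of rings scales the result linearly.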

One of the most important advantages of Fiber Channel, along with its speed (which, by the way, is not always important for SAN users and can be achieved with other technologies), is the ability to work over long distances and the flexibility of the topology, which the new standard inherited from network technologies. Thus, a storage network topology is built on the same principles as traditional networks, usually with hubs and switches, which help prevent a drop in speed as the number of nodes grows and make it convenient to organize systems without a single point of failure.

For a better understanding of the advantages and features of this interface, we compare the characteristics of FC and parallel SCSI in a table.

Table 1. Comparison of Fiber Channel and Parallel SCSI Technologies

The Fiber Channel standard assumes the use of a variety of topologies, such as point-to-point, ring or FC-AL hub (Loop or Hub FC-AL), trunk switch (Fabric / Switch).

The point-to-point topology is used to connect a single storage system to the server.

Loop or Hub FC-AL is used to connect multiple storage devices to multiple hosts. Organizing a double ring increases the speed and fault tolerance of the system.

Switches are used to provide maximum performance and fault tolerance for complex, large and branched systems.

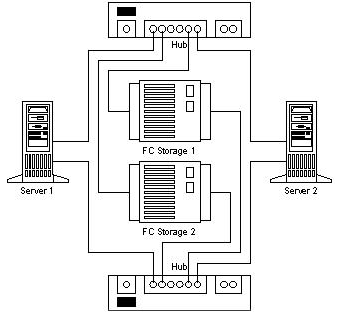

Thanks to network flexibility, SAN has an extremely important feature - a convenient ability to build fault-tolerant systems.

By offering alternative storage solutions and the ability to combine multiple redundant storage systems, SAN helps protect hardware and software systems from hardware failures. To demonstrate, we give an example of a two-node system with no single point of failure.

Figure 4. No Single Point of Failure.

Systems with three or more nodes are built by simply adding servers to the FC network and connecting them to both hubs/switches.
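The "no single point of failure" property can be checked mechanically. The following is a minimal sketch, assuming a hypothetical topology of two servers, two switches and two mirrored arrays (all component names are invented for illustration): it removes each component in turn and tests whether some surviving server can still reach some surviving array.

```python
from collections import deque


def reachable(links, start, goal, removed):
    """Breadth-first search over the links that survive losing `removed`."""
    if removed in (start, goal):
        return False
    neighbours = {}
    for a, b in links:
        if removed in (a, b):
            continue  # a link dies together with either of its endpoints
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in neighbours.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def single_points_of_failure(links, servers, storages):
    """Return components whose loss cuts every server off from every array."""
    components = sorted({node for link in links for node in link})
    return [
        c for c in components
        if not any(reachable(links, s, t, c) for s in servers for t in storages)
    ]


# Hypothetical dual-path layout: every server and array is cabled to both switches.
dual_path = [
    ("server_a", "switch_1"), ("server_a", "switch_2"),
    ("server_b", "switch_1"), ("server_b", "switch_2"),
    ("switch_1", "array_1"), ("switch_1", "array_2"),
    ("switch_2", "array_1"), ("switch_2", "array_2"),
]
# Hypothetical single-path layout for comparison.
single_path = [
    ("server_a", "switch_1"), ("server_b", "switch_1"), ("switch_1", "array_1"),
]

if __name__ == "__main__":
    servers = ["server_a", "server_b"]
    print(single_points_of_failure(dual_path, servers, ["array_1", "array_2"]))
    print(single_points_of_failure(single_path, servers, ["array_1"]))
```

The check treats the arrays as mirrors of each other, so losing one array is survivable only if a second one exists; it says nothing about software failover, which a real cluster would also need.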

With FC, building disaster-tolerant systems becomes straightforward. Network channels for both the storage and the local network can be laid over optical fiber (up to 10 km or more using signal amplifiers) as the physical medium for FC, using standard equipment, which makes it possible to significantly reduce the cost of such systems.

With the ability to access all SAN components from anywhere, we get an extremely flexible data network. It should be noted that the SAN provides transparency (the ability to see) of all components down to the disks in storage systems. This feature encouraged component manufacturers to use their significant experience in building LAN/WAN management systems to build broad monitoring and management capabilities into all SAN components. These capabilities include monitoring and managing individual nodes, storage components, enclosures, network devices, and network substructures.

The SAN management and monitoring system uses such open standards as:

- SCSI command set

- SCSI Enclosure Services (SES)

- SCSI Self Monitoring Analysis and Reporting Technology (S.M.A.R.T.)

- SAF-TE (SCSI Accessed Fault-Tolerant Enclosures)

- Simple Network Management Protocol (SNMP)

- Web-Based Enterprise Management (WBEM)

Systems built using SAN technologies not only let the administrator monitor the growth and status of storage resources, but also provide opportunities for monitoring and controlling traffic. Thanks to these capabilities, SAN management software implements the most effective schemes for storage capacity planning and for balancing the load on system components.

Storage networks integrate seamlessly with existing information infrastructures. Their implementation does not require any changes to the existing LAN and WAN networks; it only expands the capabilities of existing systems, relieving them of tasks that involve transferring large amounts of data. Moreover, when integrating and administering a SAN, it is very important that the key elements of the network support hot swapping and hot installation, with dynamic configuration capabilities. The administrator can then add or replace a component without shutting down the system. And this whole integration process can be displayed visually in a graphical SAN management system.

Having considered the above advantages, we can highlight a number of key points that directly affect one of the main advantages of the Storage Area Network: the total cost of ownership (Total Cost of Ownership).

Incredible scalability allows an enterprise that uses SAN to invest in servers and storage as needed, and also to preserve its investment in already installed equipment when technology generations change. Each new server will have high-speed access to the storage, and each additional gigabyte of storage will be available to all servers of the subnet at the administrator's command.

Excellent capabilities for building resilient systems can bring direct commercial benefits by minimizing downtime and preserving the system in the event of a natural or other disaster.

The controllability of the components and the transparency of the system make it possible to administer all storage resources centrally, and this, in turn, significantly reduces the cost of their support, which, as a rule, is more than 50% of the cost of the equipment.

SAN Impact on Applications

In order to make it clear to our readers how practically useful the technologies discussed in this article are, we give a few examples of applied tasks that would be solved inefficiently without using storage networks, would require huge financial investments, or would not be solved at all by standard methods.

Data Backup and Recovery

Using the traditional SCSI interface, the user, when building backup and recovery systems, encounters a number of complex problems that can be very easily solved using SAN and FC technologies.

Thus, the use of storage networks brings the solution to the backup and restore tasks to a new level and provides the ability to backup several times faster than before, without loading the local network and servers with data backup work.

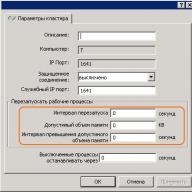

Server Clustering

One of the typical tasks for which SAN is effectively used is server clustering. Since one of the key points in organizing high-speed cluster systems that work with data is access to storage, with the advent of SAN, the construction of multi-node clusters at the hardware level is solved by simply adding a server with a connection to the SAN (this can be done without even shutting down the system, since FC switches support hot-plug). When using a parallel SCSI interface, the connectivity and scalability of which is much worse than that of FC, data-oriented clusters would be difficult to make with more than two nodes. Parallel SCSI switches are very complex and expensive devices, and for FC it is a standard component. To create a cluster that will not have a single point of failure, it is enough to integrate a mirrored SAN (DUAL Path technology) into the system.

In the context of clustering, RAIS technology (Redundant Array of Inexpensive Servers) seems especially attractive for building powerful scalable Internet commerce systems and other workloads with high performance requirements. According to Alistair A. Croll, co-founder of Networkshop Inc, the use of RAIS is quite effective: “For example, for $12,000-15,000 you can buy about six inexpensive single- or dual-processor (Pentium III) Linux/Apache servers. The power, scalability and fault tolerance of such a system will be significantly higher than, for example, a single four-processor server based on Xeon processors, but the cost is the same.”

Concurrent video streaming and data sharing

Imagine a task in which you need to edit video or simply work on huge amounts of data at several (say, more than 5) stations. Transferring a 100 GB file over a local area network will take several minutes, and working on it together will be very difficult. When using a SAN, each workstation and server on the network accesses the file at a speed equivalent to that of a local high-speed disk. If you need another station or server for data processing, you can add it to the SAN without turning off the network, simply by connecting the station to the SAN switch and granting it access rights to the storage. If you are no longer satisfied with the performance of the data subsystem, you can simply add one more storage system and, using data striping (for example, RAID 0), get twice the performance.
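The RAID 0 remark can be made concrete with a sketch of how striping spreads consecutive logical blocks across storage devices. The function and parameter names are hypothetical, and the layout shown is the textbook round-robin scheme rather than any particular vendor's implementation.

```python
def locate_block(logical_block: int, disks: int, stripe_blocks: int = 1):
    """Map a logical block number to (disk index, block number on that disk).

    Blocks are grouped into stripes of `stripe_blocks` blocks, and the
    stripes are dealt out to the disks in round-robin order.
    """
    if disks < 1 or stripe_blocks < 1 or logical_block < 0:
        raise ValueError("disks and stripe_blocks must be >= 1, block >= 0")
    stripe_index, offset_in_stripe = divmod(logical_block, stripe_blocks)
    disk = stripe_index % disks
    block_on_disk = (stripe_index // disks) * stripe_blocks + offset_in_stripe
    return disk, block_on_disk


if __name__ == "__main__":
    # With two devices, neighbouring logical blocks land on different devices,
    # so a long sequential read can be served by both at once.
    for block in range(6):
        print(block, locate_block(block, disks=2))
```

Because neighbouring stripes live on different devices, a large sequential transfer keeps both devices busy, which is where the ideal "twice the performance" for two storage systems comes from; real gains depend on the workload and on the links in between.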

SAN core components

Transmission medium

Fiber Channel uses copper and optical cables to connect components. Both types of cable can be used in the same SAN. Interface conversion is carried out using GBIC (Gigabit Interface Converter) and MIA (Media Interface Adapter) modules. Both types of cable currently provide the same data rate. Copper cable is used for short distances (up to 30 meters), optical cable for both short distances and distances of 10 km and more. Both multimode and single-mode optical cables are used. Multimode cable is used for short distances (up to 2 km). The core diameter of multimode fiber is 62.5 or 50 microns. To sustain a transfer rate of 100 MB/s (200 MB/s in duplex) over multimode fiber, the cable length should not exceed 200 meters. Single-mode cable is used for long distances. The length of such a cable is limited by the power of the laser in the signal transmitter. The core diameter of single-mode fiber is 7 or 9 microns, which allows only a single beam path.
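The distance limits in this paragraph can be turned into a simple selection rule. The sketch below uses only the figures quoted above (30 m for copper, 200 m for multimode fiber at 100 MB/s); the function name and the returned labels are assumptions made for illustration.

```python
# Distance limits quoted in the paragraph above, in meters.
COPPER_MAX_M = 30
MULTIMODE_FULL_RATE_MAX_M = 200  # limit for 100 MB/s over multimode fiber


def suggest_media(distance_m: float) -> list[str]:
    """List the cable types that can carry 100 MB/s over the given distance.

    Single-mode fiber is always listed; its real reach depends on the
    transmitter's laser power, which this sketch does not model.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    options = []
    if distance_m <= COPPER_MAX_M:
        options.append("copper")
    if distance_m <= MULTIMODE_FULL_RATE_MAX_M:
        options.append("multimode fiber")
    options.append("single-mode fiber")
    return options


if __name__ == "__main__":
    for meters in (10, 150, 5000):
        print(meters, suggest_media(meters))
```

In practice the cheapest option in the returned list would usually be chosen, so copper for links inside a rack and fiber for anything between rooms or buildings.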

Connectors, Adapters

To connect copper cables, DB-9 or HSSD connectors are used. HSSD is considered more reliable, but DB-9 is used just as often because it is simpler and cheaper. The standard (most common) connector for optical cables is the SC connector, which provides a high-quality, precise connection. Multimode SC connectors are used for ordinary connections, and single-mode SC connectors for remote connections. Multiport adapters use micro-connectors.

The most common FC adapters are made for the 64-bit PCI bus. Many FC adapters are also produced for S-BUS, and adapters for MCA, EISA, GIO, HIO, PMC, and Compact PCI are available for specialized use. Single-port cards are the most popular, but there are also two- and four-port cards. PCI adapters, as a rule, use DB-9, HSSD, or SC connectors. GBIC-based adapters are also common and come with or without GBIC modules. Fiber Channel adapters differ in the classes they support and in a variety of features. To illustrate the differences, we give a comparison table of adapters manufactured by QLogic.

Fiber Channel Host Bus Adapter Family Chart

| SANblade | 64 bit | FCAL Publ. Pvt loop | FL port | Class 3 | F port | Class 2 | Point to point | IP / SCSI | Full duplex | FC tape | PCI 1.0 Hot Plug Spec | Solaris Dynamic Reconfig | VIB | 2 Gb |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2100 Series | 33 & 66MHz PCI | X | X | X | | | | | | | | | | |
| 2200 Series | 33 & 66MHz PCI | X | X | X | X | X | X | X | X | X | | | | |
| | 33MHz PCI | X | X | X | X | X | X | X | X | X | X | | | |
| | 25 MHz Sbus | X | X | X | X | X | X | X | X | X | X | | | |
| 2300 Series | 66 MHz PCI / 133 MHz PCI-X | X | X | X | X | X | X | X | X | X | X | X | | |

Hubs

Fiber Channel hubs are used to connect nodes to an FC ring (FC loop) and have a structure similar to Token Ring hubs. Since a break in the ring can interrupt the network, modern FC hubs use port bypass circuits (PBC), which automatically open and close the ring as systems are connected to or disconnected from the hub. Typically, FC hubs support up to 10 connections and can be stacked up to 127 ports per ring. All devices connected to the hub share a common bandwidth.

Switches

Fiber Channel switches have the same functions as the LAN switches familiar to the reader. They provide a full-speed, non-blocking connection between nodes. Any node connected to an FC switch receives the full bandwidth, and as the number of ports in a switched network grows, its aggregate throughput grows too. Switches can be used together with hubs (which serve sections that do not require dedicated bandwidth for each node) to achieve the optimal price/performance ratio. By cascading switches, FC networks with 2^24 addresses (over 16 million) can potentially be created.
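The difference between shared hub bandwidth and dedicated switch bandwidth is easy to quantify. The sketch below assumes the 100 MB/s link rate quoted earlier in the article and an idealized non-blocking switch in which ports communicate in pairs; the function names are illustrative.

```python
LINK_MB_PER_S = 100  # per-link rate quoted in the article


def hub_share_per_node(active_nodes: int, link_mb_per_s: float = LINK_MB_PER_S) -> float:
    """All nodes on a hub-based loop share one link's worth of bandwidth."""
    if active_nodes < 1:
        raise ValueError("need at least one active node")
    return link_mb_per_s / active_nodes


def switch_aggregate(ports: int, link_mb_per_s: float = LINK_MB_PER_S) -> float:
    """Ideal non-blocking switch: ports talk in pairs, each pair at full rate."""
    if ports < 2:
        raise ValueError("need at least two ports")
    return (ports // 2) * link_mb_per_s


# Size of the address space mentioned in the text.
FABRIC_ADDRESS_SPACE = 2 ** 24

if __name__ == "__main__":
    print(hub_share_per_node(10))    # share of each of 10 busy nodes on a hub
    print(switch_aggregate(16))      # aggregate throughput of a 16-port switch
    print(FABRIC_ADDRESS_SPACE)
```

This is why hubs suit segments where nodes are rarely busy at the same time, while switches are preferred wherever every node needs its full link rate.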

Bridges

FC bridges (bridges or multiplexers) are used to connect devices with parallel SCSI to an FC-based network. They translate SCSI packets between Fiber Channel and parallel SCSI devices, examples of which are solid state disks (SSD) and tape libraries. It should be noted that lately manufacturers have begun to produce almost all devices that can be used in a SAN with a built-in FC interface for direct connection to storage networks.

Servers and Storage

Despite the fact that servers and storage are far from the least important SAN components, we will not dwell on their description, because we are sure that all our readers are familiar with them.

In the end, I want to add that this article is just the first step to storage networks. To fully understand the topic, the reader should pay a lot of attention to the features of the implementation of components by SAN manufacturers and management software, since without them the Storage Area Network is just a set of elements for switching storage systems that will not bring you the full benefits of implementing a storage network.

Conclusion

Today, the Storage Area Network is a fairly new technology that can soon become widespread among corporate customers. In Europe and the USA, enterprises that have a fairly large fleet of installed storage systems are already starting to switch to storage networks for organizing storage with the best indicator of total cost of ownership.

According to analysts, in 2005 a significant number of mid-level and top-level servers will ship with a pre-installed Fiber Channel interface (this trend can be seen today), and the parallel SCSI interface will be used only for connecting internal disks in servers. Already today, when building storage systems and acquiring mid-level and top-level servers, one should pay attention to this promising technology, especially since it already makes it possible to solve a number of tasks much more cheaply than with specialized solutions. Moreover, by investing in SAN technology today you will not lose your investment tomorrow, because the features of Fiber Channel create excellent opportunities for reusing today's investments in the future.

P.S.

The previous version of the article was written in June 2000, but due to the lack of mass interest in the technology of storage networks, the publication was postponed for the future. This future has come today, and I hope that this article will encourage the reader to realize the need to switch to storage network technology as an advanced technology for building storage systems and organizing access to data.

DAS, SAN, NAS - magic abbreviations, without which not a single article or a single analytical study of storage systems can do. They serve as the designation of the main types of connection between storage systems and computing systems.

DAS (direct-attached storage) is an external memory device directly connected to the main computer and used only by it. The simplest example of DAS is an integrated hard drive. In a typical DAS configuration the host is connected to external memory via SCSI, whose commands allow you to select a specific data block on a specified disk or mount a specific cartridge in a tape library.

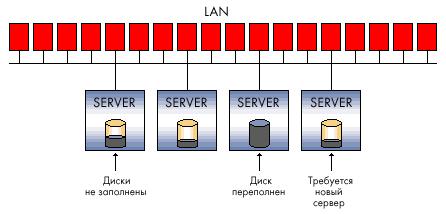

DAS configurations are acceptable where the requirements for storage capacity and reliability are modest. DAS does not allow storage capacity to be shared between different hosts, let alone data. Installing such storage devices is cheaper than a network configuration, but for large organizations this type of storage infrastructure cannot be considered optimal. Many DAS connections mean islands of external memory scattered throughout the company, whose surplus capacity cannot be used by other hosts, which leads to inefficient use of storage capacity overall.
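The "islands of external memory" problem can be shown with a small calculation. The host names and capacities below are invented for illustration: each host has its own DAS, so surplus space on one host cannot cover a shortage on another, whereas a shared pool would.

```python
def das_summary(hosts):
    """Return (stranded_gb, shortfall_gb) when every host owns its storage.

    `hosts` maps a host name to (installed_gb, needed_gb).
    """
    stranded = sum(max(0, cap - need) for cap, need in hosts.values())
    shortfall = sum(max(0, need - cap) for cap, need in hosts.values())
    return stranded, shortfall


def pooled_shortfall(hosts):
    """Shortfall if the same capacity were one pool shared by all hosts."""
    total_cap = sum(cap for cap, _ in hosts.values())
    total_need = sum(need for _, need in hosts.values())
    return max(0, total_need - total_cap)


# Hypothetical example: the mail server has spare space the database cannot use.
example = {"mail": (500, 200), "database": (300, 450)}

if __name__ == "__main__":
    print(das_summary(example))
    print(pooled_shortfall(example))
```

With direct attachment the database host is short of space even though the company as a whole has more than enough; pooling the same disks removes the shortfall without buying anything.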

In addition, with such a storage organization, there is no way to create a single point of management of external memory, which inevitably complicates the processes of data backup / recovery and creates a serious problem of information protection. As a result, the total cost of ownership of such a storage system can be significantly higher than the more complex at first glance and initially more expensive network configuration.

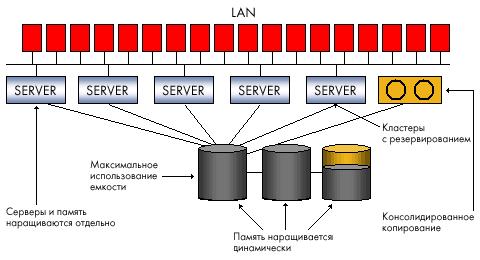

SAN

Today, speaking of an enterprise-level storage system, we mean network storage. Better known to the general public is the storage network - SAN (storage area network). SAN is a dedicated network of storage devices that allows multiple servers to use the total resource of external memory without the load on the local network.

SAN is independent of the transmission medium, but at the moment the de facto standard is Fiber Channel (FC) technology, which provides a data transfer rate of 1-2 Gbit/s. Unlike traditional SCSI-based transmission media, which provide connectivity over no more than 25 meters, Fiber Channel allows you to work at distances of up to 100 km. Fiber Channel network media can be either copper or optical fiber.

RAID disk arrays, simple disk arrays (the so-called Just a Bunch of Disks, JBOD), and tape or magneto-optical libraries for data backup and archiving can be connected to the storage network. In addition to the storage devices themselves, the main components of a SAN are adapters for connecting servers to the Fiber Channel network (host bus adapters, HBA), network devices that support one or another FC network topology, and specialized software tools for managing the storage network. These software systems can run both on a general-purpose server and on the storage devices themselves, although sometimes some of the functions are moved to a specialized thin server for managing the storage network (SAN appliance).

The goal of SAN software is primarily to centrally manage the storage network, including configuring, monitoring, controlling and analyzing network components. One of the most important functions is access control to disk arrays when heterogeneous servers operate in the SAN. Storage networks allow multiple servers to simultaneously access multiple disk subsystems by mapping each host to specific disks on a specific disk array. For different operating systems, the disk array has to be partitioned into logical units (LUNs), which they can use without conflicts. Logical units may also be allocated to give a certain pool of servers, for example the servers of one workgroup, access to the same data. Special software modules are responsible for supporting all these operations.
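The host-to-LUN mapping described above can be pictured as a simple access table. This is a minimal in-memory sketch with hypothetical class, host and array names; real SAN management software enforces such rules in the arrays, switches or HBAs rather than in a Python dictionary.

```python
from collections import defaultdict


class LunMaskingTable:
    """Hypothetical table recording which host may see which logical unit."""

    def __init__(self):
        self._grants = defaultdict(set)  # host -> {(array, lun), ...}

    def grant(self, host, array, lun):
        """Make logical unit `lun` on `array` visible to `host`."""
        self._grants[host].add((array, lun))

    def can_access(self, host, array, lun):
        """Return True only if the host was explicitly granted this unit."""
        return (array, lun) in self._grants.get(host, set())

    def hosts_sharing(self, array, lun):
        """Return the hosts that share a unit, e.g. servers of one workgroup."""
        return sorted(h for h, units in self._grants.items() if (array, lun) in units)


table = LunMaskingTable()
table.grant("nt-mail", "array-1", 0)      # one OS gets its own unit
table.grant("unix-db-a", "array-1", 1)    # two servers of one workgroup
table.grant("unix-db-b", "array-1", 1)    # share the same unit

if __name__ == "__main__":
    print(table.can_access("nt-mail", "array-1", 1))
    print(table.hosts_sharing("array-1", 1))
```

Keeping servers with different operating systems on separate logical units is what prevents them from overwriting each other's file systems, while a deliberate shared grant gives a workgroup access to common data.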

The attractiveness of storage networks is explained by the advantages that they can give organizations demanding the efficiency of working with large volumes of data. A dedicated storage network offloads the main (local or global) network of computing servers and client workstations, freeing it from data input / output streams.

This factor, as well as the high-speed transmission medium used for SAN, provide increased productivity of data exchange processes with external storage systems. SAN means consolidation of storage systems, creation of a single pool of resources on different media, which will be shared by all computing power, and as a result, the required external memory capacity can be provided with fewer subsystems. In SAN, data is backed up from disk subsystems to tapes outside the local network and therefore becomes more efficient - one tape library can be used to back up data from several disk subsystems. In addition, with the support of the corresponding software, it is possible to implement direct backup in the SAN without the participation of the server, thereby unloading the processor. The ability to deploy servers and memory over long distances meets the needs of improving the reliability of enterprise data warehouses. Consolidated data storage in the SAN scales better because it allows you to increase storage capacity independently of servers and without interrupting their work. Finally, the SAN enables centralized management of a single pool of external memory, which simplifies administration.

Of course, storage networks are an expensive and difficult solution, and despite the fact that all leading suppliers today produce Fiber Channel-based SAN devices, their compatibility is not guaranteed, and choosing the right equipment poses a problem for users. Additional costs will be required for the organization of a dedicated network and the purchase of management software, and the initial cost of the SAN will be higher than the organization of storage using DAS, but the total cost of ownership should be lower.

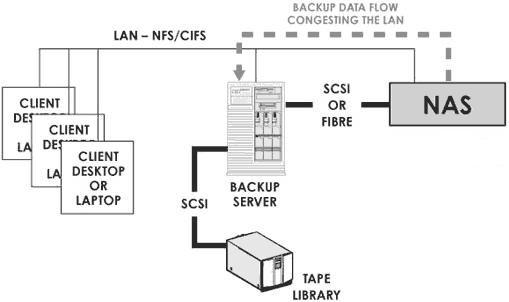

NAS

Unlike a SAN, NAS (network-attached storage) is not a network but a network storage device, more precisely a dedicated file server with a disk subsystem connected to it. Sometimes an optical or tape library is included in the NAS configuration. The NAS device (NAS appliance) is connected directly to the network and gives hosts access to files on its integrated external storage subsystem. The emergence of dedicated file servers is associated with the development of the NFS network file system by Sun Microsystems in the mid-1980s, which allowed client computers on a local network to use files on a remote server. Microsoft later offered a similar system for the Windows environment, the Common Internet File System (CIFS). NAS configurations support both of these systems, as well as other IP-based protocols, providing file sharing for client applications.

A NAS device resembles a DAS configuration but differs from it fundamentally: it provides access at the level of files, not data blocks, and allows all applications on the network to share the files on its disks. A NAS request specifies a file in the file system, an offset within that file (which is treated as a sequence of bytes) and the number of bytes to read or write. The request does not identify the volume or disk sector where the file is located. It is the job of the NAS device's operating system to translate access to a specific file into requests at the data block level. File access and the ability to share information are convenient for applications that must serve many users at the same time but do not need to load very large amounts of data for each request. For this reason it is becoming common practice to use NAS for Internet applications, Web services or CAD systems in which hundreds of specialists work on one project.
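The translation from a file-level request to block-level requests can be illustrated with a minimal sketch. It assumes a fixed block size and a per-file list of disk block numbers; the name `file_request_to_blocks`, the 4096-byte block size and the block numbers are hypothetical and do not describe any particular NAS operating system.

```python
from typing import List, Tuple

BLOCK_SIZE = 4096  # assumed block size in bytes (illustrative only)


def file_request_to_blocks(
    block_map: List[int],
    offset: int,
    nbytes: int,
    block_size: int = BLOCK_SIZE,
) -> List[Tuple[int, int, int]]:
    """Translate a file-level request (offset, nbytes) into block requests.

    block_map[i] is the disk block that holds the i-th block of the file.
    Returns a list of (disk_block, start_within_block, length) tuples.
    """
    if offset < 0 or nbytes < 0:
        raise ValueError("offset and nbytes must be non-negative")
    requests = []
    pos = offset
    end = offset + nbytes
    while pos < end:
        index = pos // block_size   # which block of the file
        start = pos % block_size    # where the data starts inside that block
        length = min(block_size - start, end - pos)
        requests.append((block_map[index], start, length))
        pos += length
    return requests


# The client asks for 5000 bytes at offset 4000 and never sees block numbers.
example_map = [120, 121, 507, 508]
print(file_request_to_blocks(example_map, 4000, 5000))
```

The client only names the file, the offset and the byte count; the block numbers exist solely on the storage side, which is the distinction from DAS and SAN access described above.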

The NAS option is easy to install and manage. Unlike a storage network, a NAS device requires no special planning and no additional management software: it is enough to connect the file server to the local network. NAS frees the servers on the network from storage management tasks, but it does not offload network traffic, since data is exchanged between the general-purpose servers and the NAS over the same local network. One or more file systems can be configured on the NAS, each of which is assigned a specific set of volumes on disk. All users of the same file system are allocated disk space on demand. NAS therefore organizes and uses storage resources more efficiently than DAS, where a directly attached storage subsystem serves only one computing resource and it can happen that one server on the local network has too much external storage while another is running out of disk space. However, a single pool of storage resources cannot be created from several NAS devices, so increasing the number of NAS nodes in the network complicates management.
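The capacity argument can be made concrete with a small calculation. This is only a sketch: the server names and the sizes in gigabytes are invented for illustration, and the two functions simply compare per-server capacity with capacity shared on demand.

```python
from typing import Dict


def unmet_demand_das(capacity: Dict[str, int], demand: Dict[str, int]) -> int:
    """With DAS, each server can use only its own directly attached disks."""
    return sum(max(0, demand[s] - capacity.get(s, 0)) for s in demand)


def unmet_demand_shared(capacity: Dict[str, int], demand: Dict[str, int]) -> int:
    """With shared storage, the same total capacity is allocated on demand."""
    return max(0, sum(demand.values()) - sum(capacity.values()))


# Hypothetical figures in GB: the mail server has spare disk, the database
# server has run out.
capacity = {"mail": 500, "db": 500}
demand = {"mail": 100, "db": 800}

print(unmet_demand_das(capacity, demand))     # shortfall with direct attachment
print(unmet_demand_shared(capacity, demand))  # shortfall when capacity is shared
```

With these assumed numbers the total capacity is sufficient, yet the directly attached layout still leaves the database server short, which is the situation the paragraph above describes.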

NAS + SAN = ?

Which form of storage infrastructure should you choose, NAS or SAN? The answer depends on the capabilities and needs of the organization. However, it is fundamentally incorrect to compare or even contrast them, since the two configurations solve different problems. File access and information sharing for applications on heterogeneous server platforms on the local network is the domain of NAS. High-performance block access to databases, storage consolidation, and guaranteed reliability and efficiency are the domain of SAN. In practice, however, things are more complicated. NAS and SAN often already coexist, or must be implemented simultaneously, in a company's distributed IT infrastructure. This inevitably creates management and storage utilization problems.

Today, manufacturers are looking for ways to integrate both technologies into a single network storage infrastructure that consolidates data, centralizes backups, and simplifies general administration, scaling and data protection. The convergence of NAS and SAN is one of the most important trends of recent times.

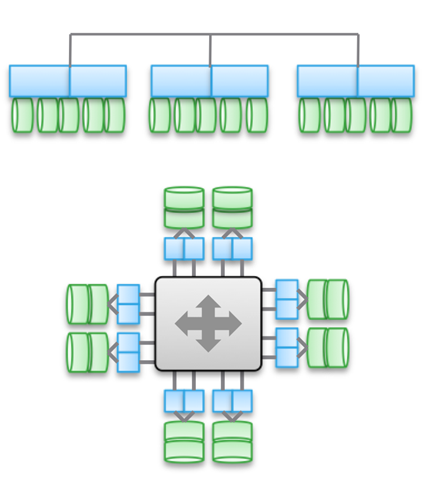

A storage network lets you create a single pool of storage resources and allocate, at the physical level, the necessary quota of disk space to each host connected to the SAN. A NAS server lets applications on different operating platforms share data in a file system, solving the problems of interpreting the file system structure and of synchronizing and controlling access to the same data. Therefore, if we want the storage network to share not only physical disks but also the logical structure of file systems, we need an intermediate management server that implements all the network protocol functions for processing requests at the file level. Hence the general approach to combining SAN and NAS: a NAS device without an integrated disk subsystem but with the ability to connect to storage network components. Such devices, which some manufacturers call NAS gateways and others call NAS head devices, become a kind of buffer between the local area network and the SAN, providing file-level access to data in the SAN and sharing information across the storage network.
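The role of such a gateway can be sketched in a few lines. The classes `SanVolume` and `NasGateway` below are hypothetical toy models, not any vendor's interface: the volume knows only numbered blocks, and the gateway, which has no disks of its own, keeps the file-to-block mapping and answers file-level requests.

```python
class SanVolume:
    """Toy block-level storage: it knows only numbered, fixed-size blocks."""

    def __init__(self, num_blocks: int, block_size: int = 512) -> None:
        self.block_size = block_size
        self.blocks = [bytes(block_size) for _ in range(num_blocks)]

    def read_block(self, n: int) -> bytes:
        return self.blocks[n]

    def write_block(self, n: int, data: bytes) -> None:
        if len(data) != self.block_size:
            raise ValueError("a block write must be exactly one block long")
        self.blocks[n] = bytes(data)


class NasGateway:
    """Toy file-level front end that stores all data on a SAN volume."""

    def __init__(self, volume: SanVolume) -> None:
        self.volume = volume
        self.files = {}      # file name -> (list of block numbers, size in bytes)
        self.next_free = 0   # naive allocator: blocks are never reused

    def write_file(self, name: str, data: bytes) -> None:
        bs = self.volume.block_size
        used = []
        for i in range(0, len(data), bs):
            chunk = data[i:i + bs].ljust(bs, b"\0")  # pad the last block
            self.volume.write_block(self.next_free, chunk)
            used.append(self.next_free)
            self.next_free += 1
        self.files[name] = (used, len(data))

    def read_file(self, name: str, offset: int, nbytes: int) -> bytes:
        used, size = self.files[name]
        bs = self.volume.block_size
        end = min(offset + nbytes, size)
        out = bytearray()
        pos = offset
        while pos < end:
            block = self.volume.read_block(used[pos // bs])
            start = pos % bs
            length = min(bs - start, end - pos)
            out += block[start:start + length]
            pos += length
        return bytes(out)


volume = SanVolume(num_blocks=16, block_size=8)
gateway = NasGateway(volume)
gateway.write_file("report.txt", b"hello storage world")
print(gateway.read_file("report.txt", 6, 7))
```

A real gateway would also handle locking, access control and the NFS or CIFS protocols mentioned earlier; the sketch leaves all of that out and shows only the split between file-level and block-level responsibilities.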

Summary

Building unified network systems that combine the capabilities of SAN and NAS is just one step toward the global integration of enterprise storage systems. Disk arrays connected directly to individual servers no longer satisfy the needs of large organizations with complex distributed IT infrastructures. Today, storage networks based on the high-performance but specialized Fibre Channel technology are seen not only as a breakthrough but also as a source of headaches, because of the complexity of installation and problems with hardware and software support from different vendors. However, there is no longer any doubt that storage resources must be unified and networked. Ways of achieving optimal consolidation are being sought. Hence the increased activity of manufacturers of solutions that support various options for moving storage networks to IP, and hence the great interest in various implementations of the concept of storage virtualization. Leading players in the storage market not only group all their products under a common brand (TotalStorage for IBM or SureStore for HP), but also formulate their own strategies for creating consolidated, networked storage infrastructures and protecting corporate data. The key role in these strategies will be played by the idea of virtualization, supported mainly by powerful software for centralized management of distributed storage. In initiatives such as StorageTank from IBM, Federated Storage Area Management from HP and E-Infostructure from EMC, software plays a crucial role.