Information is the driving force of modern business and is currently considered the most valuable strategic asset of any enterprise. The amount of information is growing exponentially with the growth of global networks and the development of e-commerce. To succeed in the competition for information, you need an effective strategy for storing, protecting, sharing and managing your most important digital asset, data, both today and in the near future.

Regardless of whether you are talking about a small, medium or large organization, the right storage solutions are an integral part of your success. Because of the size and value of the data collected, there are a number of problems associated with storing it and keeping it available.

Storing information in a distributed structure entails high costs for acquiring and collecting data, and storage space is often used inefficiently. On the other hand, losing access to the data and the ability to manage it can lead to a loss of market position, damage to reputation and, ultimately, even the collapse of the organization. In complex IT environments, it is not easy to offer the solution that best suits your needs. We specialize in storage solutions tailored to the needs of your business, taking into account the type and size of the stored data, the systems you have, and the required level of protection and access speed.

Storage management has become one of the most pressing strategic challenges facing IT department staff. Due to the development of the Internet and fundamental changes in business processes, information is accumulating at an unprecedented rate. Besides the urgent need to support a constant increase in the volume of stored information, ensuring reliable data storage and constant access to information is no less urgent. For many companies, data access “24 hours a day, 7 days a week, 365 days a year” has become the norm.

Our experience allows us to create safe, efficient and scalable solutions. We can show you what such solutions offer a business: consolidation of stored data; lower total cost of ownership of the storage infrastructure; increased reliability; scalability to meet emerging needs; a higher level of security for stored data; more efficient, simplified and centralized storage management; reduced data maintenance costs; increased productivity; and easier access to stored data. Knowing how important the data stored in your organization is, you know how important it is to choose the right solution that takes your business needs into account.

In the case of a single PC, a storage system can be understood as a separate internal hard disk or disk system. For enterprise storage, three storage technologies are traditionally distinguished: Direct Attached Storage (DAS), Network Attached Storage (NAS) and Storage Area Network (SAN).

Direct Attached Storage (DAS)

DAS technology implies a direct connection of drives to a server or PC. The drives (hard drives, tape drives) can be either internal or external. The simplest DAS system is a single disk inside a server or PC. An internal RAID array of disks organized with a RAID controller can also be classified as a DAS system.


It is worth noting that, although the term DAS system can formally be applied to a single disk or to an internal disk array, a DAS system usually means an external rack or drive enclosure that can be considered a standalone storage system (Fig. 1). In addition to an independent power supply, such standalone DAS systems have a specialized controller (processor) for managing the drive array. For example, a RAID controller capable of building RAID arrays of various levels can serve as such a controller.

Data has become the bloodstream of any organization, and the way it is used is changing rapidly. In the traditional model, data was stored and accessed only at certain times. According to analysts, less than 40 percent of the data stored in enterprises is ever used to obtain important business information. New applications, for example for video analysis, diagnostics or training, generate data that is not stored but only processed in real time.

On the other hand, open source cloud storage solutions can become significantly more expensive due to the fast and unpredictable growth of data sets. As organizations undergo digital transformation, they emphasize the need to bring data and computing resources together.

Fig. 1. An example of a DAS storage system

It should be noted that standalone DAS systems can have several external I/O channels, which makes it possible to connect several computers to the DAS system at the same time.

The interfaces for connecting drives (internal or external) in DAS technology can be SCSI (Small Computer Systems Interface), SATA, PATA and Fibre Channel. While SCSI, SATA and PATA are used primarily for connecting internal drives, Fibre Channel is used exclusively for connecting external drives and standalone storage systems. The advantage of Fibre Channel here is that it is not subject to the strict cable-length limits of the other interfaces, so it can be used when the server or PC is located at a considerable distance from the DAS system. SCSI and SATA can also be used to connect external storage systems (in this case the SATA interface is called eSATA); however, these interfaces strictly limit the maximum length of the cable between the DAS system and the connected server.


The main advantages of DAS systems include their low cost (compared with other storage solutions), ease of deployment and administration, and high data transfer speed between the storage system and the server. For these reasons they have become very popular among small offices and small corporate networks. At the same time, DAS systems have drawbacks, including poor manageability and suboptimal resource utilization, since each DAS system requires a dedicated server.

In addition, new licenses, available as one-, three- and five-year subscriptions, allow customers to choose the right automation plan at the right price. The announced innovations are part of the evolution of the next-generation data center architecture, which lets organizations combine the best aspects of the public cloud and the on-premises data center. The result is a complete, secure, fast and efficient hybrid cloud. Organizations are under increasing pressure to deliver new services and applications quickly, and many are turning to a continuous integration and continuous delivery model.

DAS systems currently hold the leading position, but their share of sales is steadily decreasing. They are gradually being replaced either by universal solutions that allow smooth migration to NAS systems, or by systems that can be used as DAS, NAS and even SAN systems.

At the same time, digital transformation is creating thousands of new business applications that are becoming the main interface between customers and the company, especially for digital transactions. By paying for resources dynamically as demand changes, customers can better align their payments with their actual level of use. A computing system is a combination of hardware and software resources that interact with each other to meet user requirements.

DAS systems should be used when the disk space of a single server needs to be increased and moved outside the server chassis. DAS systems can also be recommended for workstations that process large amounts of data (for example, non-linear video editing stations).

Network Attached Storage (NAS)

NAS systems are network storage systems that connect directly to the network in the same way as a network print server, router or any other network device (Fig. 2). In fact, NAS systems are an evolution of file servers: the difference between a traditional file server and a NAS device is roughly the same as between a hardware network router and a software router running on a dedicated server.

Hardware: all the physical elements of a computer system used to receive, process, store and display data. Software: all the programs that run on a computer system and provide its functionality. Together, the hardware and software components allow the user to interact with system resources such as file systems, peripheral devices, etc.

The central unit of the system consists of a central processor and a memory unit. The information entering the computing system falls into three categories: data to be processed; instructions specifying the processing to perform on the data (addition, subtraction, comparison, etc.); and addresses that locate the various data and instructions.

Fig. 2. An example of a NAS storage system

To understand the difference between a traditional file server and a NAS device, recall that a traditional file server is a dedicated computer (server) that stores information available to network users. The information can be stored on hard drives installed in the server (as a rule, in special drive cages), or DAS devices can be connected to the server. The file server is administered through the server operating system. This approach to organizing storage is currently the most popular among small local area networks, but it has one significant drawback: a general-purpose server (especially combined with a server operating system) is by no means cheap, and a file server does not use most of the functionality a general-purpose server offers. The idea is to create an optimized file server with an optimized operating system and a balanced configuration. This is the concept that the NAS device embodies. In this sense, NAS devices can be considered “thin” file servers, otherwise known as filers.

You can define several levels of data storage.

At the beginning of the computer era, memory existed structurally in several forms: delay lines, cathode-ray tubes or magnetic cores. Depending on whether it retains information when power is removed, the memory of a computer system is divided into volatile memory and non-volatile memory.

Volatile memory requires a power source to retain information; if power fails, the memory loses its stored data. In static RAM and registers, each bit is stored in a bistable, latch-type circuit. Processor registers are built into the processor and are one of the fastest types of memory. Each register typically holds a 32- or 64-bit word.

In addition to an optimized OS stripped of all functions unrelated to file system maintenance and data I/O, NAS systems use a file system optimized for access speed. NAS systems are designed so that all their computing power is focused solely on file serving and storage operations. The operating system itself resides in flash memory and is preinstalled by the manufacturer. Naturally, when a new OS version is released, the user can “reflash” the system independently. Connecting a NAS device to the network and configuring it is a fairly simple task that any experienced user can handle, let alone a system administrator.

In terms of capacity, the cache is much smaller than main memory, but its speed allows the necessary data to be accessed quickly. Non-volatile memory is memory that does not lose its contents when power is interrupted. It can be accessed electrically or mechanically.

Read-only memories of this kind are relatively slow and can usually only be read, although some of them can be rewritten using special procedures. The firmware they hold performs the self-test of the hardware components, initializes them and lets them communicate with each other, and loads the operating system from storage or over the network.

Thus, compared with traditional file servers, NAS devices offer higher performance at lower cost. Currently, almost all NAS devices are designed for Ethernet (Fast Ethernet, Gigabit Ethernet) networks based on the TCP/IP protocols. NAS devices are accessed using special file access protocols, the most common of which are CIFS, NFS and DAFS.

In addition to magneto-optical media, an unconventional type of memory is paper, in the form of punched tape and punched cards. We have already discussed the first system, what it is and what it does, but the topic is not exhausted: it continues with the advantages of this system over an ordinary hard drive. Among the most important:

It lets any device we have on hand access the data at any time, provided that device is authorized to join the network the storage is connected to. Data can also be made available on the network from servers. Such a system is very complex and is used only for mission-critical applications; designing, configuring, installing and maintaining it are the responsibility of the solution provider, and staff even need training to work with it.

CIFS (Common Internet File System) is a protocol that provides access to files and services on remote computers (including over the Internet) using a client-server model. The client sends the server a request for access to files; the server executes the request and returns the result. CIFS is traditionally used for file access in Windows local networks. CIFS uses the TCP/IP protocol to transport data. It provides FTP-like (File Transfer Protocol) functionality but gives clients better control over files. It also lets clients share files using file locking, and automatically restores the connection to the server after a network failure.
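To make the request/response model and file locking described above concrete, here is a toy, in-memory Python sketch. It is not CIFS: the class `ToyFileServer`, its `handle` method and the operation names are hypothetical, and there is no network transport, authentication or reconnection logic.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class ToyFileServer:
    """Hypothetical in-memory file server illustrating client-server file access.

    Not an implementation of CIFS/SMB: it only mimics a client sending a
    request, the server executing it and returning a result, and a lock held
    by one client blocking writes from other clients.
    """
    files: dict = field(default_factory=dict)  # path -> contents
    locks: dict = field(default_factory=dict)  # path -> id of the client holding the lock

    def handle(self, client: str, op: str, path: str, data: Any = None) -> tuple:
        holder: Optional[str] = self.locks.get(path)
        locked_by_other = holder is not None and holder != client
        if op == "lock":
            if locked_by_other:
                return ("denied", holder)
            self.locks[path] = client
            return ("ok", None)
        if op == "unlock":
            if holder != client:  # only the lock holder may release it
                return ("denied", holder)
            del self.locks[path]
            return ("ok", None)
        if op == "read":
            return ("ok", self.files.get(path))
        if op == "write":
            if locked_by_other:
                return ("denied", holder)
            self.files[path] = data
            return ("ok", None)
        return ("error", f"unknown operation {op!r}")


if __name__ == "__main__":
    server = ToyFileServer()
    print(server.handle("alice", "lock", "/report.txt"))          # ('ok', None)
    print(server.handle("bob", "write", "/report.txt", "draft"))  # ('denied', 'alice')
    print(server.handle("alice", "write", "/report.txt", "v1"))   # ('ok', None)
    print(server.handle("bob", "read", "/report.txt"))            # ('ok', 'v1')
```

Reads are allowed while a file is locked in this sketch; only writes are blocked, which is one simple locking policy among several a real protocol could offer.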

The NFS (Network File System) protocol is traditionally used on UNIX platforms and combines a distributed file system with a network protocol. NFS also uses a client-server model: it provides access to files on a remote host (server) as if they were on the user's own computer. NFS uses the TCP/IP protocol to transport data. The WebNFS protocol was developed to run NFS over the Internet.

The DAFS (Direct Access File System) protocol is a standard file access protocol based on NFS. It allows applications to transfer data directly to transport resources, bypassing the operating system and its buffers. DAFS delivers high file I/O speeds and reduces processor load by significantly cutting the number of operations and interrupts usually required to process network protocols.

DAFS was designed primarily for cluster and server environments running databases and a variety of Internet applications that require continuous operation. It provides very low latency when accessing shared file resources and data, and supports intelligent mechanisms for restoring system and data integrity, which makes it attractive for use in NAS systems.

To summarize, NAS systems can be recommended for multi-platform networks where network access to files is required and ease of installation and administration of the storage system are important. A good example is using a NAS device as the file server in a small company's office.

Storage Area Network (SAN)

A SAN is no longer a single device but an integrated solution: a specialized network infrastructure for data storage. Storage networks are integrated as separate specialized subnets into a local (LAN) or wide area (WAN) network.

In essence, a SAN connects one or more servers (SAN servers) to one or more storage devices. A SAN allows any SAN server to access any storage device without loading other servers or the local network. Data can also be exchanged between storage devices without the participation of servers. In effect, a SAN allows a very large number of users to store information in one place (with fast, centralized access) and share it. RAID arrays, various libraries (tape, magneto-optical, etc.) and JBOD systems (disk arrays not combined into RAID) can be used as storage devices.

Storage networks began to develop and be deployed intensively only in 1999.

Just as local area networks can in principle be built on various technologies and standards, SANs can also be built with various technologies. But just as Ethernet (Fast Ethernet, Gigabit Ethernet) has become the de facto standard for local area networks, the Fibre Channel (FC) standard dominates storage networks. In fact, it was the development of the Fibre Channel standard that gave rise to the SAN concept itself. At the same time, the iSCSI standard, which can also be used to build SANs, is becoming increasingly popular.

Along with its speed, one of the most important advantages of Fibre Channel is its ability to work over long distances and its topology flexibility. Storage network topologies are built on the same principles as traditional switch- and router-based local networks, which greatly simplifies building multi-node system configurations.

It is worth noting that the Fibre Channel standard uses both fiber-optic and copper cables for data transmission. For access to geographically remote nodes up to 10 km away, standard equipment and single-mode optical fiber are used. If the nodes are farther apart (tens or even hundreds of kilometers), special amplifiers are used.

SAN Network Topology

A typical SAN based on the Fibre Channel standard is shown in Fig. 3. Its infrastructure consists of storage devices with a Fibre Channel interface; SAN servers (servers connected both to the local area network via Ethernet and to the SAN via Fibre Channel); and a switching fabric (Fibre Channel Fabric) built from Fibre Channel switches (or hubs) and optimized for transferring large blocks of data. Network users access the storage system through the SAN servers. Importantly, the traffic inside the SAN is separated from the IP traffic of the local network, which reduces the load on the local network.

Fig. 3. Typical SAN Network Diagram

SAN Benefits

The main advantages of SAN technology include high performance, high level of data availability, excellent scalability and manageability, the ability to consolidate and virtualize data.

Fibre Channel switching fabrics with a non-blocking architecture allow multiple SAN servers to access storage devices simultaneously.

In a SAN architecture, data can easily be moved from one storage device to another, which makes it possible to optimize data placement. This is especially important when multiple SAN servers need simultaneous access to the same storage devices. Note that such data consolidation is not possible with other technologies, for example with DAS devices, which are connected directly to the servers.

Another feature provided by the SAN architecture is data virtualization. The idea of virtualization is to give SAN servers access to resources rather than to individual storage devices; that is, servers should “see” virtual resources rather than storage devices. In practice, virtualization can be implemented by placing a special virtualization device between the SAN servers and the disk devices, with the storage devices connected on one side and the SAN servers on the other. In addition, many modern FC switches and HBAs provide virtualization capabilities.
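The idea of presenting virtual resources instead of physical devices can be sketched as a simple block-address mapping. The Python below is a minimal illustration, assuming a virtual volume is just an ordered list of extents on physical devices; the names `Extent`, `VirtualVolume`, `resolve` and the device names are hypothetical, and real virtualization devices or FC switches also handle striping, caching and live remapping that this sketch omits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Extent:
    device: str  # hypothetical name of a physical storage device
    start: int   # first physical block of the extent on that device
    length: int  # number of blocks in the extent


class VirtualVolume:
    """A virtual resource seen by a SAN server, backed by extents on real devices."""

    def __init__(self, extents: list[Extent]):
        self.extents = list(extents)
        self.size = sum(e.length for e in self.extents)

    def resolve(self, lba: int) -> tuple[str, int]:
        """Translate a logical block address into (device, physical block)."""
        if not 0 <= lba < self.size:
            raise IndexError(f"block {lba} is outside a volume of {self.size} blocks")
        offset = lba
        for extent in self.extents:
            if offset < extent.length:
                return extent.device, extent.start + offset
            offset -= extent.length
        raise AssertionError("unreachable: the size check guarantees a match")


if __name__ == "__main__":
    vol = VirtualVolume([Extent("array-A", 1000, 100), Extent("array-B", 0, 50)])
    print(vol.size)          # 150
    print(vol.resolve(99))   # ('array-A', 1099)
    print(vol.resolve(100))  # ('array-B', 0)
```

The server only ever addresses blocks 0 to 149 of one volume; which physical array actually holds a block is decided by the mapping, so data can be moved between devices by changing the extents without the server noticing.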

Another capability provided by SANs is remote data mirroring. Mirroring duplicates information on several media, which increases the reliability of storage. The simplest example of data mirroring is combining two disks into a RAID 1 array, in which the same information is written to both disks simultaneously. The drawback of this method is that both disks are in the same location (as a rule, in the same enclosure or rack). Storage networks overcome this drawback and make it possible to mirror not just individual storage devices but entire SANs, which can be hundreds of kilometers apart.
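The mirroring principle (every write is duplicated to all copies, and a read can be served by any surviving copy) can be sketched in a few lines of Python. This is a toy model under stated assumptions: `MirroredVolume` and the site names are hypothetical, writes are synchronous and always succeed, and there is no resynchronization of a copy after it recovers.

```python
class MirroredVolume:
    """Toy RAID 1-style mirror: each write goes to every healthy copy."""

    def __init__(self, sites: list[str]):
        # One dict per copy (block number -> data). In a SAN the copies could
        # sit at different sites; here they are just in-memory dicts.
        self.copies = {site: {} for site in sites}
        self.failed: set[str] = set()

    def write(self, block: int, data: bytes) -> None:
        for site, copy in self.copies.items():
            if site not in self.failed:
                copy[block] = data

    def read(self, block: int) -> bytes:
        # Any healthy copy can serve the read because all copies are identical.
        for site, copy in self.copies.items():
            if site not in self.failed:
                return copy[block]
        raise OSError("all mirror copies have failed")

    def fail(self, site: str) -> None:
        self.failed.add(site)


if __name__ == "__main__":
    m = MirroredVolume(["site-1", "site-2"])
    m.write(7, b"ledger")
    m.fail("site-1")   # lose the first copy
    print(m.read(7))   # b'ledger', served by site-2
```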

Another advantage of SANs is how easy they make data backup. The traditional backup technology used in most local area networks requires a dedicated backup server and, most importantly, dedicated network bandwidth. In fact, during a backup the server itself becomes unavailable to users of the local network, which is why backups are usually performed at night.

The storage network architecture allows a fundamentally different approach to backup. Here the backup server is an integral part of the SAN and connects directly to the switching fabric, so backup traffic is isolated from local network traffic.

Equipment used to create SANs

As already noted, deploying a SAN requires storage devices, SAN servers and equipment for building a switching fabric. The switching fabric includes physical layer devices (cables, connectors), interconnect devices for linking SAN nodes with each other, and translation devices that convert the Fibre Channel (FC) protocol to other protocols, for example SCSI, FCP, FICON, Ethernet, ATM or SONET.

Cables

As already noted, the Fibre Channel standard allows both fiber-optic and copper cables to connect SAN devices, and different cable types can be used within one SAN. Copper cable is used for short distances (up to 30 m), while fiber-optic cable is used both for short distances and for distances of 10 km and more. Both multimode and single-mode fiber-optic cables are used: multimode for distances up to 2 km and single-mode for longer distances.
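The distance rules in the paragraph above can be written as a small selection function. The Python sketch below uses only the figures quoted in this article (copper up to 30 m, multimode fiber up to 2 km, single-mode fiber beyond that, and amplifiers beyond roughly 10 km as mentioned earlier); `choose_fc_cable` is a hypothetical helper, it assumes copper is preferred whenever it is allowed, and real limits depend on the link speed and the specific optics.

```python
def choose_fc_cable(distance_m: float) -> str:
    """Suggest a Fibre Channel medium for a link of the given length in meters.

    The thresholds are the rule-of-thumb values quoted in the text, not values
    taken from the Fibre Channel specifications.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= 30:
        return "copper"                             # short runs, e.g. within a rack
    if distance_m <= 2_000:
        return "multimode fiber"
    if distance_m <= 10_000:
        return "single-mode fiber"
    return "single-mode fiber with amplifiers"      # tens or hundreds of km


if __name__ == "__main__":
    for d in (5, 300, 8_000, 80_000):
        print(d, choose_fc_cable(d))
```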

Different cable types coexist within the same SAN thanks to special interface converters: GBICs (Gigabit Interface Converters) and MIAs (Media Interface Adapters).

The Fibre Channel standard provides several possible transmission rates (see table). Currently, the most common FC device standards are 1GFC, 2GFC and 4GFC. Higher-speed devices are backward compatible with lower-speed ones; that is, a 4GFC device automatically supports connections to 1GFC and 2GFC devices.
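Backward compatibility means two ports can always settle on a rate they share. Below is a simplified Python model, assuming the link simply runs at the highest rate supported by both ends; the actual speed negotiation procedure is defined in the Fibre Channel standards and is not reproduced here, and the names `SUPPORTED_RATES` and `link_rate` are illustrative.

```python
# Rates each device class supports, in GFC. Per the text, higher-speed
# devices are backward compatible, so a 4GFC device also supports 1 and 2GFC.
SUPPORTED_RATES = {
    "1GFC": {1},
    "2GFC": {1, 2},
    "4GFC": {1, 2, 4},
}


def link_rate(device_a: str, device_b: str) -> int:
    """Return the highest rate (in GFC) common to both devices."""
    common = SUPPORTED_RATES[device_a] & SUPPORTED_RATES[device_b]
    if not common:
        raise ValueError(f"{device_a} and {device_b} share no common rate")
    return max(common)


if __name__ == "__main__":
    print(link_rate("4GFC", "2GFC"))  # 2
    print(link_rate("4GFC", "4GFC"))  # 4
```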

Connection Devices (Interconnect Device)

The Fibre Channel standard allows various network topologies for connecting devices, such as point-to-point, arbitrated loop (FC-AL) and switched fabric.

The point-to-point topology can be used to connect the server to a dedicated storage system. In this case, the data is not shared with the servers of the SAN network. In fact, this topology is a variant of the DAS system.

To implement a point-to-point topology, you need at minimum a server equipped with a Fibre Channel adapter and a storage device with a Fibre Channel interface.

The shared-access ring (arbitrated loop, FC-AL) topology connects devices so that data is transmitted over a logically closed loop. In an FC-AL ring, either hubs or Fibre Channel switches can serve as connection devices. With hubs, the bandwidth is shared among all nodes in the ring, whereas with a switch, each port provides the full protocol bandwidth to its node.

Fig. 4 shows an example of a Fibre Channel shared-access ring.

Fig. 4. Example of a Fibre Channel shared-access ring

The configuration resembles the physical star and logical ring used in Token Ring local networks. As in Token Ring networks, data moves around the ring in one direction; unlike Token Ring, however, a device can request the right to transmit data instead of waiting for an empty token. Fibre Channel shared-access rings can address up to 127 ports, but in practice typical FC-AL rings contain up to 12 nodes, and once 50 nodes are connected, performance drops sharply.
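Requesting the right to transmit can be modeled very simply. In FC-AL each port has an arbitrated loop physical address (AL_PA), and when several ports arbitrate at once, the one with the lowest AL_PA value has the highest priority. The Python sketch below shows only that priority rule plus the 127-port limit quoted above; it omits the access-fairness mechanism that keeps low-priority ports from being starved, and the function names are hypothetical.

```python
MAX_LOOP_PORTS = 127  # addressing limit for one arbitrated loop, as quoted in the text


def winner(arbitrating_al_pas: list[int]) -> int:
    """Return the AL_PA that wins arbitration: lowest value = highest priority."""
    if not arbitrating_al_pas:
        raise ValueError("no port is arbitrating")
    return min(arbitrating_al_pas)


def can_add_port(ports_on_loop: int) -> bool:
    """True if another port can still be addressed on the loop."""
    return ports_on_loop < MAX_LOOP_PORTS


if __name__ == "__main__":
    print(hex(winner([0xE8, 0x23, 0x01])))        # 0x1
    print(can_add_port(126), can_add_port(127))  # True False
```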

The Fibre Channel switched-fabric topology is built on Fibre Channel switches. In this topology, each device has a logical connection to every other device. In effect, Fibre Channel fabric switches perform the same functions as traditional Ethernet switches. Recall that, unlike a hub, a switch is a high-speed device that provides one-to-one connections and handles several simultaneous connections. Every node connected to a Fibre Channel switch receives the full protocol bandwidth.
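The bandwidth difference between hub-based loops and switches described above comes down to simple arithmetic. The Python sketch below is an idealized average, assuming every node is equally busy and ignoring arbitration overhead (in a real loop only one pair of ports transfers data at a time); `per_node_bandwidth` and the link rate of 100 units are hypothetical.

```python
def per_node_bandwidth(link_rate: float, active_nodes: int, device: str) -> float:
    """Idealized average bandwidth per active node, in the same units as link_rate."""
    if active_nodes < 1:
        raise ValueError("need at least one active node")
    if device == "hub":
        # All nodes on the loop share one link's bandwidth.
        return link_rate / active_nodes
    if device == "switch":
        # Each switch port delivers the full rate to its own node.
        return link_rate
    raise ValueError(f"unknown device type {device!r}")


if __name__ == "__main__":
    for n in (2, 4, 12):
        print(n, per_node_bandwidth(100, n, "hub"), per_node_bandwidth(100, n, "switch"))
```

With 12 busy nodes on a hub, each one averages about a twelfth of the link, while behind a switch each still sees the full rate, which is why large SANs push hubs down to the lowest level of a mixed topology.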

In most cases, a mixed topology is used to create large SANs. At the lower level, FC-AL rings are used, connected to low-performance switches, which, in turn, are connected to high-speed switches, providing the highest possible throughput. Several switches can be connected to each other.

Translation Devices

Translation devices are intermediate devices that convert the Fibre Channel protocol to higher-layer protocols. They are designed to connect a Fibre Channel network to an external WAN or a local network, and to connect various devices and servers to a Fibre Channel network. Such devices include bridges, Fibre Channel host bus adapters (HBAs), routers, gateways and network adapters. The classification of translation devices is shown in Fig. 5.

Fig. 5. Classification of translation devices

The most common translation devices are PCI-based HBAs, which connect servers to a Fibre Channel network. Network adapters connect local Ethernet networks to Fibre Channel networks. Bridges connect SCSI storage devices to a Fibre Channel network. Note that recently almost all storage devices designed for SANs have a built-in Fibre Channel interface and do not require bridges.

Data storage devices

SANs can use both hard drives and tape drives as storage devices. Hard drives in a SAN can be configured either as JBOD arrays or as RAID disk arrays. Traditionally, SAN storage devices come as external racks or enclosures equipped with a specialized RAID controller. Unlike NAS or DAS devices, devices for SAN systems have a Fibre Channel interface, while the disks themselves can have either a SCSI or SATA interface.

In addition to hard disk storage devices, tape drives and libraries are widely used in SAN networks.

SAN Servers

SAN servers differ from regular application servers in only one detail: in addition to an Ethernet network adapter for communicating with the local network, they are equipped with an HBA, which connects them to Fibre Channel-based SANs.

Intel Storage Systems

Next, we look at a few specific examples of Intel storage devices. Strictly speaking, Intel does not release complete solutions and is engaged in the development and production of platforms and individual components for building storage systems. Based on these platforms, many companies (including a number of Russian companies) produce finished solutions and sell them under their logos.

Intel Entry Storage System SS4000-E

The Intel Entry Storage System SS4000-E is a NAS device designed for use in small and medium-sized offices and multi-platform local area networks. When using the Intel Entry Storage System SS4000-E, clients based on Windows, Linux, and Macintosh platforms gain shared network access to data. In addition, the Intel Entry Storage System SS4000-E can act as both a DHCP server and a DHCP client.

The Intel Entry Storage System SS4000-E is a compact external enclosure that holds up to four SATA drives (Fig. 6). Thus, with 500 GB drives, the maximum system capacity is 2 TB.

Fig. 6. Intel Entry Storage System SS4000-E

The Intel Entry Storage System SS4000-E uses a SATA RAID controller that supports RAID levels 1, 5 and 10. Because this system is a NAS device, that is, in effect a “thin” file server, it must have a specialized processor, memory and a flashed operating system. The SS4000-E uses an Intel 80219 processor clocked at 400 MHz. The system also has 256 MB of DDR memory and 32 MB of flash memory for the operating system, which is based on Linux kernel 2.6.

To connect to a local network, the system provides a two-channel gigabit network controller. In addition, there are also two USB ports.

The Intel Entry Storage System SS4000-E supports the CIFS/SMB, NFS and FTP protocols, and the device is configured through a web interface.

When Windows clients are used (Windows 2000, 2003 and XP are supported), data backup and recovery are also available.

Intel Storage System SSR212CC

The Intel Storage System SSR212CC is a universal platform for building DAS, NAS and SAN storage systems. It comes in a 2U chassis designed for a standard 19-inch rack (Fig. 7). The SSR212CC supports up to 12 hot-swappable SATA or SATA II drives, which allows a system capacity of up to 6 TB with 500 GB drives.

Fig. 7. Intel Storage System SSR212CC

In fact, the Intel Storage System SSR212CC is a full-fledged high-performance server that can run Red Hat Enterprise Linux 4.0, Microsoft Windows Storage Server 2003, Microsoft Windows Server 2003 Enterprise Edition and Microsoft Windows Server 2003 Standard Edition.

The core of the server is an Intel Xeon processor with a clock speed of 2.8 GHz (FSB frequency 800 MHz, 1 MB L2 cache size). The system supports the use of SDRAM DDR2-400 with ECC up to a maximum of 12 GB (six DIMM slots are provided for installing memory modules).

The Intel Storage System SSR212CC has two Intel SRCS28X RAID controllers that support RAID levels 0, 1, 10, 5 and 50. In addition, the SSR212CC has a dual-channel gigabit network controller.
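The usable capacity of the arrays these controllers build follows directly from the RAID level. Below is a minimal Python sketch, assuming identical drives, ignoring formatting and metadata overhead, treating RAID 1 as a mirror in which every drive holds the same data, and assuming RAID 50 is built from a chosen number of equal RAID 5 groups (`groups`, default 2). `usable_capacity_gb` is a hypothetical helper; the four-drive example uses the 500 GB drives quoted for the SS4000-E, and the 12-drive example assumes 500 GB drives as well.

```python
def usable_capacity_gb(level: str, drives: int, drive_gb: float, groups: int = 2) -> float:
    """Usable capacity in GB of a RAID array built from identical drives."""

    def need(condition: bool, message: str) -> None:
        if not condition:
            raise ValueError(f"RAID {level}: {message}")

    if level == "0":
        need(drives >= 1, "needs at least 1 drive")
        return drives * drive_gb                  # striping, no redundancy
    if level == "1":
        need(drives >= 2, "needs at least 2 drives")
        return drive_gb                           # every drive holds the same data
    if level == "10":
        need(drives >= 4 and drives % 2 == 0, "needs an even number of drives, at least 4")
        return drives // 2 * drive_gb             # stripe across mirrored pairs
    if level == "5":
        need(drives >= 3, "needs at least 3 drives")
        return (drives - 1) * drive_gb            # one drive's worth of parity
    if level == "50":
        need(groups >= 2 and drives % groups == 0 and drives // groups >= 3,
             "needs at least 2 equal RAID 5 groups of 3 or more drives")
        return (drives - groups) * drive_gb       # one parity drive per group
    raise ValueError(f"unsupported RAID level {level!r}")


if __name__ == "__main__":
    for level in ("0", "5", "10"):
        print(level, usable_capacity_gb(level, 4, 500))
    print("50", usable_capacity_gb("50", 12, 500))
```

The trade-off is visible in the numbers: with four 500 GB drives, RAID 0 exposes all 2000 GB but tolerates no failure, RAID 5 gives 1500 GB and survives one failed drive, and RAID 10 gives 1000 GB while mirroring every block.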

Intel Storage System SSR212MA

The Intel Storage System SSR212MA is a platform for building iSCSI-based IP SAN storage systems.

It is housed in a 2U chassis for mounting in a standard 19-inch rack. The SSR212MA accepts up to 12 hot-swappable SATA drives, giving a raw capacity of up to 6 TB with 500 GB drives.

In its hardware configuration, the Intel Storage System SSR212MA is no different from the Intel Storage System SSR212CC.

What are storage systems, and what are they for? What is the difference between iSCSI and FibreChannel? Why has this term become familiar to a wide circle of IT specialists only in recent years, and why do storage questions increasingly occupy thoughtful minds?

Many people have probably noticed a trend in the computing world around us: the shift from an extensive development model to an intensive one. Raising processor clock speeds no longer yields visible gains, and drive development cannot keep pace with the growth of information. With processors the way forward is more or less clear: build multiprocessor systems and/or put several cores in one processor. With storing and processing information, the problems are not so easy to get rid of. The current remedy for the information epidemic is storage. The term covers both a storage area network and a storage system proper. In any case, it’s special

Main problems solved by storage systems

So what tasks is a storage system designed to solve? Consider the typical problems that come with the growing volume of information in any organization, assuming it has at least a few dozen computers and several geographically separated offices.

1. Decentralization of information - where once all data could literally be stored on a single hard disk, now every functional system needs its own storage, for example the e-mail server, the DBMS, the domain controller and so on. The situation becomes more complicated with distributed offices (branches).

2. Avalanche-like growth of information - the number of hard drives that can be installed in a given server often cannot provide the capacity the system needs. As a result:

It is impossible to fully protect the stored data, because it is quite difficult even to back up data that is not only spread across different servers but also geographically distributed.

Information processing is too slow - communication channels between remote sites still leave much to be desired, and even with a sufficiently “thick” channel it is not always possible to make full use of existing networks (IP, for example) for this work.

Backup is complicated - if data is read and written in small blocks, a full archive of a remote server over the existing channels can be unrealistic, because the entire volume of data has to be transferred. Local archiving is often impractical for financial reasons: it requires backup hardware (tape drives, for example), special software (which can be expensive) and trained, qualified staff.

3. It is difficult or impossible to predict the required disk space when deploying a computer system. As a result:

Expanding disk capacity is problematic - it is quite difficult to get terabytes of capacity into a server, especially if the system is already running on existing small disks; at a minimum, this requires shutting the system down and making inefficient financial investments.

Resources are used inefficiently - sometimes you cannot guess on which server data will grow faster. The e-mail server may be critically short of free disk space, while another machine uses only 20% of an expensive disk subsystem (SCSI, for example).

4. Low confidentiality of distributed data - it is impossible to control and restrict access in line with the enterprise security policy. This applies both to access to data over the channels intended for it (the local area network) and to physical access to media: hard drives may be stolen or destroyed, for example to disrupt the organization's business. Unqualified actions by users and maintenance staff can be even more harmful. When a company has to solve small local security problems separately in each office, the result falls short.

5. Complexity of managing distributed information flows - any action that changes data in a branch holding part of the distributed data causes problems, ranging from synchronizing databases and versions of developer files to unnecessary duplication of information.

6. Low economic return from “classic” solutions - as the information network grows, data volumes increase and the enterprise structure becomes more distributed, investments become less effective and often cannot solve the problems that arise.

7. High cost of the resources needed to keep the entire enterprise information system running - from maintaining a large staff of qualified personnel to numerous expensive hardware solutions intended to deliver the required capacity and access speed together with reliable storage and protection against failures.
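The backup problem in point 2 is largely a matter of arithmetic: a full copy of a remote server has to cross the WAN link in its entirety. The sketch below estimates the transfer time. The 500 GB data volume, the link speeds and the 80% link efficiency are illustrative assumptions, not figures from any particular installation, and the function name is hypothetical.

```python
def transfer_hours(data_gb: float, link_mbit_s: float, efficiency: float = 0.8) -> float:
    """Hours to move data_gb (decimal gigabytes) over a link of link_mbit_s.

    efficiency is an assumed share of the nominal link rate that the backup
    stream actually gets (protocol overhead, competing traffic).
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_gb * 1e9 * 8
    usable_bits_per_s = link_mbit_s * 1e6 * efficiency
    return bits / usable_bits_per_s / 3600


for link in (2, 10, 100):   # hypothetical WAN link speeds in Mbit/s
    print(f"500 GB over {link} Mbit/s: about {transfer_hours(500, link):.0f} h")
```

Even at 100 Mbit/s a full copy takes most of a day, which is why a full archive of a remote server over existing channels is often unrealistic.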

In light of these problems, which sooner or later overtake, fully or partly, any dynamically developing company, we will try to describe storage systems as they should be, along with typical connection schemes and types of storage systems.

Megabytes / transactions?

Hard disks used to sit inside the computer (server); now they have run out of room there, and they are not very reliable in that position either. The simplest solution, developed long ago and used everywhere, is RAID technology.

images \\ RAID \\ 01.jpg

Organizing disks into RAID in a storage system not only protects information but also brings several clear advantages, one of which is faster access to data.

From the point of view of a user or an application, speed is determined not only by throughput (MB/s) but also by the number of transactions, that is, the number of I/O operations per unit of time (IOPS). Naturally, more disks and the performance techniques a RAID controller provides (such as caching) both raise IOPS.
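How the disk count and RAID level translate into IOPS can be estimated with a widely used rule of thumb: every host read costs one back-end disk operation, while every host write costs a level-dependent "write penalty" (for RAID 5, a read-modify-write of data and parity, i.e. four operations). The sketch below applies it. The penalty table is the commonly quoted one, controller caching (which the text mentions) is deliberately ignored, and the 80 IOPS per SATA drive and 70% read mix are assumed example values.

```python
# Commonly quoted back-end I/O cost of one host write at each RAID level
# (RAID 5 read-modify-write: read data + read parity + write data + write parity).
WRITE_PENALTY = {"0": 1, "1": 2, "10": 2, "5": 4, "50": 4}


def host_iops(drives: int, iops_per_drive: float, level: str, read_fraction: float) -> float:
    """Rule-of-thumb random IOPS an array can serve to hosts, ignoring controller cache."""
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be between 0 and 1")
    backend_iops = drives * iops_per_drive
    write_fraction = 1.0 - read_fraction
    # each read costs one back-end I/O, each write costs WRITE_PENALTY[level]
    return backend_iops / (read_fraction + write_fraction * WRITE_PENALTY[level])


for level in ("10", "5"):
    print(f"RAID {level}: ~{host_iops(12, 80, level, read_fraction=0.7):.0f} IOPS")
```

Adding drives raises the numerator linearly, which is why a storage system with many spindles delivers more transactions than a server with a few local disks.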

Whereas overall throughput matters most for streaming video or a file server, for a DBMS or any OLTP (online transaction processing) application the critical figure is the number of transactions the system can process. And here modern hard drives look far less impressive than they do in capacity and, to some extent, speed. Storage systems are designed to address exactly these problems.
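Why small random transactions look so much worse than streaming can be seen from a simple service-time model of one disk: each random I/O pays an average seek plus, on average, half a rotation before any data moves. The sketch below turns that into IOPS and MB/s. The 8.5 ms seek time and 60 MB/s media rate are assumed illustrative values for a 7200 rpm drive, and the model ignores caching and command queuing.

```python
def random_io_profile(io_bytes: int, rpm: int = 7200, avg_seek_ms: float = 8.5,
                      media_mb_s: float = 60.0) -> tuple:
    """Return (IOPS, MB/s) for one disk doing purely random I/O of io_bytes each."""
    half_rotation_ms = 60_000 / rpm / 2                    # average rotational latency
    transfer_ms = io_bytes / (media_mb_s * 1e6) * 1000     # time actually moving data
    service_ms = avg_seek_ms + half_rotation_ms + transfer_ms
    iops = 1000 / service_ms
    return iops, iops * io_bytes / 1e6


for size in (8 * 1024, 1024 * 1024):    # OLTP-like 8 KB vs. streaming-like 1 MB requests
    iops, mb_s = random_io_profile(size)
    print(f"{size // 1024:>5} KB: {iops:5.1f} IOPS, {mb_s:5.2f} MB/s")
```

With 8 KB requests almost all of the time goes into positioning the heads, so a single disk manages well under 1 MB/s; throughput (MB/s) and transactions (IOPS) really are different figures of merit.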

Protection levels

Keep in mind that every storage system is built on protecting information with RAID technology. Without it, even the most technically advanced storage system is useless, because hard drives are its least reliable component. Organizing disks into RAID is the “lower link”, the first echelon of information protection and faster processing.
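The protection that parity-based RAID levels (RAID 5, RAID 50) provide rests on a simple property of XOR: the parity block is the XOR of the data blocks in a stripe, so any single missing block equals the XOR of all the survivors. The sketch below shows this for one stripe. It is simplified on purpose: real RAID 5 rotates parity across drives and works on much larger blocks, and the block contents are made up.

```python
from functools import reduce


def xor_blocks(blocks: list) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


data = [b"ACCT", b"LOGS", b"MAIL"]      # three data blocks of one stripe
parity = xor_blocks(data)                # parity block stored on a fourth drive

# Simulate losing drive 1 and rebuilding its block from the survivors plus parity.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt)                           # b'LOGS'
```

A second simultaneous failure in the same stripe cannot be repaired this way, which is why RAID is only the first echelon of protection.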

Beyond RAID schemes, there is also lower-level data protection built “on top of” the technologies that the drive manufacturer embeds in the HDD itself. For example, EMC, one of the leading storage manufacturers, uses a technique for additional data integrity checking at the drive sector level.

Having dealt with RAID, let's move on to the structure of storage systems themselves. First of all, storage systems are classified by the host (server) connection interface they use. External interfaces are mainly SCSI and FibreChannel, plus the fairly young iSCSI standard. Small intelligent storage units that connect even via USB or FireWire should not be discounted either. We will not consider rarer (and sometimes simply unsuccessful) interfaces, such as IBM SSA, or interfaces developed for mainframes, such as FICON/ESCON. NAS storage, which connects to an Ethernet network, stands apart. The word “interface” here mostly means an external connector, but remember that the connector does not determine the protocol two devices use to communicate. We will return to these features a little later.

images \\ RAID \\ 02.gif

SCSI stands for Small Computer System Interface (pronounced “scuzzy”), a half-duplex parallel interface. In modern storage systems it is most often represented by a SCSI connector:

images \\ RAID \\ 03.gif

images \\ RAID \\ 04.gif

It also comprises a group of SCSI protocols, more specifically the SCSI-3 Parallel Interface. Compared with the familiar IDE, SCSI supports more devices per channel, longer cables and higher transfer rates, as well as “exclusive” features such as high-voltage differential signaling and command queuing, among others; we won't go into these here.

Among manufacturers of SCSI components, such as SCSI adapters and RAID controllers with a SCSI interface, any specialist will immediately name two: Adaptec and LSI Logic. That is probably enough; there have been no revolutions in this market for a long time, and none are expected.

FibreChannel interface

A full-duplex serial interface. In modern equipment it is most often represented by external optical connectors such as LC or SC (LC is the smaller of the two):

images \\ RAID \\ 05.jpg

images \\ RAID \\ 06.jpg

...and by the FibreChannel Protocols (FCP). There are several schemes for interconnecting FibreChannel devices:

Point-to-point: devices are connected directly to each other:

images \\ RAID \\ 07.gif

Crosspoint switched: devices are connected to a FibreChannel switch (much as an Ethernet network is built on switches):

images \\ RAID \\ 08.gif

Arbitrated loop (FC-AL): all devices are connected to each other in a ring with arbitrated access, a scheme somewhat reminiscent of Token Ring. A switch can also be used, in which case the physical topology is a “star” and the logical topology is a “loop” (or “ring”):

images \\ RAID \\ 09.gif

The FibreChannel switched scheme is the most common; in FibreChannel terminology this kind of connection is called a fabric. Note that FibreChannel switches are quite advanced devices: in internal complexity they are close to Layer 3 IP switches. Interconnected switches operate as a single fabric and share a pool of settings that apply to the whole fabric at once. Changing certain options on one switch can trigger reconfiguration of the entire fabric, to say nothing of access authorization settings, for example. On the other hand, some SAN designs use several fabrics within a single SAN. Only a group of interconnected switches forms a fabric: two or more switches that are not interconnected, added to a SAN to increase fault tolerance, form two or more separate fabrics.

Components that tie hosts and storage systems into a single network are commonly called “connectivity”. Connectivity includes duplex cables (usually with LC connectors), switches and FibreChannel adapters (HBAs, Host Bus Adapters), that is, expansion cards that connect the host they are installed in to the SAN. HBAs are typically PCI-X or PCI Express cards.

images \\ RAID \\ 10.jpg

Do not confuse “Fibre” with “fiber”: the signal propagation medium can vary. FibreChannel can run over copper. For example, all FibreChannel hard drives have metal contacts, and copper interconnects between devices are not uncommon; the industry is simply moving gradually to optical links as the most promising technology and a functional replacement for copper.

iSCSI interface

It is usually represented by an external RJ-45 connector for connecting to an Ethernet network, together with the iSCSI (Internet Small Computer System Interface) protocol itself. In SNIA's definition, “iSCSI is a protocol that is based on TCP/IP and is designed to establish interoperability and manage storage systems, servers and clients.” We will look at this interface in more detail, if only because any user can try iSCSI even on an ordinary “home” network.

Note that iSCSI defines at least a transport protocol for SCSI that runs on top of TCP, and a technology for encapsulating SCSI commands in an IP-based network. Simply put, iSCSI is a protocol that provides block access to data by sending SCSI commands over a network with a TCP/IP stack. iSCSI emerged as an alternative to FibreChannel and has several advantages over it in modern storage systems: devices can be connected over long distances (using existing IP networks), a specified QoS (Quality of Service) level can be provided, and connectivity is cheaper. However, the main problem with using iSCSI as a replacement for FibreChannel is the high latency introduced by the network and by the characteristics of TCP/IP stack implementations, which negates one of the important advantages of storage systems: fast, low-latency access to information. This is a serious drawback.
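To make “encapsulating SCSI commands” concrete, the sketch below builds the bytes of a SCSI READ(10) command and wraps it in the 48-byte Basic Header Segment of an iSCSI SCSI Command PDU, following the field layout of the iSCSI specification (RFC 3720). It is a byte-layout illustration only: there is no login phase, no TCP connection (a real initiator would send this over TCP, by default to port 3260, after logging in), no digests and no response handling. The 512-byte block size, the function names and all numeric values are assumptions for the example.

```python
import struct

BLOCK_SIZE = 512  # assumed logical block size of the target LUN


def read10_cdb(lba: int, blocks: int) -> bytes:
    """SCSI READ(10) CDB: opcode 0x28, flags, 32-bit LBA, group, 16-bit length, control."""
    return struct.pack(">BBIBHB", 0x28, 0, lba, 0, blocks, 0)


def scsi_command_bhs(cdb: bytes, lun: int, task_tag: int, cmd_sn: int,
                     exp_stat_sn: int, expected_len: int) -> bytes:
    """48-byte Basic Header Segment of an iSCSI SCSI Command PDU for a read."""
    if len(cdb) > 16:
        raise ValueError("CDBs longer than 16 bytes need an additional header segment")
    if not 0 <= lun < 256:
        raise ValueError("this sketch only encodes single-level LUNs 0-255")
    opcode = 0x01                      # SCSI Command
    flags = 0x80 | 0x40 | 0x01         # F (final) + R (read) + ATTR = simple task
    header = struct.pack(">BBHB", opcode, flags, 0, 0)  # bytes 0-4; byte 4 = TotalAHSLength
    header += (0).to_bytes(3, "big")   # bytes 5-7: DataSegmentLength (no immediate data)
    header += bytes([0, lun]) + bytes(6)                # bytes 8-15: LUN field
    header += struct.pack(">IIII", task_tag, expected_len, cmd_sn, exp_stat_sn)  # bytes 16-31
    header += cdb.ljust(16, b"\x00")   # bytes 32-47: CDB padded to 16 bytes
    return header


cdb = read10_cdb(lba=2048, blocks=8)
pdu = scsi_command_bhs(cdb, lun=0, task_tag=1, cmd_sn=1, exp_stat_sn=1,
                       expected_len=8 * BLOCK_SIZE)
print(len(pdu), pdu.hex())
```

The SCSI command itself is untouched; iSCSI only adds addressing (LUN), sequencing (CmdSN, ExpStatSN) and task tracking around it, and TCP/IP carries the result.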

A brief remark about hosts: they can use either ordinary network cards, in which case iSCSI stack processing and command encapsulation are done in software, or specialized cards supporting technologies such as TOE (TCP/IP Offload Engine). This technology processes the corresponding part of the iSCSI protocol stack in hardware. The software approach is cheaper, but it puts more load on the server's CPU and can, in theory, cause longer delays than a hardware implementation. With Ethernet currently running at 1 Gbit/s, one might assume that iSCSI would be exactly half as fast as 2 Gbit/s FibreChannel, but in real use the difference is even more noticeable.
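Why extra latency hurts so much can be shown with Little's law: the number of I/Os in flight equals throughput multiplied by latency, so with a fixed number of outstanding requests, every added millisecond per I/O directly lowers achievable IOPS. The sketch below applies this. The 0.5 ms base latency and the added delays are hypothetical values, and the calculation assumes latency stays constant as queue depth grows, which real arrays do not guarantee.

```python
def max_iops(outstanding_ios: int, latency_ms: float) -> float:
    """Little's law: throughput = concurrency / latency (latency converted to seconds)."""
    return outstanding_ios / (latency_ms / 1000)


BASE_LATENCY_MS = 0.5                  # hypothetical I/O served from the array cache
for extra_ms in (0.0, 0.2, 0.5):       # hypothetical delay added by network/software stack
    for depth in (1, 8):               # outstanding I/Os the host keeps in flight
        iops = max_iops(depth, BASE_LATENCY_MS + extra_ms)
        print(f"extra {extra_ms} ms, queue depth {depth}: {iops:.0f} IOPS")
```

Applications that issue one request at a time (queue depth 1), typical of many transactional workloads, feel the added delay in full, which is why latency rather than raw link speed is the weak point of software iSCSI.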

Besides the protocols already discussed, let us briefly mention a couple of rarer ones designed to provide additional services for existing storage area networks (SANs):

FCIP (Fibre Channel over IP) - a tunneling protocol built on TCP/IP and designed to connect geographically dispersed SANs through a standard IP network. For example, two SANs can be joined into one over the Internet. This is done with an FCIP gateway that is transparent to all devices in the SAN.

iFCP (Internet Fibre Channel Protocol) - a protocol for connecting devices with FC interfaces over IP networks. The key difference from FCIP is that iFCP joins individual FC devices over the IP network rather than tunneling whole SANs, so each pair of connections can have its own QoS level, which is impossible when tunneling through FCIP.

We have briefly examined the physical interfaces, protocols and switching types of storage systems without trying to list every possible option. Now let's look at the parameters that characterize storage systems.

Main hardware parameters of storage

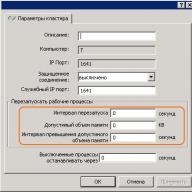

Some of them were listed above: the type of external host interfaces and the type of internal drives (hard drives). The next parameter worth considering after these two when choosing a disk storage system is reliability. Reliability is better judged not by the hackneyed mean time between failures of individual components (this figure is roughly the same for all manufacturers) but by the internal architecture. From the outside, a “regular” storage system is often a disk shelf (for mounting in a 19-inch cabinet) with hard drives, external host interfaces and several power supplies. Inside is everything that makes the storage system work: processor units, disk controllers, I/O ports, cache memory and so on. The rack is usually managed from the command line or through a web interface, and the initial configuration often requires a serial connection.

The user can “split” the disks in the system into groups and combine them into RAID arrays of various levels; the resulting disk space is divided into one or more logical units (LUNs), which hosts (servers) access and “see” as local hard drives. The number of RAID groups and LUNs, the cache logic, which LUNs are available to which servers and everything else are configured by the system administrator. Storage systems are usually designed to serve not one but several servers (in theory, up to hundreds), so such a system must offer high performance, flexible control and monitoring, and well-thought-out data protection. Data protection is provided in many ways, the simplest of which you already know: combining drives into RAID. However, the data must also be constantly accessible, because stopping a storage system central to the enterprise can cause significant losses.
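The administrator's job of deciding which LUNs each server may see is often called LUN masking. The sketch below models it as a table of LUNs, each with the set of host initiators allowed to access it. Everything here is hypothetical: the class names, the RAID group labels and the short host identifiers (real arrays identify hosts by FibreChannel WWPN or iSCSI IQN and are configured through the vendor's CLI or web interface, not through code like this).

```python
from dataclasses import dataclass, field


@dataclass
class Lun:
    lun_id: int
    raid_group: str
    size_gb: int
    allowed_initiators: set = field(default_factory=set)


@dataclass
class StorageArray:
    luns: list = field(default_factory=list)

    def present(self, lun_id: int, initiator: str) -> None:
        """Grant one host initiator access to a LUN (LUN masking)."""
        for lun in self.luns:
            if lun.lun_id == lun_id:
                lun.allowed_initiators.add(initiator)
                return
        raise KeyError(f"no LUN {lun_id}")

    def visible_luns(self, initiator: str) -> list:
        """LUN ids this initiator would see as its local disks."""
        return [lun.lun_id for lun in self.luns if initiator in lun.allowed_initiators]


array = StorageArray([Lun(0, "RG0-RAID5", 500), Lun(1, "RG0-RAID5", 200),
                      Lun(2, "RG1-RAID10", 300)])
array.present(0, "host-mail")
array.present(1, "host-db")
array.present(2, "host-db")
print(array.visible_luns("host-db"))
```

Because capacity lives in one pool, growing the e-mail server's space becomes a matter of presenting another LUN rather than buying disks for that particular machine.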
The more systems keep their data on a storage system, the more reliable access to it must be: if the storage system fails, every server that stores data there stops working at once. High rack availability is achieved by complete internal duplication of all system components: access paths to the rack (FibreChannel ports), processor modules, cache memory, power supplies and so on. The following figure illustrates the principle of 100% redundancy (duplication):

images \\ RAID \\ 11.gif

1. The controller (processor module) of the storage system, including:

* central processor (or processors), which usually runs special software that acts as the “operating system”;

* interfaces to the hard disks; in our case, these are boards that connect FibreChannel disks in an arbitrated loop (FC-AL);

* cache memory;

* FibreChannel external port controllers

2. External FC interfaces; as the figure shows, each processor module has two of them;

3. Hard disks - the capacity is expanded with additional disk shelves;

4. Cache memory in such a scheme is usually mirrored so as not to lose the data stored there when any module fails.
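The effect of duplicating components can be put in numbers with the standard series/parallel availability formulas: components that are all required multiply their availabilities, while for duplicated components the system fails only if every copy fails. The sketch below applies them. The availability figures are hypothetical, failures are assumed independent, and failover between the copies is assumed to work instantly, which in practice depends on the software layers described in the next section.

```python
def series(*availabilities: float) -> float:
    """All components are needed: availability is the product."""
    result = 1.0
    for a in availabilities:
        result *= a
    return result


def parallel(*availabilities: float) -> float:
    """Any one component suffices: the group fails only if every member fails."""
    unavailable = 1.0
    for a in availabilities:
        unavailable *= (1.0 - a)
    return 1.0 - unavailable


controller, power = 0.999, 0.995     # hypothetical availability of single modules
single = series(controller, power)
duplicated = series(parallel(controller, controller), parallel(power, power))
print(f"single: {single:.6f}, fully duplicated: {duplicated:.6f}")
```

Duplicating each module turns roughly a day and a half of expected downtime per year into well under an hour, which is the point of 100% internal redundancy.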

In terms of hardware, disk racks can differ in host interfaces, hard drive interfaces, schemes for connecting additional shelves (which increase the number of disks in the system) and other purely “hardware” parameters.

Storage Software

Naturally, all this hardware power has to be managed, and storage systems are expected to provide a level of service and functionality unavailable in conventional client-server schemes. Looking at the figure “Structural diagram of a data storage system”, it is clear that when a server is connected directly to the rack over two links, they must go to FC ports on different processor modules so that the server keeps working even if an entire processor module fails. To use multipathing, of course, every hardware and software layer involved in the data transfer must support it. Full redundancy also makes no sense without monitoring and alerting, so every serious storage system has these capabilities. Notification of critical events can use various channels: e-mail alerts, an automatic modem call to the technical support center, a pager message (nowadays more often SMS), SNMP and more.

As already mentioned, all this is managed through powerful controls: usually a web interface, a console, scripting and integration with external management software. As for the mechanisms that give storage systems their high performance, we will mention them only briefly: a non-blocking architecture with several internal buses and many hard drives, powerful central processors, a specialized control system (OS), a large cache and many external I/O interfaces.

The services a storage system provides are usually determined by software running on the disk rack itself. Almost always, these are complex software packages sold under separate licenses that are not included in the price of the storage system. The multipathing software mentioned above is an exception: it runs on the hosts, not on the rack.

The next most popular solution is software for creating instant and full copies of data. Manufacturers use different names for their products and copy mechanisms; generalizing, we can speak of snapshots and clones. A clone is made by the disk rack inside the rack itself: it is a complete internal copy of the data. Its uses are broad, from backup to creating a “test version” of the source data, for example for risky upgrades whose outcome is uncertain and which are unsafe to try on live data. A reader who has followed all the virtues of storage systems discussed here might ask: why back up data inside the rack if it is so reliable? The answer is simple: no one is immune to human error. The data is stored reliably, but if the operator does something wrong, such as deleting an important table in the database, no hardware trick will help. Data is usually cloned at the LUN level. The snapshot mechanism offers more interesting functionality. To some extent it gives all the benefits of a full internal copy (clone) without taking up space equal to 100% of the copied data inside the rack, a volume that is not always available. In effect, a snapshot is an instant “picture” of the data that takes practically no time or storage processor resources to create.
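One common way to get an instant snapshot that does not consume 100% of the source volume is copy-on-write: creating the snapshot copies nothing, and a block's old contents are saved only the first time that block is overwritten afterwards. The text does not say which technique any particular vendor uses (others, such as redirect-on-write, exist), so the toy in-memory model below is only an illustration; the class names and block contents are hypothetical.

```python
from typing import Dict, List, Optional


class Volume:
    """Toy block device: block number -> contents."""

    def __init__(self, blocks: Dict[int, bytes]):
        self.blocks = dict(blocks)
        self.snapshots: List["Snapshot"] = []

    def snapshot(self) -> "Snapshot":
        snap = Snapshot(self)          # creation copies nothing, so it is instant
        self.snapshots.append(snap)
        return snap

    def write(self, block: int, data: bytes) -> None:
        # Copy-on-write: before the first overwrite of a block after a snapshot,
        # save its old contents (None if the block did not exist yet).
        for snap in self.snapshots:
            if block not in snap.saved:
                snap.saved[block] = self.blocks.get(block)
        self.blocks[block] = data


class Snapshot:
    def __init__(self, volume: Volume):
        self.volume = volume
        self.saved: Dict[int, Optional[bytes]] = {}   # only blocks changed since creation

    def read(self, block: int) -> Optional[bytes]:
        if block in self.saved:
            return self.saved[block]
        return self.volume.blocks.get(block)         # unchanged blocks are shared


vol = Volume({0: b"orders-v1", 1: b"users-v1"})
snap = vol.snapshot()
vol.write(0, b"orders-v2")
vol.write(0, b"orders-v3")
print(snap.read(0), vol.blocks[0], len(snap.saved))
```

The snapshot's space cost grows only with the number of blocks changed since it was taken, and the price is an extra copy on the first write to each block.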

We must also mention data replication software, often called mirroring. It is a mechanism for synchronous or asynchronous replication (duplication) of information from one storage system to one or more remote storage systems. Replication can use various channels: for example, racks with FibreChannel interfaces can replicate asynchronously to another storage system over the Internet and across long distances. This provides reliable information storage and protection against disasters.

In addition to all of the above, there are a large number of other software mechanisms for data manipulation ...

DAS & NAS & SAN

Having become acquainted with storage systems themselves, their design principles, capabilities and protocols, it's time to combine this knowledge into a working scheme. Let's look at the types of storage systems and the topologies for connecting them into a single working infrastructure.

DAS (Direct Attached Storage) devices are storage systems connected directly to a server. This includes both the simplest SCSI systems connected to the server's SCSI/RAID controller and FibreChannel devices connected directly to the server, even though they are designed for SANs. In the latter case, the DAS topology is a degenerate SAN (storage area network):

images \\ RAID \\ 12.gif

In this scheme, only one server has access to the data stored on the storage system. Clients reach the data through this server over the network. In other words, the server has block access to the data on the storage system, while clients use file access; this distinction is very important to understand. The disadvantages of such a topology are obvious:

* Low reliability - in case of network problems or server crashes, data becomes inaccessible to everyone at once.

* High latency due to the processing of all requests by one server and the transport used (most often - IP).

* High network load, which often limits how far the system can scale by adding clients.

* Poor manageability - the entire capacity is available to one server, which reduces the flexibility of data distribution.

* Low utilization of resources - it is difficult to predict the required data volumes; some DAS devices in an organization may have excess capacity (disks) while others lack it, and redistribution is often impossible or time-consuming.

NAS (Network Attached Storage) devices are storage devices connected directly to the network. Unlike other systems, NAS provides file access to data and nothing else. A NAS device combines a storage system with the server it is connected to. In its simplest form, an ordinary network server that provides file shares is a NAS device:

images \\ RAID \\ 13.gif

All the disadvantages of this scheme are similar to those of the DAS topology, with some exceptions. Among the added drawbacks is an increased, often significantly increased, cost; however, cost is proportional to functionality, and there is often “something to pay for”. NAS devices range from the simplest “boxes” with one Ethernet port and two hard drives in RAID 1, offering file access over a single protocol, CIFS (Common Internet File System), to huge systems that hold hundreds of hard drives, with file access provided by a dozen specialized servers inside the NAS system. The number of external Ethernet ports can reach many tens, and the stored data capacity several hundred terabytes (for example, EMC Celerra CNS). The reliability and performance of such models can exceed those of many midrange SAN devices. Interestingly, NAS devices can be part of a SAN and have no drives of their own, providing only file access to data stored on block storage devices. In this case the NAS acts as a powerful specialized server and the SAN as the storage device, so we get a DAS topology built from NAS and SAN components.

NAS devices are very good in a heterogeneous environment where many clients need fast file access to data at the same time. They also offer excellent storage reliability and flexible system management combined with ease of maintenance. We will not dwell on reliability, which was discussed above. As for heterogeneous environments, files on a single NAS system can be accessed over TCP/IP via CIFS, NFS, FTP, TFTP and other protocols, and a NAS can even act as an iSCSI target, which allows it to work with the various operating systems installed on hosts. Ease of maintenance and management flexibility come from a specialized OS that is hard to put out of action and needs no maintenance, and from the ease of delimiting file permissions. For example, a NAS can work in a Windows Active Directory environment with the required functionality: LDAP, Kerberos authentication, Dynamic DNS, ACLs, quotas, Group Policy Objects and SID history. Because access is provided to files, whose names may contain characters from various languages, many NAS devices support UTF-8 and Unicode encodings. Choosing a NAS requires even more care than choosing DAS, because the equipment may not support services you need, such as Microsoft's Encrypting File System (EFS) or IPsec. Incidentally, NAS systems are much less widespread than SAN devices, but their share is still growing steadily, if slowly, mainly by displacing DAS.

SAN (Storage Area Network) devices are devices for connecting to a storage network. A storage area network (SAN) should not be confused with a local area network; these are different networks. Most often, a SAN is based on the FibreChannel protocol stack and, in the simplest case, consists of storage systems, switches and servers connected by optical links. The figure shows a highly reliable infrastructure in which servers are connected simultaneously to the local network (left) and to the storage network (right):

images \\ RAID \\ 14.gif

After such a detailed discussion of the devices and how they work, the SAN topology is easy to understand. The figure shows a single storage system for the entire infrastructure, with two servers connected to it. The servers have redundant access paths: each has two HBAs (or one dual-port HBA, which lowers fault tolerance). The storage device has 4 ports, through which it connects to 2 switches. Assuming there are two redundant processor modules inside, the best connection scheme is clearly one in which each switch is connected to both the first and the second processor module. This scheme keeps all data on the storage system accessible if any processor module, switch or access path fails. We have already covered the reliability of storage systems themselves; two switches and two fabrics raise the availability of the topology further, so if one switch fails because of a fault or an administrator error, the other keeps working normally, because the two are not interconnected.
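The claim that no single processor module, switch or access path failure cuts a server off can be checked mechanically by enumerating the routes of the topology just described: two HBAs, each on its own switch (fabric), and each switch cabled to one port on each processor module. The sketch below does this for one server. All component names are hypothetical labels for the figure, and a failed HBA-to-switch cable is treated the same as a failed HBA, since it breaks the same routes.

```python
# Hypothetical labels for one server's share of the dual-fabric layout described above.
HBA_TO_SWITCH = {"hba1": "switchA", "hba2": "switchB"}
SWITCH_TO_PORTS = {"switchA": ["spA-port0", "spB-port0"],
                   "switchB": ["spA-port1", "spB-port1"]}
PORT_TO_SP = {"spA-port0": "spA", "spA-port1": "spA",
              "spB-port0": "spB", "spB-port1": "spB"}


def paths() -> list:
    """Every HBA -> switch -> array port -> processor module route."""
    result = []
    for hba, switch in HBA_TO_SWITCH.items():
        for port in SWITCH_TO_PORTS[switch]:
            result.append((hba, switch, port, PORT_TO_SP[port]))
    return result


def surviving_paths(failed: str) -> list:
    """Routes that do not pass through the failed component."""
    return [p for p in paths() if failed not in p]


components = (set(HBA_TO_SWITCH) | set(SWITCH_TO_PORTS)
              | set(PORT_TO_SP) | set(PORT_TO_SP.values()))
for component in sorted(components):
    assert surviving_paths(component), f"single point of failure: {component}"
print(len(paths()), "logical paths; no single point of failure among",
      len(components), "components")
```

The four routes match the four logical paths per server discussed below; the server itself is deliberately not modeled, and it remains the weakest link unless servers are clustered.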

The server connection shown is called a high-availability connection, although more HBAs can be installed in a server if necessary. Physically, each server has only two connections to the SAN, but logically the storage system is reachable over four paths: each HBA has access to two connection points on the storage system, one for each processor module (this is what the double connection of each switch to the storage system provides). In this diagram, the least reliable device is the server. Two switches provide reliability of the order of 99.99%, but a server can fail for many reasons. If the whole system must be highly reliable, the servers are combined into a cluster; the scheme above needs no additional hardware for this and is considered the reference SAN design. The simplest case is servers connected over a single path through one switch to the storage system. Even then, a storage system with two processor modules must be connected to the switch with at least one channel per module; the remaining ports can be used to connect servers directly to the storage system, which is sometimes necessary. And do not forget that a SAN can be built not only on FibreChannel but also on the iSCSI protocol, using only standard Ethernet devices for switching; this reduces the cost of the system but has a number of additional disadvantages (described in the section on iSCSI). Another interesting capability is booting servers from the storage system, so a server does not even need internal hard drives. The task of storing data is thus removed from the servers entirely. 
In theory, a specialized server can be turned into an ordinary number cruncher without any drives, whose defining components are central processors, memory and interfaces to the outside world, such as Ethernet and FibreChannel ports. Modern blade servers are something like such devices.

Note that the devices that can be connected to a SAN are not limited to disk storage systems: they can also be disk libraries, tape libraries (tape drives), optical disc storage devices (CD/DVD, etc.) and many others.

The only drawback of a SAN we will note is the high cost of its components, but the advantages are undeniable:

* High reliability of access to data on external storage systems. Independence of the SAN topology from the storage systems and servers used.

* Centralized data storage (reliability, security).

* Convenient centralized management of switching and data.

* Intensive I/O traffic moves to a separate network, offloading the LAN.

* High speed and low latency.

* Scalability and flexibility of the SAN's logical structure.

* Unlike classic DAS, a SAN can span virtually unlimited geographic distances.

* Ability to quickly distribute resources between servers.

* Ability to build fault-tolerant cluster solutions at no additional cost based on the existing SAN.

* Simple backup scheme - all data is in one place.

* The presence of additional features and services (snapshots, remote replication).

* High security SAN.

Finally

I think we have adequately covered the main issues related to modern storage systems. Let's hope these devices develop functionally even faster and the number of data management mechanisms keeps growing.

In conclusion, NAS and SAN solutions are currently experiencing a real boom. The number of manufacturers and the variety of solutions are increasing, and consumers' technical literacy is growing. We can safely assume that in the near future some kind of storage system will appear in almost every computing environment.

All data reaches us in the form of information, and the purpose of any computing device is to process information. Its volume has recently been growing at a sometimes frightening rate, so storage systems and specialized software will undoubtedly be among the most sought-after IT products in the coming years.