Consider the main linear programming problem (LPP): find nonnegative values of the variables x1, x2, ..., xn satisfying m conditions - equalities

and maximizing the linear function of these variables

For simplicity, we will assume that all conditions (1) are linearly independent (r = m), and we will reason under this assumption.

Any set of nonnegative values x1, x2,…, xn satisfying conditions (1) is called an admissible solution of the OZLP. An optimal solution is that of the admissible solutions that maximizes function (2). It is required to find the optimal solution.

Does this problem always have a solution? No not always.

LPP is undecidable (does not have an optimal solution):

Due to the incompatibility of the system of restrictions. Those. the system has no solution, as shown in Figure 1.

Figure 1 - Inconsistency of the system of restrictions

Due to the unboundedness of the objective function on the set of solutions. In other words, when solving LPP at max, the value of the objective function tends to infinity, and in the case of LPP at min, to minus infinity, as shown in Figure 2.

Figure 2 - Unboundedness of the objective function on the set of solutions

LPP is solvable:

Many solutions are made up of one point. It is also optimal, as shown in Figure 3.

Figure 3 - Many solutions consist of one point

The only optimal solution for the LPP. The straight line corresponding to the objective function at the limiting positions intersects with many solutions at one point, as shown in Figure 4.

Figure 4 - The only optimal solution

The optimal solution to the LPP is not the only one. Vector N is perpendicular to one of the sides of the set of solutions. In this case, any point on the segment AB is optimal, as shown in Figure 5.

Figure 5 - The optimal solution is not the only one

Solving linear programming problems using the simplex method

The simplex method is an algorithm for solving the LP problem, which implements the enumeration of the corner points of the region of feasible solutions in the direction of improving the value of the objective function C. The simplex method is the main one in linear programming.

The use of this method in the diploma project to solve the LP problem is due to the following factors:

The method is universal, applicable to any linear programming problem in the canonical form;

The algorithmic nature of the method makes it possible to successfully program and implement it with the help of technical means.

The extremum of the objective function is always attained at the corner points of the region of feasible solutions. First of all, some feasible initial (support) solution is found, i.e. any corner point of the region of feasible solutions. The procedure of the method allows you to answer the question whether this solution is optimal. If yes, then the problem is solved. If "no", then a transition is made to the adjacent corner point of the feasible solution area, where the value of the objective function improves. The process of enumerating the corner points of the region of feasible solutions is repeated until a point is found, which corresponds to the extremum of the objective function.

Since the number of vertices of the polytope is limited, then in a finite number of steps it is guaranteed to find the optimal value or to establish the fact that the problem is unsolvable.

The system of constraints here is a system of linear equations in which the number of unknowns is greater than the number of equations. If the rank of the system is equal, then it is possible to choose unknowns that are expressed in terms of the remaining unknowns. For definiteness, it is usually assumed that the first consecutive unknowns are selected. These unknowns (variables) are called basic, the rest are free. The number of basic variables is always equal to the number of constraints.

Assigning certain values to free variables, and calculating the values of the basic ones (expressed in terms of free ones), various solutions to the system of constraints are obtained. Of particular interest are the solutions obtained in the case when the free variables are equal to zero. Such solutions are called basic. A basic solution is called an admissible basic solution or a support solution if the values of the variables in it are non-negative. It meets all the restrictions.

Having a system of restrictions, any basic solution of this system is found. If the first found basic solution turned out to be admissible, then it is checked for optimality. If it is not optimal, then the transition to another admissible basic solution is carried out.

The simplex method guarantees that with this new solution the linear form, if it does not reach the optimum, will approach it. With a new admissible basic solution, they do the same until they find a solution that is optimal.

If the first found basic solution turns out to be unacceptable, then using the simplex method, the transition to other basic solutions is carried out until at some step of the solution the basic solution turns out to be admissible, or it can be concluded that the system of constraints is inconsistent.

Thus, the application of the simplex method falls into two stages:

Finding an admissible basic solution to a system of restrictions or establishing the fact of its incompatibility;

Finding the optimal solution in the case of compatibility of the system of constraints.

The algorithm for moving to the next feasible solution is as follows:

In the row of the objective function coefficients, the smallest negative number is selected when finding the maximum. The serial number of the coefficient is. If there is none, then the original basic solution is optimal;

Among the elements of the matrix with the column number (this column is called the leading, or permissive), positive elements are selected. If there are none, then the objective function is unbounded on the range of admissible values of the variables and the problem has no solutions;

Among the selected elements of the leading column of the matrix, the one for which the value of the ratio of the corresponding free term to this element is minimal is selected. This element is called the leading, and the line in which it is located is called the leading;

The basic variable corresponding to the row of the leading element must be converted into the category of free ones, and the free variable corresponding to the column of the leading element must be entered into the number of basic ones. A new solution is constructed containing new numbers of basic variables.

The condition for the optimality of the plan when solving the maximum problem: there are no negative elements among the coefficients of the objective function.

General formulation of the linear programming problem (LPP). Examples of LPP

Linear programming is a branch of mathematics that studies methods for solving extreme problems, which are characterized by a linear relationship between variables and a linear criterion of optimality. A few words about the very term linear programming. It requires correct understanding. In this case, programming is, of course, not writing computer programs. Programming here should be interpreted as planning, the formation of plans, the development of an action program. Mathematical problems of linear programming include the study of specific production and economic situations, which in one form or another are interpreted as problems of the optimal use of limited resources. The range of tasks solved using linear programming methods is quite wide. For example:

- - the problem of the optimal use of resources in production planning;

- - the problem of mixtures (planning the composition of products);

- - the problem of finding the optimal combination of various types of products for storage in warehouses (inventory management or "knapsack problem");

- - transport tasks (analysis of the location of the enterprise, movement of goods). Linear programming is the most developed and widely used branch of mathematical programming (in addition, this includes: integer, dynamic, nonlinear, parametric programming). This is due to the following:

- - mathematical models of a large number of economic problems are linear with respect to the sought variables;

- - this type of problem is currently the most studied. For him, special methods have been developed with the help of which these tasks are solved, and the corresponding computer programs;

- - many problems of linear programming, having been solved, have found wide application;

- - some problems, which in the original formulation are not linear, after a number of additional restrictions and assumptions can become linear or can be reduced to such a form that they can be solved by linear programming methods. The economic and mathematical model of any linear programming problem includes: an objective function, the optimal value of which (maximum or minimum) needs to be found; constraints in the form of a system of linear equations or inequalities; requirement of non-negativity of variables. In general terms, the model is written as follows:

- - objective function:

C1x1 + c2x2 + ... + cnxn> max (min); - restrictions:

a11x1 + a12x2 + ... + a1nxn (? =?) b1,

a21x1 + a22x2 + ... + a2nxn (? =?) b2

am1x1 + am2x2 + ... + amnxn (? =?) bm;

Non-negativity requirement:

In this case, aij, bi, cj () are given constants. The problem is to find the optimal value of function (2.1) subject to constraints (2.2) and (2.3). The system of constraints (2.2) is called the functional constraints of the problem, and constraints (2.3) are called direct. A vector satisfying constraints (2.2) and (2.3) is called a feasible solution (plan) of the linear programming problem. The design in which function (2.1) reaches its maximum (minimum) value is called optimal.

Below are examples of some typical problems solved using linear programming methods. Such tasks have real economic content. Now we will only formulate them in terms of LPP, and we will consider methods for solving such problems below.

1. The problem of the optimal use of resources in production planning. The general meaning of the tasks of this class is as follows. The enterprise produces n different products. Their production requires m different types of resources (raw materials, materials, labor time, etc.). Resources are limited, their reserves in the planning period are, respectively, b1, b2, ..., bm conventional units. There are also known technological coefficients aij, which show how many units of the i-th resource are required to produce a unit of the product of the j-th type (). The profit received by the enterprise from the sale of the product of the j-th type is equal to cj. In the planning period, the values of aij, bi and cj remain constant. It is required to draw up such a production plan, in the implementation of which the profit of the enterprise would be the greatest. Below is a simple example of a task of this class.

The company specializes in the production of hockey sticks and chess sets. Each club generates $ 2 in profit for the company, and $ 4 for each chess set. It takes four hours to make one club in Site A and two hours in Site B. A chess set is made with six hours in Site A, six hours in Site B and one hour in Site C. The available production capacity in Site A is 120 Nm. - hours a day, section B - 72 n-hours and section C - 10 n-hours. How many clubs and chess sets should a company produce daily to maximize profits?

The conditions for problems of this class are often presented in tabular form (see Table 2.1).

Under this condition, we formulate a linear programming problem. Let us denote: x1 - the number of hockey sticks produced daily, x2 - the number of chess sets produced daily. ZLP wording:

4x1 + 6x2? 120,

Let us emphasize that each inequality in the system of functional constraints corresponds in this case to one or another production site, namely: the first to site A, the second to site B, and the third to site C.

The system of variables in the problem of optimizing the structure of sown areas, taking into account crop rotations

The general linear programming problem (LPP) is formulated as follows - find the variables of the problem x 1 , x 2 , ..., x n, which provide the extremum of the objective function

An admissible solution (plan) of a linear programming problem (LPP) is any n-dimensional vector X=(x 1 , x 2 , ..., x n) satisfying the system of equality and inequality constraints. The set of feasible solutions to the problem forms the area of feasible solutions D.

The optimal solution (plan) of a linear programming problem is a feasible solution such that the objective function Z(X) reaches an extreme.

The canonical linear programming problem (LCPP) has the form

(1.2)

(1.2)

It differs from OZLP in that its system of constraints is a system of only equations and all variables are nonnegative.

Bringing OZLP to the canonical form of ZLP:

To replace the original minimization problem with the maximization problem (or vice versa, the maximization problem with the minimization problem), it is enough to multiply the objective function by "-1" and look for the maximum (minimum) of the obtained function;

If there are inequalities among the constraints, then by introducing additional nonnegative variables x n +1 ≥ 0 they convert to equalities:

inequality a i 1 x 1 +…+a in x n ≥ b i is replaced by equality a i 1 x 1 +…+a in x n + x n +1 = b i,

inequality a i 1 x 1 +…+a in x n ≤ b i is replaced by equality a i 1 x 1 +…+a in x n + x n +1 = b i;

If some variable x k has no sign restrictions, then it is replaced (in the objective function and in all restrictions) by the difference between two new non-negative variables: x k = x" k – x” k , Where x" k ≥ 0. x” k ≥ 0.

Graphical method for solving LPP with two unknowns

The LPP with two unknowns has the form:

The method is based on the possibility of graphically displaying the area of feasible solutions and finding the optimal solution among them.

The domain of feasible solutions (ODS) of the problem is a convex polygon and is constructed as the intersection (common part) of the domains of solutions to each of the inequalities of the constraints of the problem.

The solution domain for the inequality a i 1 x 1 +a i 2 x 2 ≤ b i is one of the two half-planes to which the line a i 1 x 1 +a i 2 x 2 = b The i corresponding to this inequality divides the coordinate plane. To determine which of the two half-planes is the region of solutions, it is sufficient to substitute the coordinates of some point that does not lie on the dividing line into the inequality:

If the inequality is true, then the domain of solutions is the half-plane containing this point;

If the inequality is not true, then the domain of solutions is a half-plane that does not contain this point.

Level lines are used to find the optimal solution among the feasible solutions.

The level line is called a straight line. from 1 x 1 +from 2 x 2 = l, Where l= const, on which the objective function of the task takes on a constant value. All level lines are parallel to each other.

Objective function gradient grad Z(X) defines the normal vector C = (c 1 , c 2) level lines. The objective function on the level lines increases if the level lines are moved in the direction of their normal, and decreases in the opposite direction.

The reference line is a level line that has at least one common point with the ODR and with respect to which the ODR is located in one of the half-planes. The IDD of the problem has no more than two support lines.

The optimal solution of the ZLP lies on the support line at the corner point of the ODR polygon. The ZLP has a unique solution if the support line passes through one corner point of the ODR, an infinite set of solutions if the support line passes through the edge of the ODR polygon. The LPP has no solution if the ODR is an empty set (when the system of constraints is inconsistent) and if the ODR is unbounded in the direction of the extremum (the objective function is unbounded).

Algorithm of a graphical method for solving LPP with two unknowns:

Build an SDT.

Construct normal vector C = (c 1 , c 2) and level line from 1 x 1 +from 2 x 2 = 0 passing through the origin and perpendicular to the vector FROM.

Move the level line to the reference line in the direction of the vector FROM in the problem for max, or in the opposite direction - in the problem for min.

If, when the level line moves in the direction of the extremum, the ODR goes to infinity, then the LPP has no solution due to the unboundedness of the objective function.

If the LPP has an optimal solution, then to find it, solve jointly the equations of the straight lines that limit the GDR and have common points with the support line. If the extremum is reached at two corner points, then the LPP has an infinite set of solutions belonging to the edge of the ODR bounded by these corner points. In this case, the coordinates of both corner points are calculated.

Calculate the value of the objective function at the extremum point.

Simplex method for solving LPP

The simplex method is based on the following provisions:

The ODS of a linear programming problem is a convex set with a finite number of corner points;

The optimal solution of the LPP is one of the corner points of the SDT. The corner points of the ODR represent algebraically some basic (support) solutions of the LPP constraint system.

The basic (support) solution of the LPP is called such an admissible solution X 0 =(x 10 , x 20 , ..., x m 0, 0, ... 0), for which the vectors of conditions (columns of coefficients with unknowns in the system of constraints) are linearly independent.

Non-zero coordinates x 10 , x 20 , ..., x m 0 solutions X 0 are called basic variables, the remaining coordinates of the solution X 0 - free variables. The number of nonzero coordinates of the reference solution cannot be greater than the rank r systems of LPP constraints (the number of linearly independent equations in the system of LPP constraints). Further, we assume that the system of LPP constraints consists of linearly independent equations, i.e. r = m.

The meaning of the simplex method consists in a purposeful transition from one reference solution of the LPP to another (i.e., from one corner point of the SDT to another) in the direction of the extremum and consists of a sequence of stages:

Find the initial support solution;

Carry out the transition from one reference solution to another;

Determine the criterion for achieving the optimal solution or conclude that there is no solution.

Execution AlgorithmSimplex method ZLP

The algorithm of the simplex method makes the transition from one reference solution of the LPP to another in the direction of the extremum of the objective function.

Let the LPP be given in the canonical form (1.2) and the condition

b i ≥ 0, i=1,2,…,m, (1.3)

relation (1.3) can always be fulfilled by multiplying the corresponding equation by "-1" in the case of negativity b i. We also assume that the system of equations in the constraints of problem (1.2) is linearly independent and has rank r = m... In this case, the vector of the support solution has m nonzero coordinates.

Let the original problem (1.2), (1.3) be reduced to the form where the basic variables x 1 , x 2 , ..., x m are expressed in terms of free variables x m + 1 , x m + 2 , ..., x n

(1.4)

(1.4)

Based on these ratios, we construct table 1

Table 1.

Table 1 is called a simplex table. All further transformations are associated with changes in the content of this table.

Algorithm withimplex method:

1. In the last line Z simplex tables in the min problem find the smallest positive element (in the max problem - the smallest negative element), not counting the free term. The column corresponding to this element is called permissive.

2. Calculate the ratio of free members to the positive elements of the resolving column (simplex ratio). Find the smallest of these simplex relations, it matches the resolving string.

3. At the intersection of the resolving line and the resolving column is the resolving element.

4. If there are several simplex - relations of the same size, then choose any of them. The same applies to the positive elements of the last row of the simplex table.

5. After finding the resolving element, go to the next table. Unknown variables corresponding to the resolving row and column are swapped. In this case, the basic variable becomes a free variable and vice versa. Simplex - the table is converted as follows (table 2):

table 2

6. The element of table 2, corresponding to the permissive element of table 1, is equal to the reciprocal of the permissive element.

7. Elements of the row of table 2, corresponding to the elements of the permitting line of table 1, are obtained by dividing the corresponding elements of table 1 by the permitting element.

8. The elements of the column of table 2, corresponding to the elements of the permissive column of table 1, are obtained by dividing the corresponding elements of table 1 by the permissive element and are taken with the opposite sign.

9. The rest of the elements are calculated by rectangle rule: mentally draw a rectangle in table 1, one vertex of which coincides with the resolving element (Re), and the other with the element that we are looking for; let us designate the element in the new table 2 through (Ne), and the element standing in the same place in the old table 1 - through (Se). The other two vertices A and B complete the shape to a rectangle. Then the required element Ne from Table 2 is equal to Ne = Se - A * B / Re.

10. Criterion of optimality. As soon as you get a table in which all elements are negative in the last row in the problem for min (in the problem for max all elements are positive), it is considered that the extremum has been found. The optimal value of the objective function is equal to the free term in the line Z, and the optimal solution is determined by the free terms with the basic variables. All free variables are set equal to zero.

11. If in the resolving column all elements are negative, then the problem has no solutions (the minimum is not achieved).

Method of artificial basis for solving the LPP

The algorithm of the simplex method is applicable if some support solution of the LPP is selected, i.e., the original LPP (1.2) is reduced to the form (1.4). The artificial basis method offers a procedure for constructing such a reference solution.

The artificial basis method is based on the introduction of artificial basis variables y 1 , y 2 ,…, y m, with which the system of LPP constraints (2.2)

(1.5)

(1.5)

can be converted to form

(1.6)

(1.6)

Systems (1.5) and (1.6) are equivalent if all y i will be equal to zero. As before, we believe that everything b i ≥ 0. In order to at i were equal to 0, we must transform the problem in such a way that all artificial basic variables y i passed into free variables. Such a transition can be done by the algorithm of the simplex method with respect to the additional objective function

F(y) = y 1 + y 2 + ... + y m = d 0 – (d 1 x 1 +d 2 x 2 +…+d n x n). (2.7)

The original simplex table for this method is

First, the simplex table is transformed relative to the objective function F(y) until the reference solution is obtained. The reference solution is found when the following criterion is met: F(y) = 0 and all artificial variables at i translated into free variables. Then a line is deleted from the simplex table for F(y) and columns for at i and solve the problem for the original objective function Z(x) until an optimal solution is obtained.

A linear programming problem (LPP) is the problem of finding the largest (or smallest) value of a linear function on a convex polyhedral set.

The simplex method is a method for solving a linear programming problem. The essence of the method is to find an initial feasible plan, and in the subsequent improvement of the plan until the maximum (or minimum) value of the objective function is reached in a given convex polyhedral set or clarification of the unsolvability of the problem.

Consider the following linear programming problem in canonical form:

| (1) |

| (2) |

| (3) |

Artificial basis method

As shown above, for a problem written in canonical form, if among the column vectors of the matrix A there is m single and linearly independent, you can specify the baseline directly. However, for many linear programming problems written in canonical form and having support plans, among the column vectors of the matrix A not always there m single and linearly independent. Consider the following problem:

Let it be required to find the maximum of the function

under conditions

where are the first n elements are zeros. Variables ![]() are called artificial. Vectors columns

are called artificial. Vectors columns

| (28) |

form the so-called artificial basis m-dimensional vector space.

Since the extended problem has a basic plan, its solution can be found using the simplex method.

Theorem 4. If in the optimal plan ![]() extended problem (24) - (26) values of artificial variables

extended problem (24) - (26) values of artificial variables ![]() then

then ![]() is the optimal plan for problem (21) - (23).

is the optimal plan for problem (21) - (23).

Thus, if in the found optimal plan of the extended problem, the values of the artificial variables are equal to zero, then the optimal plan of the original problem is obtained. Let us dwell in more detail on finding a solution to the extended problem.

The value of the objective function with the reference plan (27):

Note that F (X) and consist of two independent parts, one of which depends on M and the other is not.

After calculating F (X) and their values, as well as the initial data of the extended problem, are entered into a simplex table, as shown above. The only difference is that this table contains one more row than a regular simplex table. Moreover, in ( m+1) -th row are placed coefficients that do not contain M, and in ( m+2) -th row - coefficients at M.

When moving from one reference plan to another, a vector is introduced into the basis that corresponds to the largest negative number in absolute value ( m+2) strings. An artificial vector excluded from the basis does not make sense to reintroduce it into the basis. When switching to another reference plan, it may happen that none of the artificial vectors from the basis will be excluded. Recalculation of a simplex table when moving from one base plan to another is performed according to the usual rules of the simplex method (see above).

The iterative process is carried out according to m+2 line as long as the elements m+2 lines corresponding to variables ![]() will not become non-negative. In this case, if artificial variables are excluded from the basis, then the found plan of the extended problem corresponds to some basic plan of the original problem.

will not become non-negative. In this case, if artificial variables are excluded from the basis, then the found plan of the extended problem corresponds to some basic plan of the original problem.

m+2 rows, columns x 0 is negative, then the original problem has no solution.

If not all artificial variables are excluded from the basis and the element m+2 rows, columns x 0 equals zero, then the base plan of the original problem is degenerate and the basis contains at least one of the vectors of the artificial basis.

If the original problem contains several unit vectors, then they should be included in the artificial basis.

If during iterations m+2 line no longer contains negative elements, then the iterative process continues with m+1 line, until the optimal plan of the extended problem is found or the undecidability of the problem is revealed.

Thus, the process of finding a solution to the linear programming problem (21) - (23) by the artificial basis method includes the following main stages:

- The extended problem (24) - (26) is constituted.

- Find the basic plan of the extended task.

- Using the simplex method, artificial vectors are excluded from the basis. As a result, the basic plan of the original problem is found or its undecidability is fixed.

- Using the found support plan of the LPP (21) - (23), either the optimal plan of the original problem is found, or its undecidability is established.

To solve linear programming problems online, use a calculator

Components of the mathematical model

Mathematical models are the basis for solving economic problems.

Compilation of a mathematical model includes:Mathematical model task is a set of mathematical relationships that describe the essence of the task.

- selection of task variables

- drawing up a system of restrictions

- choice of objective function

Variables tasks the values X1, X2, Xn are called, which fully characterize the economic process. Usually they are written as a vector: X = (X1, X2, ..., Xn).

A system of restrictions problems are called a set of equations and inequalities that describe the limited resources in the problem under consideration.

Target function the task is called the function of the variables of the task, which characterizes the quality of the task and the extremum of which you want to find.

In general, a linear programming problem can be written as follows:

This notation means the following: find the extremum of the objective function (1) and the corresponding variables X = (X1, X2, ..., Xn), provided that these variables satisfy the system of constraints (2) and non-negativity conditions (3).

A valid solution(plan) of a linear programming problem is any n-dimensional vector X = (X1, X2, ..., Xn) that satisfies the system of constraints and non-negativity conditions.

The set of feasible solutions (plans) of the problem forms range of feasible solutions(ODR).

An example of compiling a mathematical model The problem of using resources (raw materials)The optimal solution(plan) of a linear programming problem is a feasible solution (plan) of the problem at which the objective function reaches an extremum.

Condition: For the manufacture of n types of products, m types of resources are used. Make a mathematical model.

Known:

- bi (i = 1,2,3, ..., m) - reserves of each i-th type of resource;

- aij (i = 1,2,3, ..., m; j = 1,2,3, ..., n) - the costs of each i-th type of resource for the production of a unit of volume of the j-th type of product;

- cj (j = 1,2,3, ..., n) - profit from the sale of a unit of volume of the j-th type of product.

It is required to draw up a plan for the production of products, which provides maximum profit for given constraints on resources (raw materials).

Decision:

We introduce the vector of variables X = (X1, X2, ..., Xn), where xj (j = 1,2, ..., n) is the production volume of the j-th type of product.

The costs of the i-th type of resource for the manufacture of a given volume xj of products are equal to aijxj, therefore, the restriction on the use of resources for the production of all types of products is as follows:

Profit from the sale of the j-th type of product is equal to cjxj, therefore the objective function is equal to:

Answer- The mathematical model is as follows:

The Canonical Form of the Linear Programming Problem

In the general case, a linear programming problem is written so that both equations and inequalities are constraints, and the variables can be both non-negative and arbitrarily variable.

In the case when all constraints are equations and all variables satisfy the nonnegativity condition, the linear programming problem is called canonical.

It can be represented in coordinate, vector and matrix notation.

The canonical linear programming problem in coordinate notation has the form:

The canonical linear programming problem in matrix notation has the form:

- A is the matrix of coefficients of the system of equations

- X - matrix-column of task variables

- Ao - matrix-column of the right sides of the system of restrictions

Often used are linear programming problems, called symmetric, which in matrix notation have the form:

Reducing the general linear programming problem to the canonical form

In most methods for solving linear programming problems, it is assumed that the system of constraints consists of equations and natural conditions for non-negativity of variables. However, when compiling models of economic problems, constraints are mainly formed in the form of a system of inequalities, so it is necessary to be able to move from a system of inequalities to a system of equations.

This can be done as follows:Take the linear inequality a1x1 + a2x2 + ... + anxn≤b and add some value xn + 1 to its left side, such that the inequality turns into the equality a1x1 + a2x2 + ... + anxn + xn + 1 = b. Moreover, this value xn + 1 is non-negative.

Let's consider everything with an example.

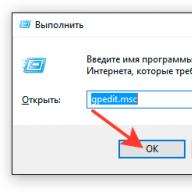

Example 26.1Bring the linear programming problem to the canonical form:

Decision:

Let us turn to the problem of finding the maximum of the objective function.

To do this, we change the signs of the objective function coefficients.

To transform the second and third inequalities of the system of constraints into equations, we introduce non-negative additional variables x4 x5 (on the mathematical model, this operation is marked with the letter D).

The variable x4 is introduced in the left-hand side of the second inequality with the "+" sign, since the inequality has the form "≤".

The variable x5 is introduced in the left side of the third inequality with the "-" sign, since the inequality has the form "≥".

Variables x4 x5 are entered into the objective function with a coefficient. equal to zero.

We write the task in the canonical form: